Research

Dynamic Scene Graphs for Extracting Activity-based Intelligence

PI: Dr. Yu Kong, Co-PI: Dr. Qi Yu.

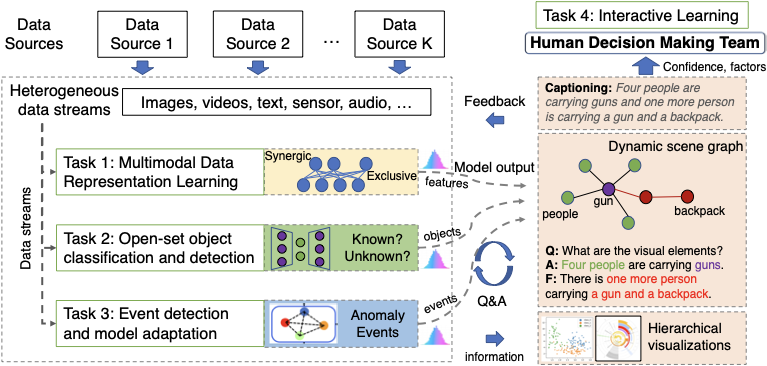

The primary objective of this project is to explore a dynamic learning framework that effectively extracts activity-based intelligence (ABI) in various highly complicated mission operations. This project plans to develop Dynamic Scene Graphs over large-scale multimodal time series data for representation learning. The new representations will enable learning from complex and dynamic environments, where a variety of vision tasks can be achieved, including open-set object classification and detection, event detection, question-answering, and dense captioning. The proposed framework will achieve the following four properties: (i) Combining the strengths of multimodal data for learning effective representations. (ii) Enabling open-set object classification and detection by learning the boundary of data distributions. This allows the proposed framework to understand novel objects in open-world scenarios. (iii) Effective event detection and model adaptation from limited samples. The proposed event detection model is practical because it can be efficiently adapted to novel events using few training samples. (iv) Interactive learning with human users via active question-answering and semantic description generation. This project will create a novel framework that allows users to communicate with machines and integrate human knowledge into them. Together, these properties enable the extraction of ABI to support mission operations.

Generalized Visual Forecasting

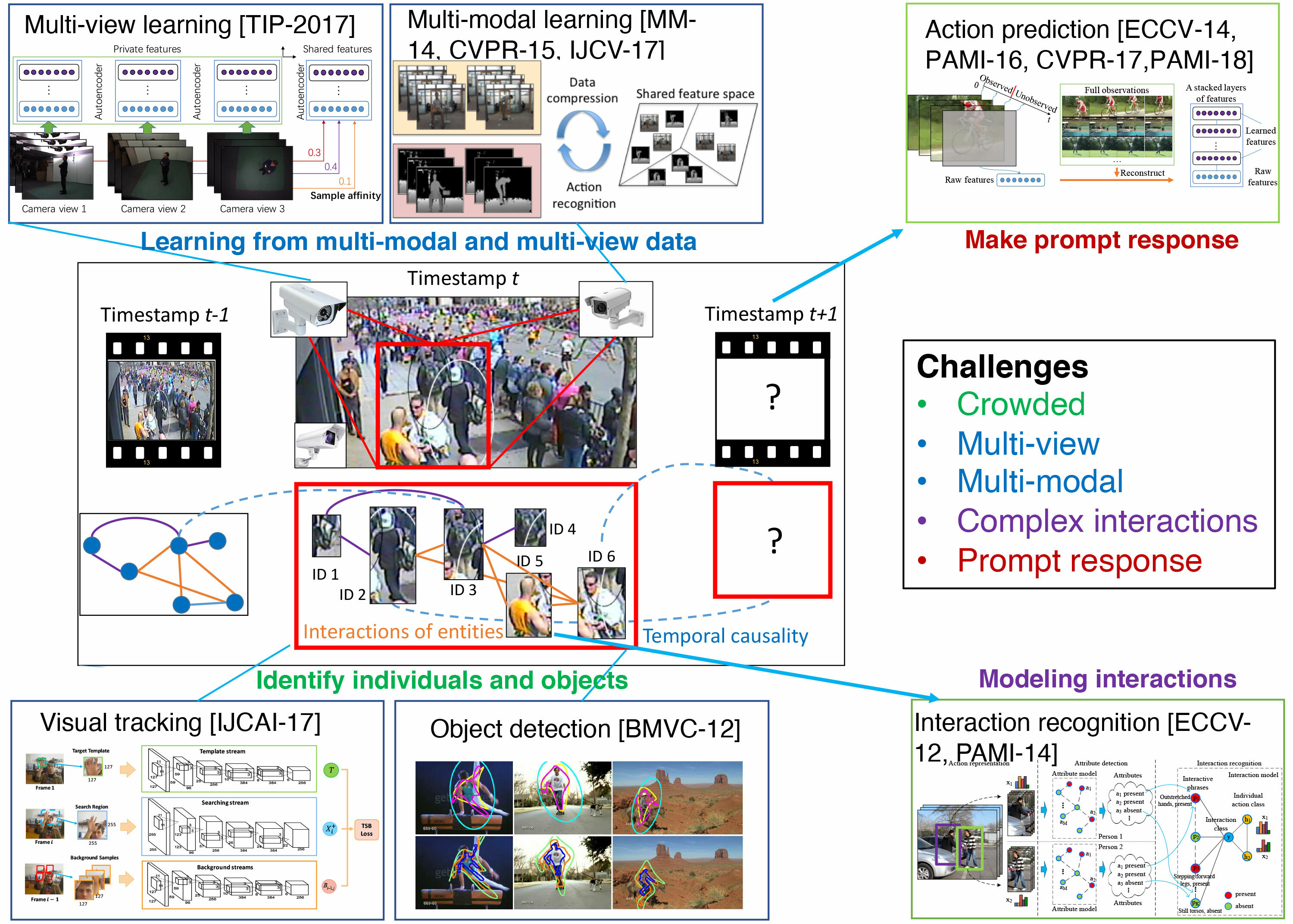

Seeing into the future is one of the most appealing powers, featured in hundreds of movies and books. Researchers in computer vision have developed various methods in an attempt to gain this power, including human action prediction, intention prediction, trajectory prediction, and video prediction. These prediction methods enhance technology’s capability of seeing into the future, which has broad applications in practical scenarios where high-risk events may happen in the near future, such as crime prevention, traffic accident avoidance, and health care. In these scenarios, it is essential to predict events before they happen rather than to perform after-the-fact analysis. In this project, we plan to focus on the visual surveillance domain and create a group of computational algorithms for visual prediction in uncontrolled scenarios.

- Yu Kong and Yun Fu. Human Action Recognition and Prediction: A Survey. arXiv:1806.11230

- Kunpeng Li, Yu Kong, and Yun Fu. Visual Object Tracking via Multi-Stream Deep Similarity Learning Networks. IEEE Transactions on Image Processing (T-IP), 2020. Accepted

- Yu Kong, Zhiqiang Tao, Yun Fu. Adversarial Action Prediction Networks. IEEE Trans. Pattern Analysis and Machine Intelligence (T-PAMI), 2018. Accepted

- Yu Kong, Shangqian Gao, Bin Sun, and Yun Fu. Action Prediction from Videos via Memorizing Hard-to-Predict Samples. AAAI Conference on Artificial Intelligence (AAAI), 2018.

- Yu Kong and Yun Fu. Max-Margin Heterogeneous Information Machine for RGB-D Action Recognition. International Journal of Computer Vision (IJCV), 123(3):350-371, 2017

- Yu Kong, Zhiqiang Tao, Yun Fu. Deep Sequential Context Networks for Action Prediction. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

- Kunpeng Li, Yu Kong, and Yun Fu. Multi-Stream Deep Similarity Learning Networks for Visual Tracking. International Joint Conference on Artificial Intelligence (IJCAI), pp. 2166-2172, 2017

- Yu Kong and Yun Fu. Max-Margin Action Prediction Machine. IEEE Trans. Pattern Analysis and Machine Intelligence (T-PAMI), 38(9):1844-1858, 2016.

- Yu Kong and Yun Fu. Bilinear Heterogeneous Information Machine for RGB-D Action Recognition. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1054-1062, 2015.

- Yu Kong, Dmitry Kit, and Yun Fu. A Discriminative Model with Multiple Temporal Scales for Action Prediction. European Conference on Computer Vision (ECCV), pp. 596-611, 2014

- Yu Kong, Yunde Jia, and Yun Fu. Interactive Phrases: Semantic Descriptions for Human Interaction Recognition. IEEE Trans. Pattern Analysis and Machine Intelligence (T-PAMI), 36(9):1775-1788, 2014.

- Yu Kong, Yunde Jia, and Yun Fu. Learning Human Interactions by Interactive Phrases. European Conference on Computer Vision (ECCV), pp. 300-313, 2012

- Zhi Yang, Yu Kong, and Yun Fu. Contour-HOG: A Stub Feature based Level Set Method for Learning Object Contour. British Machine Vision Conference (BMVC), pp. 1-11, 2012.

A Multimodal Dynamic Bayesian Learning Framework for Complex Decision-making

PI: Dr. Qi Yu, Co-PIs: Dr. Daniel Krutz and Dr. Yu Kong.

October 2018 - September 2022

The overall goal of this project is to explore a multimodal dynamic Bayesian learning framework to facilitate complex decision-making in various highly complicated scenarios and tasks. The proposed framework aims to provide comprehensive decision support to maximize the overall effectiveness of complex decision-making. The project will focus on four main methods: (1) analyzing large-scale, heterogeneous, and dynamic data streams from multiple sources and extracting high-level, meaningful features; (2) algorithmically fusing multimodal data streams and providing decision recommendations with interpretable justifications, uncertainty evaluation, and estimated costs to prioritize and coordinate multiple tasks; (3) identifying sources of uncertainty and offering informative guidance for cost-effective information gathering; and (4) visualizing model outcomes and allowing intuitive interaction with human users for collaborative learning and continuous model improvement to achieve high-quality decisions.

- Wentao Bao, Qi Yu, and Yu Kong. Uncertainty-based Traffic Accident Anticipation with Spatio-Temporal Relational Learning. 28th ACM International Conference on Multimedia (MM), 2020. [Paper, Code, Data]

- Junwen Chen, Wentao Bao, Yu Kong. Group Activity Prediction with Sequential Relational Anticipation Model. European Conference on Computer Vision (ECCV), 2020.

- Wentao Bao, Qi Yu, Yu Kong. Object-Aware Centroid Voting for Monocular 3D Object Detection. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020. [Paper, Demo]

- Junwen Chen, Haiting Hao, Hanbin Hong, and Yu Kong. RIT-18: A Novel Dataset for Compositional Group Activity Understanding. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshop, 2020. [Paper, RIT-18 Dataset]