Brain to Text

Bridging Minds and Machines: Using EEG, EOG, and AI to decode thoughts into words

Team Members:

- Abdulla Alserkal (EE)

- Ahmad Qusai (EE)

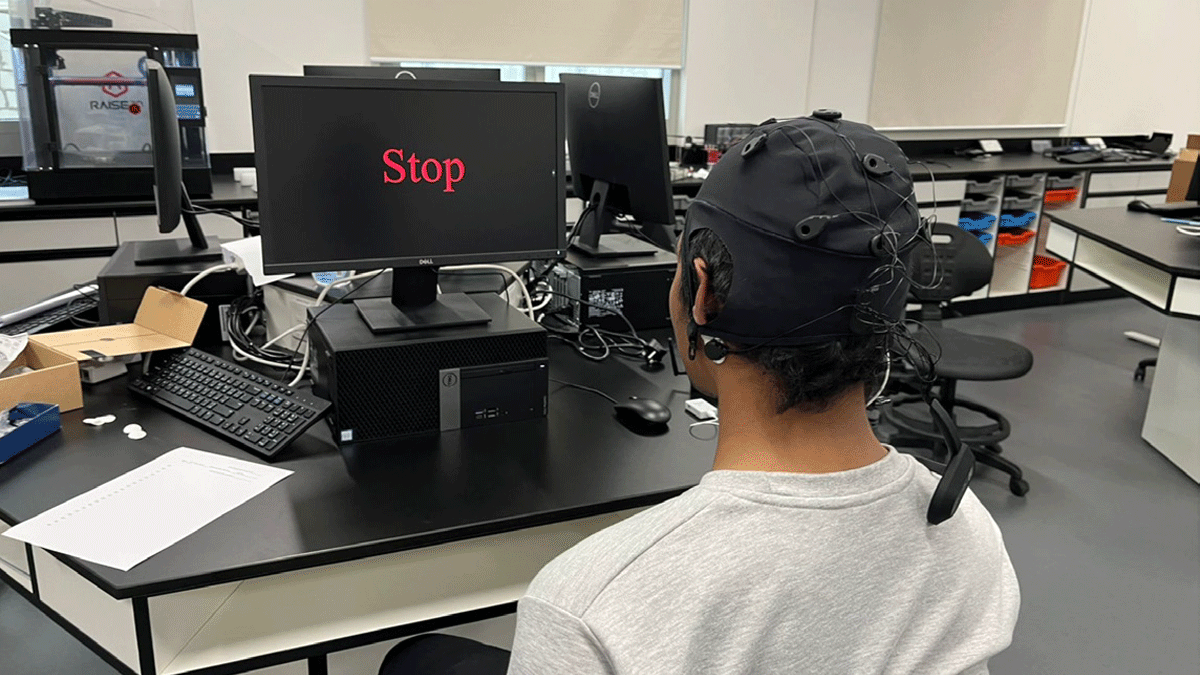

This study presents a methodological framework that uses electroencephalography (EEG) and electrooculography (EOG) to extract linguistic content, with the aim of improving communication for individuals with cognitive impairments, such as stroke survivors. Participants are shown words, each linked to a unique color, while raw EEG and EOG data are collected during one-minute contemplation intervals.

These data are used to train five AI models: k-nearest neighbors (KNN), support vector machine (SVM), decision tree (DT), random forest (RF), and a customized VGG16 adapted to interpret CSV datasets. The methodology is validated through experiments with around 50 participants whose educational backgrounds range from bachelor's to PhD level, which allows an assessment of how education affects the quality of the data used for AI model training. Supervised learning models are used predominantly because of their superior accuracy with limited datasets.
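To make the classification step concrete, the following is a minimal sketch of one of the five model types, KNN, written in plain Python. It is not the study's implementation: the word labels, the three-value feature vectors, the noise level, and the helper names `knn_predict` and `make_synthetic_trials` are all assumptions introduced for illustration, and the synthetic vectors merely stand in for features that would be read from the recorded CSV files.

```python
import math
import random
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Return the majority label among the k training vectors nearest to query."""
    # Sort labeled training vectors by Euclidean distance to the query.
    nearest = sorted(
        zip(train_X, train_y),
        key=lambda pair: math.dist(pair[0], query),
    )[:k]
    # Majority vote; on a tie, the label of the closest neighbor wins.
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def make_synthetic_trials(centers, n_per_word=20, noise=0.3, seed=0):
    """Generate noisy feature vectors around one center per word (illustrative only)."""
    rng = random.Random(seed)
    X, y = [], []
    for word, center in centers.items():
        for _ in range(n_per_word):
            X.append([c + rng.gauss(0, noise) for c in center])
            y.append(word)
    return X, y


# Hypothetical words and feature centers; real features would come from EEG/EOG CSV data.
CENTERS = {
    "red": [0.0, 0.0, 0.0],
    "green": [3.0, 3.0, 0.0],
    "blue": [0.0, 3.0, 3.0],
}

X, y = make_synthetic_trials(CENTERS)

# Hold out every fourth trial for evaluation; train on the rest.
train_X = [x for i, x in enumerate(X) if i % 4 != 0]
train_y = [label for i, label in enumerate(y) if i % 4 != 0]
test_X = [x for i, x in enumerate(X) if i % 4 == 0]
test_y = [label for i, label in enumerate(y) if i % 4 == 0]

correct = sum(
    knn_predict(train_X, train_y, x) == label
    for x, label in zip(test_X, test_y)
)
accuracy = correct / len(test_y)
print(f"held-out accuracy: {accuracy:.2f}")
```

The synthetic clusters here are deliberately well separated, so the accuracy printed by this sketch says nothing about performance on real recordings. In practice, a library such as scikit-learn would likely be preferred for all four classical models, since it provides them behind a common fit/predict interface.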

Furthermore, the study integrates the AI models with a 3D avatar, generated from participant images, for real-time communication feedback. When an AI model detects a pattern corresponding to a presented word, the avatar generates a video articulating that word, enriching the interactive communication experience.
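The hand-off from a model's output to the avatar could be sketched as follows. This is a hypothetical illustration only: the confidence threshold, the per-word score dictionary, the function names `detect_word`, `avatar_request`, and `on_prediction`, and the shape of the request are assumptions, since the study does not specify how detection is decided or how the avatar is driven.

```python
from typing import Mapping, Optional


def detect_word(scores: Mapping[str, float], threshold: float = 0.6) -> Optional[str]:
    """Return the top-scoring word if its score meets the threshold, else None."""
    if not scores:
        return None
    word, score = max(scores.items(), key=lambda item: item[1])
    return word if score >= threshold else None


def avatar_request(word: str) -> dict:
    """Build a hypothetical request asking the avatar to articulate a word."""
    return {"action": "speak", "word": word}


def on_prediction(scores: Mapping[str, float], threshold: float = 0.6) -> Optional[dict]:
    """Turn one set of model scores into an avatar request, or None if no word is detected."""
    word = detect_word(scores, threshold)
    return avatar_request(word) if word is not None else None


# A confident prediction triggers the avatar; an ambiguous one does not.
print(on_prediction({"red": 0.8, "green": 0.1, "blue": 0.1}))
print(on_prediction({"red": 0.4, "green": 0.35, "blue": 0.25}))
```

Gating on a threshold is one possible design choice: it keeps the avatar silent when no word stands out clearly, at the cost of occasionally missing a correct but low-confidence detection.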