grounding

Activity-driven Weakly-Supervised Spatio-Temporal Grounding from Untrimmed Videos

MM 2020

[Paper], [Code]

Junwen Chen, Wentao Bao, Yu Kong

Rochester Institute of Technology

Presentation

Please watch the video if Youtube doesn't work with you.

Abstract

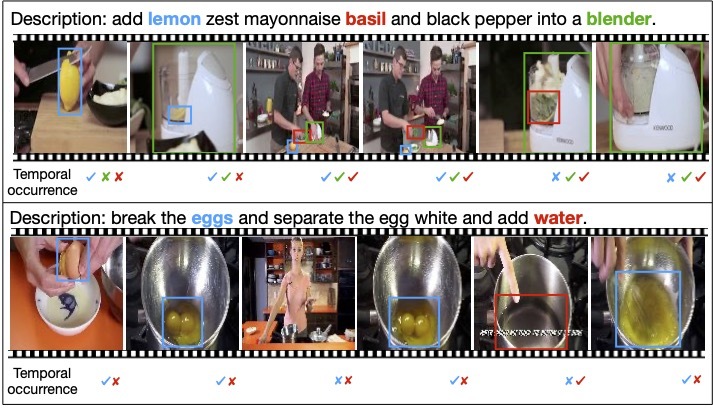

In this paper, we study the problem of weakly-supervised spatio-temporal grounding from raw untrimmed video streams. Given a video and its descriptive sentence, spatio-temporal grounding aims at predicting the temporal occurrence and spatial locations of each query object across frames. Our goal is to learn a grounding model in a weakly-supervised fashion, without the supervision of both spatial bounding boxes and temporal occurrences during training. Existing methods have been addressed in trimmed videos, but their reliance on object tracking will easily fail due to frequent camera shot cut in untrimmed videos. To this end, we propose a novel spatio-temporal multiple instance learning framework for untrimmed video grounding. Spatial MIL and temporal MIL are mutually guided to ground each query to specific spatial regions and the occurring frames of a video. Furthermore, an activity described in the sentence is captured to use the informative contextual cues for region proposal refinement and text representation. We conduct extensive evaluation on YouCookII and RoboWatch datasets, and demonstrate our method outperforms state-of-the-art methods.

CITATION

|

If you find our work helpful to your research, please cite: |

|

@inproceedings{chen2020activity, |