Research

Emerging Devices that Emulate Biology

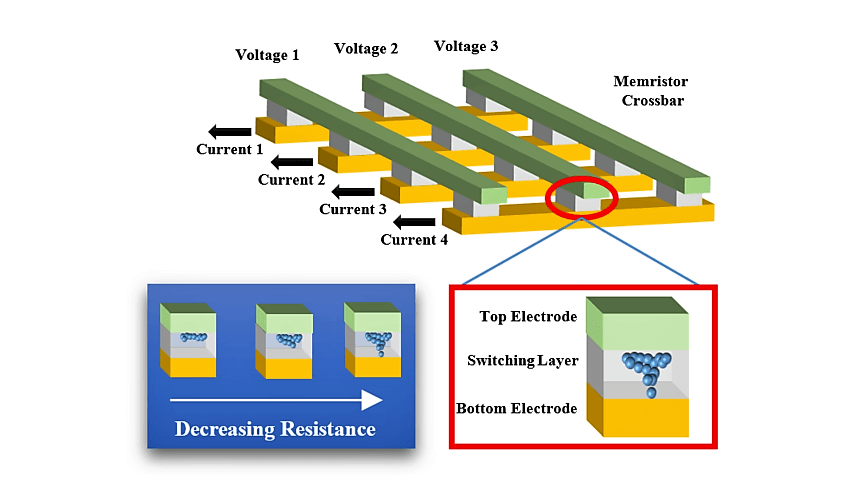

A key aspect of our research is the development and use of beyond-CMOS devices to efficiently implement AI primitives (e.g., synaptic plasticity and neuronal spiking) in hardware. One of our main focuses has been on memristive devices, or memristors, for implementing neuroplastic behavior, especially at the level of synapses. Memristors can simultaneously store data and perform analog multiplications. This close coupling of memory and computation helps to remove the so-called von Neumann bottleneck, offering the potential for significantly better energy efficiency than conventional load-compute-store architectures. Most of our work in this area has focused on integrating memristors into neuron, synapse, and training circuits for neural networks. However, we have also done some semi-empirical modeling and SPICE model development. Other devices of interest include 3- and 4-terminal memristors and biristors for spiking neuron implementation.
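
One common way to use memristors for analog multiplication is a crossbar array: each cross-point device stores a weight as a conductance, Ohm's law makes each device pass a current equal to voltage times conductance, and Kirchhoff's current law sums those currents along each column, so one read step computes a vector-matrix product where the weights are stored. The sketch below models this under idealized assumptions (linear devices, no wire resistance, no sneak-path currents). The conductance range `G_MIN`/`G_MAX` and the differential-pair mapping of signed weights are hypothetical, illustrative choices, not parameters of our devices or circuits.

```python
# Idealized memristor crossbar sketch (hypothetical parameters, not real device data).
# Each weight is stored as a differential pair of conductances (siemens); row voltages
# (volts) drive the array, and each column current is the sum of V * G contributions.
# Assumptions: linear I-V devices, zero wire resistance, no sneak paths.

G_MIN = 1e-6  # hypothetical minimum device conductance (S)
G_MAX = 1e-4  # hypothetical maximum device conductance (S)


def weight_to_pair(w):
    """Map a weight in [-1, 1] to a (G_plus, G_minus) differential conductance pair."""
    span = G_MAX - G_MIN
    g_plus = G_MIN + span * max(w, 0.0)
    g_minus = G_MIN + span * max(-w, 0.0)
    return g_plus, g_minus


def crossbar_mvm(voltages, weights):
    """Return differential column currents (A) for a rows x cols weight matrix."""
    n_cols = len(weights[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            g_plus, g_minus = weight_to_pair(weights[i][j])
            # Ohm's law per device; the column wire sums currents (Kirchhoff's current law).
            currents[j] += v * g_plus - v * g_minus
    return currents
```

Dividing each column current by `G_MAX - G_MIN` recovers the ordinary product of the voltage vector and the weight matrix. For example, `crossbar_mvm([0.2, 0.1], [[0.5, -1.0], [1.0, 0.25]])` returns currents proportional to `[0.2, -0.175]`.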

Energy-Efficient Neural Network Topologies and Training Algorithms

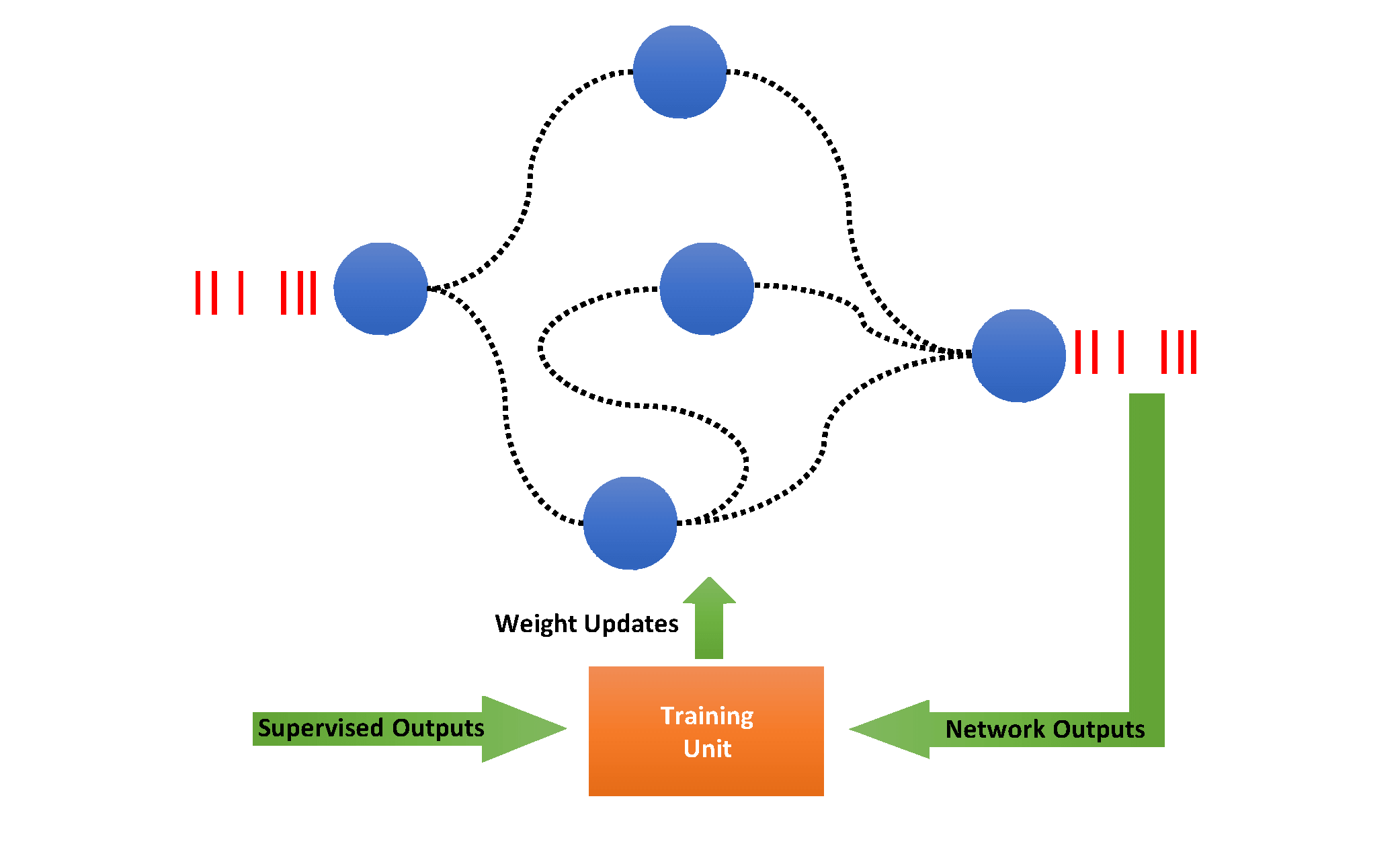

A key theme of the Brain Lab’s research is using the inherent randomness and other unique properties of devices to design energy-efficient neural network topologies and training algorithms. One specific focus has been on so-called “random projection networks,” which are neural networks with random weights and topologies. This type of network fits a target function (e.g., image classification) by pairing linear regression with a large random feature space: the random layers project the inputs into many features, and only a linear readout is trained. The main advantage of random projection networks is that they can be implemented in hardware with fewer resources than other types of networks. Our lab also develops novel training algorithms tailored to in-hardware learning. For example, we have explored the use of stochastic logic to reduce the hardware overhead associated with gradient calculations in supervised learning. Other topics of interest include spiking neural network hardware, energy-harvesting AI, and perturbation-based learning methods.
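
A random projection network can be sketched in the style of an extreme learning machine: a fixed random hidden layer lifts the inputs into a large feature space, and only the linear readout is fit by ridge-regularized linear regression. The `tanh` nonlinearity, Gaussian weights, hidden-layer width, and ridge penalty below are illustrative assumptions. In a hardware implementation the random weights might instead come from device variability, which this sketch does not model.

```python
import numpy as np


def fit_random_projection(X, Y, n_hidden=200, ridge=1e-3, seed=0):
    """Fit a random projection network; only the output weights are learned.

    X: (n_samples, n_inputs), Y: (n_samples, n_outputs). All hyperparameters are
    illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    W_in = rng.normal(size=(X.shape[1], n_hidden))  # fixed random input weights (never trained)
    b = rng.normal(size=n_hidden)                   # fixed random biases
    H = np.tanh(X @ W_in + b)                       # large random nonlinear feature space
    # Linear readout via ridge regression: solve (H^T H + ridge * I) W_out = H^T Y.
    W_out = np.linalg.solve(H.T @ H + ridge * np.eye(n_hidden), H.T @ Y)
    return W_in, b, W_out


def predict(model, X):
    """Apply the fixed random projection, then the trained linear readout."""
    W_in, b, W_out = model
    return np.tanh(X @ W_in + b) @ W_out
```

For example, fitting `np.sin` on 200 points in [-π, π] with `n_hidden=100` takes a single linear solve; no backpropagation through the random layer is involved.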

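Stochastic logic replaces multipliers with single gates by encoding numbers as random bitstreams. In the standard unipolar encoding, a value p in [0, 1] becomes a stream whose bits are 1 with probability p, and the bitwise AND of two independent streams has a density of 1s equal to p·q. The sketch below applies this to the product err·x in a delta-rule weight update. The function names, stream length, and separate sign bit are hypothetical choices for illustration, not necessarily the scheme our lab uses.

```python
import random


def to_bitstream(p, length, rng):
    """Unipolar stochastic encoding: each bit is 1 with probability p (0 <= p <= 1)."""
    return [1 if rng.random() < p else 0 for _ in range(length)]


def sc_multiply(a, b, length=4096, seed=0):
    """Estimate a * b (both in [0, 1]) using one AND operation per bit pair."""
    rng = random.Random(seed)
    # The two streams must be uncorrelated; in hardware this would require separate
    # random number sources. Here both come from one PRNG drawn sequentially.
    sa = to_bitstream(a, length, rng)
    sb = to_bitstream(b, length, rng)
    ones = sum(x & y for x, y in zip(sa, sb))  # AND gate, then count the 1s
    return ones / length


def sc_delta_rule_update(w, x, err, lr=0.1, length=4096, seed=0):
    """Hypothetical delta-rule step w += lr * err * x with a stochastic magnitude product.

    Assumes x and err are already scaled into [-1, 1]; the sign is handled separately
    (equivalent to XOR-ing the two sign bits).
    """
    sign = -1.0 if (x < 0) != (err < 0) else 1.0
    return w + lr * sign * sc_multiply(abs(err), abs(x), length, seed)
```

Longer streams reduce the variance of the estimate at the cost of latency, which is the usual accuracy/latency trade-off in stochastic computing.
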
Trustworthy Neuromorphic Systems

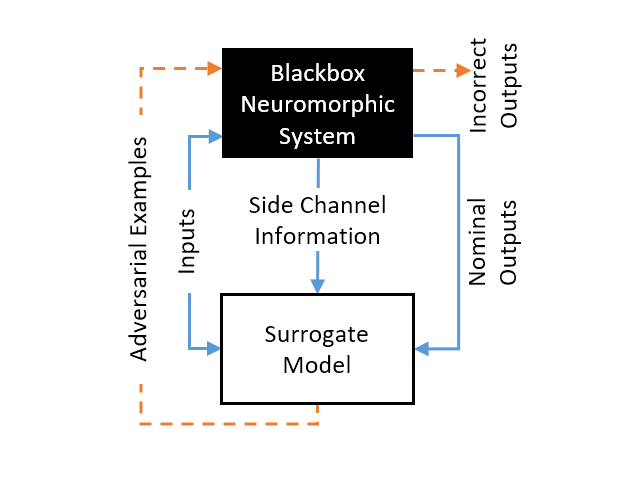

The recent artificial intelligence (AI) boom has created a growing market for AI systems in size, weight, and power (SWaP)-constrained application spaces such as wearables, mobile phones, robots, unmanned aerial vehicles (UAVs), and satellites. In response, companies like IBM, Intel, and Google, as well as a number of start-ups, universities, and government agencies, are developing custom AI hardware (neuromorphic computing systems) to enable AI at the edge. However, many questions about the security and safety vulnerabilities of these systems remain open. Since the early 2000s, a number of studies have shown that AI algorithms, and especially deep learning algorithms, are susceptible to adversarial attacks, in which a malicious actor causes high-confidence misclassifications of data. For example, an adversary may be able to hide a weapon from an AI system in plain sight simply by painting it a particular color. A number of defense strategies are being developed to improve the robustness of AI algorithms, but there has been very little work on the impact of hardware-specific characteristics (especially noise in the form of low precision, process variations, defects, etc.) on the adversarial robustness of neuromorphic computing platforms. To fill this gap, we are pursuing research on 1.) evaluating and modeling the effects of noise and hardware faults on the susceptibility of neuromorphic systems to adversarial attacks; 2.) designing adversarially robust training algorithms for neuromorphic systems; and 3.) developing novel adversarial attacks that leverage hardware-specific attributes of neuromorphic systems.
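
To make the threat model concrete, the sketch below implements the fast gradient sign method (FGSM), a standard attack that shifts every input feature by ±eps in the direction that increases the loss, against a toy logistic-regression classifier. The `quantize` helper is a crude, hypothetical stand-in for one hardware noise source (low-precision weights); running the same attack against `w` and `quantize(w, bits)` is one simple way to start probing how such noise changes adversarial susceptibility. It does not model process variations or defects, and it is not our evaluation framework.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(x, y, w, b, eps):
    """FGSM against logistic regression p = sigmoid(w . x + b) with label y in {0, 1}."""
    p = sigmoid(x @ w + b)
    # Gradient of binary cross-entropy w.r.t. the input: (p - y) * w for this model.
    grad_x = (p - y) * w
    return x + eps * np.sign(grad_x)


def quantize(w, bits):
    """Uniform symmetric quantization: a hypothetical model of low-precision weights."""
    scale = np.max(np.abs(w)) / (2 ** (bits - 1) - 1)
    if scale == 0:
        return w.copy()  # all-zero weights: nothing to quantize
    return np.round(w / scale) * scale
```

For example, with `w = [1, -2]`, `b = 0`, and `x = [0.5, 0.1]`, the model assigns class 1 with probability about 0.57; `fgsm(x, 1.0, w, b, eps=0.2)` moves the input to `[0.3, 0.3]`, where that probability drops to about 0.43 and the prediction flips.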