Notice

-

September 30, 2025

We are hiring Ph.D. students!

The Music and Audio Cognition Lab is currently seeking two motivated Ph.D. students to join our team beginning in Fall 2026. These positions offer an exciting opportunity to engage in cutting-edge research at the intersection of audio processing, language modeling, and neuroscience. The students will be part of a collaborative environment that encourages innovation and exploration in the field of audio cognition.

The selected Ph.D. candidates will focus on three main areas:

- Developing and implementing machine learning algorithms for speech signal separation, transcription, and clustering, as well as generating realistic immersive audio scenes for practical environments.

- Developing large language model (LLM)-based machine learning algorithms for applications across various scientific fields, with a particular focus on mapping relationships between audio, text, and EEG (electroencephalography) signals to advance our understanding of neural processing in response to language and sound.

- Analyzing and processing EEG (electroencephalography) data related to auditory stimuli, designing EEG experimental paradigms, and developing attention decoding algorithms for hearing-related applications, with a focus on understanding auditory perception and cognitive processing.

Ideal candidates will have a strong background in machine learning, specifically in audio and speech-related algorithms, as well as experience with large language models. We seek individuals who are passionate about exploring the connections between audio, language, and neural signals, and who are eager to contribute to pioneering research in the lab.

For more details and application instructions, please visit: rit.edu/computing/phd-computing-and-information-sciences

-

August 1, 2025

Principal Investigator Dr. Hwan Shim has been awarded an NIH R16 SuRE First grant

We are pleased to announce that Dr. Hwan Shim, Principal Investigator of the Music and Audio Cognition Laboratory (MACL), has been awarded the prestigious NIH R16 SuRE First grant.

The NIH R16 SuRE (Support for Research Excellence) First grant is designed to support outstanding early-stage investigators in their pursuit of high-quality basic, biomedical, or health-related research.

With the support of the R16 SuRE First grant, Dr. Shim’s laboratory will further investigate "Semantic-based auditory attention decoder using Large Language Model." This funding will provide critical resources for advancing the lab’s projects, supporting student researchers, and fostering a dynamic research environment.

We are also pleased to announce that the lab will be hiring Graduate Research Assistants and Undergraduate Student Workers to participate in this exciting project.

If you are interested in these research opportunities or have any questions, please contact Dr. Shim (hxsiee@rit.edu).

-

April 30, 2025

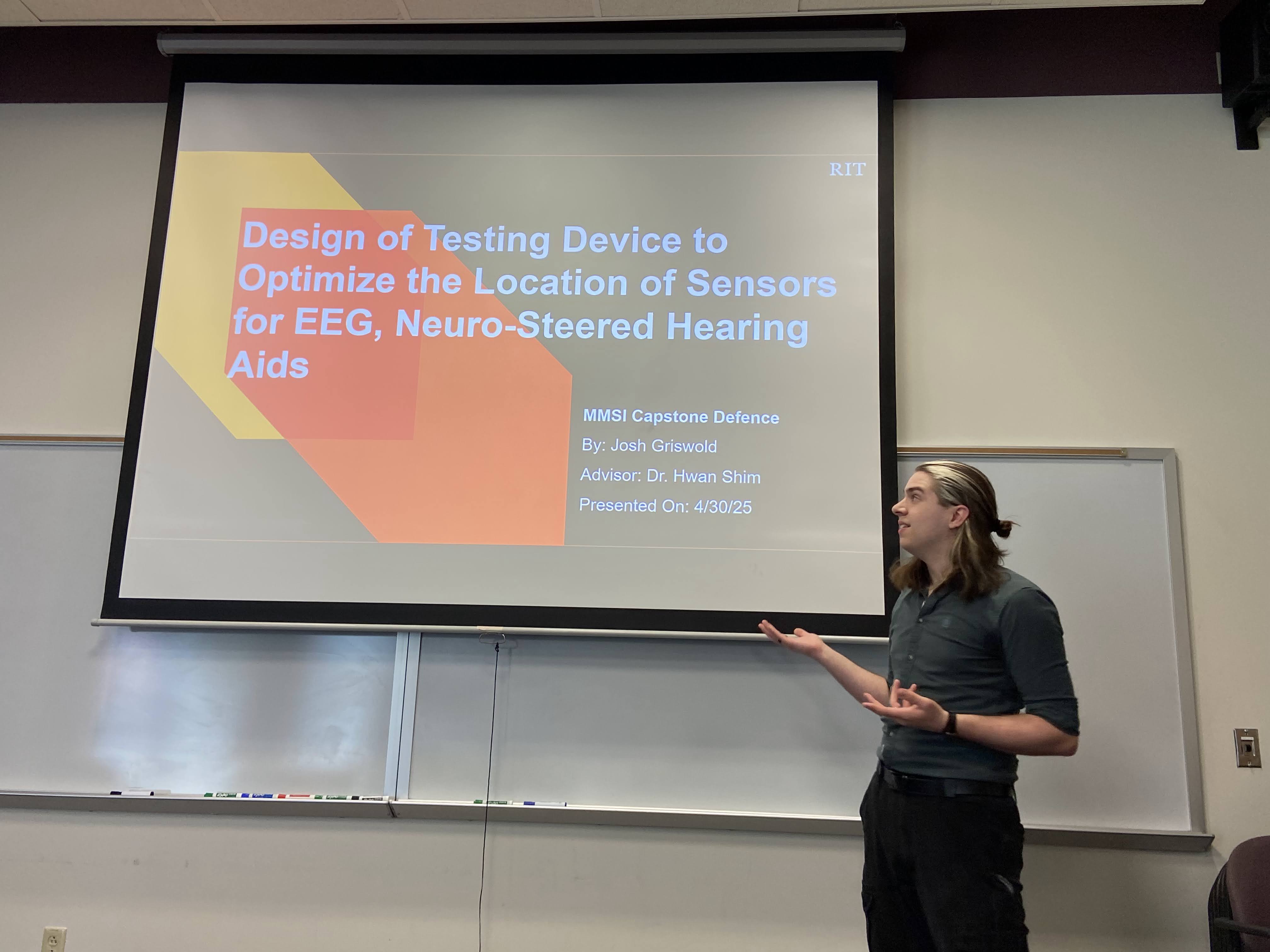

MS Student Josh Griswold Successfully Defends Capstone on Sensor Optimization for Neuro-Steered Hearing Aids

Josh Griswold, an MS student in the Manufacturing and Mechanical Systems Integration (MMSI) program, has successfully defended his capstone project titled “Design of Testing Device to Optimize the Location of Sensors for EEG, Neuro-Steered Hearing Aids.” Supervised by Dr. Hwan Shim, Josh’s work addresses a critical challenge in the development of next-generation hearing aids that use brain signals to selectively amplify sound.

His project focused on designing a wearable, eyeglass-based test device that enables precise adjustment and evaluation of EEG electrode and microphone placement. The goal: to optimize both electrical contact for brain signal detection and audio clarity, while ensuring comfort for about 80% of adult users.

The prototype demonstrated successful synchronized signal capture and provided valuable insights into sensor placement and device comfort. Josh’s work lays a strong foundation for future research on neuro-steered hearing aids, with all hardware and software to be further developed by subsequent students in the Music and Audio Cognition Laboratory.

Congratulations to Josh Griswold on this significant achievement and contribution to the field of assistive hearing technologies!

-

October 27, 2024

We are hiring a Ph.D. student!

The Music and Audio Cognition Lab is currently seeking a motivated Ph.D. student to join our team. This position offers an exciting opportunity to engage in cutting-edge research at the intersection of audio processing, language modeling, and neuroscience. The student will be part of a collaborative environment that encourages innovation and exploration in the field of audio cognition.

The selected Ph.D. candidate will focus on developing machine learning algorithms based on large language models (LLMs) with applications across various scientific domains. A particular area of interest will be mapping relationships from audio and text to EEG (electroencephalography) signals, which holds promise for advancing our understanding of neural processing in response to language and sound.

Ideal candidates will have a strong background in machine learning, specifically in audio and speech-related algorithms, as well as experience with large language models. We seek individuals who are passionate about exploring the connections between audio, language, and neural signals, and who are eager to contribute to pioneering research in the lab.

-

October 10, 2024

Poster presented at Society for Neuroscience (SfN)

The Society for Neuroscience (SfN) held its annual meeting, Neuroscience 2024, at the McCormick Place Convention Center in Chicago from October 5 to 9, 2024. This premier global neuroscience event brought together scientists from around the world to share cutting-edge research, discover new ideas, and advance the field of neuroscience.

The five-day conference featured a diverse program of lectures, symposia, and panel sessions covering a wide range of topics in neuroscience. Attendees had the opportunity to explore themes such as brain development, neurodegenerative disorders, motor and sensory systems, integrative physiology and behavior, cognition, and more. The meeting also included poster presentations, allowing researchers to showcase their latest findings and engage in discussions with colleagues.

At Neuroscience 2024, Dr. Hwan Shim made a significant contribution to a poster titled "Compact and Explainable Decoding of Auditory Selective Attention in Normal Hearing and Cochlear Implant Listeners" with collaborators at the University of Iowa and Carnegie Mellon University. The poster presentation, which took place in the exhibition halls of McCormick Place, attracted considerable attention from fellow neuroscientists and auditory researchers. Dr. Shim's work focused on developing novel methods for decoding auditory selective attention, with potential applications for both normal-hearing individuals and those with cochlear implants. The research highlighted innovative approaches to understanding how the brain processes competing auditory streams, offering insights that could lead to improvements in hearing aid and cochlear implant technologies.