Ongoing Research Projects

Characterizing the Nature of Visual Speech Processing in the Human Brain

NIH NIDCD R01DC016297 (PIs: Edmund Lalor and Matthew Dye)

We will more fully characterize visual speech processing in the human brain by studying deaf individuals who vary in their level of acoustic speech experience and, thus, in how they rely on and utilize visual speech. We will do this in two ways. First, we will record EEG from hearing and deaf individuals while they speechread silent videos. By modeling the EEG in terms of different visual speech features, we will test the hypothesis that successful speechreading is underpinned by a linguistic, complementary representation in visual cortex that is independent of auditory speech representations. Second, we will model EEG responses to audio, visual, and audiovisual speech in hearing individuals and in deaf users of cochlear implants. Although auditory speech processing can be successful in individuals with cochlear implants, the signal provided by the implant is highly degraded, and performance can vary greatly across individuals.

The ASL FEEL Corpus

NSF EAGER (PIs: Cissi Alm and Allison Fitch)

We will create an American Sign Language (ASL) corpus that includes ASL dialogues. The project will also systematize a reproducible annotation method, led by team members with experience in ASL and data annotation. Annotation quality will be refined through iterative evaluation using inter-annotator agreement measures that are common in corpus creation. At the end of the project, we will release the annotated ASL corpus for linguistic research. Anticipated outcomes include: (1) an ASL corpus that captures currently understudied characteristics of ASL, (2) a tested annotation method for representing these characteristics, (3) best-practice guidelines for continued use of the annotation method, and (4) dissemination of the research both in English-language manuscripts and in video-recorded ASL research products. Our diverse team of investigators will train deaf graduate and undergraduate research assistants in ASL corpus creation, annotation, and resource evaluation.

Deafness, Vocabulary Knowledge, and the Emergence of Reading Efficiency: Evidence from Eye-tracking

NIH NIDCD R21DC023278 (PI: Frances Cooley)

This project explores how vocabulary knowledge in both American Sign Language (ASL) and English contributes to reading comprehension and efficient reading behaviors in deaf/hard-of-hearing (DHH) children who are first-language users of ASL and second-language readers of written English. We use eye-tracking and cognitive testing to evaluate successful reading behaviors and to identify how knowledge of word meanings in both of deaf readers’ languages influences reading strategies and predicts the emergence of the skilled, efficient eye-movement behaviors during reading that have been observed in skilled deaf adult readers. Findings from this project will advance our understanding of reading development not only for DHH children but also for second-language readers of English and for readers for whom sound-letter correspondence does not provide a successful path to literacy. We hope these findings will guide curriculum improvements that support DHH students' unique learning needs.

Investigating Deaf and Hearing Children’s Exploratory Behavior at the Strong Museum of Play, in Rochester, New York

Funding Source: NIH R01DC016346 (PI: Matthew Dye)

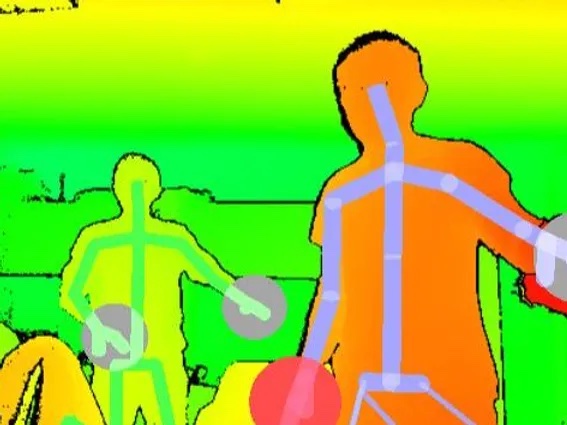

Neurocognitive Plasticity in Young Deaf Adults: Effects of Cochlear Implantation and Sign Language Experience

Funding Source: NSF BCS 1749376 (PI: Matthew Dye)

Multimethod Investigation of Articulatory and Perceptual Constraints on Natural Language Evolution