Research Project

Integrating computation into STEM education

Principal Investigator(s)

Ben Zwickl, Tony Wong (School of Mathematics and Statistics)

Research Team Members

Postdocs:

Christian Cammarota, Mike Foster

Grad students:

Mike Verostek

Undergraduates:

Andrea Camacho-Betancourt, Kimberly Dorsey, Kayleigh Patterson, Mikayla MacIntyre, Matt Dunham

Collaborators:

Tor Odden (University of Oslo)

Funding

Current Funding:

The Science and Mathematics Education Research Collaborative Postdoctoral Program, NSF Award 2222337, October 2022 - September 2025

Project Description

Impact

Computation is playing an increasing role in all STEM fields, and students’ ability to use and understand a range of computational tools is vital to their long-term success. It is not a question of if students will use computation after completing their undergraduate degree, but when. Computational thinking is becoming a necessary skill in both academia and industry, allowing people to solve problems through abstraction and procedural algorithms.

One way to evaluate how the various STEM fields think about computation and incorporate it into their curricula is through the lens of computational literacy. We hope to contribute to the study of computational literacy in STEM by probing the research and classroom practices of educators in various fields, as well as student retention.

Research questions

Related to computational literacy, we are interested in questions such as:

- What role do social elements of computational literacy play in the way STEM experts use computation in their research and teaching?

- How can we best design assessments of students’ computational literacy, and how do these differ across scientific disciplines?

- What are the similarities and differences in disciplinary computational literacy across the sciences, and how can undergraduate STEM curricula be structured to enhance this intersectionality?

Overview

Within Andrea diSessa’s theory of computational literacy (CL), three pillars of literacy build a platform for computational learning. Material Literacy describes the mechanics of what to do to produce a functionally correct computation. Cognitive Literacy describes why you use computation, with respect to understanding your problem in context. Social Literacy describes the form and communication strategies behind how you should employ computation. Odden, Lockwood, and Caballero further divide each pillar into three elements: practices, knowledge, and beliefs. This framework can help educators home in on the specific difficulties students might be having, so that they can build computational literacy more effectively. In our group, we have been using this framework to examine social aspects of computational literacy and to assess students’ learning.

Social aspect of computational literacy

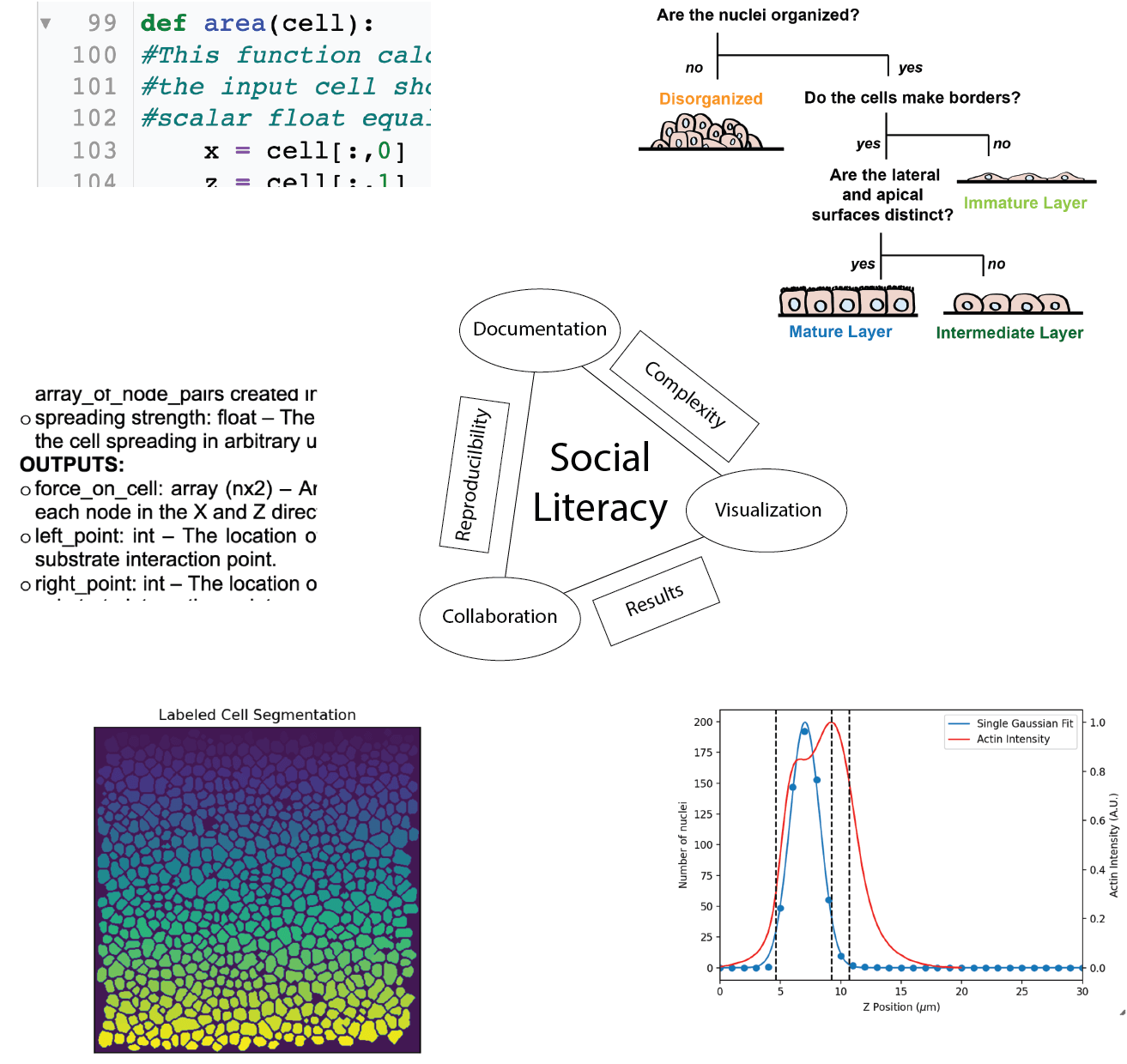

Examples of Social Computational Literacy within a physics research project. Comments, pseudocode, and visual representations of data are social aspects that are integral to the scientific and technical goals of the project.

For the Social pillar of computational literacy, direct interaction with a greater community is paramount. This form of literacy manifests in many ways: good documentation practices, clear organization schemes, and the ability to search for help are just some of the ways people can exercise Social Literacy. Our work goes beyond how Social Literacy is expressed by probing the motivations behind that expression. We have conducted interviews with experts across STEM fields to explore the role of Social Literacy in research and education, and to explore how instructors help their research associates and students become computationally literate.

To date, 19 interviews have been conducted with faculty across physics, biology, math, chemistry, and engineering. The interviews include discussions of the main types of computational activities that faculty do in their research or teaching, along with examples of computational artifacts they use and produce. The interviews are being analyzed for elements of social computational literacy, and the elements are being organized into an Activity System (Yamagata-Lynch, 2010). Some common Social elements of computation are documentation for yourself or your team, visualization of results to persuade others or influence further analyses, collaboration with large and small groups, and data practices related to metadata and storage. One of our goals is to understand how social elements are supported by various resources, tools, norms, communities, and practices. This feeds into a broader goal of identifying how social elements of computational literacy help experts and students achieve scientific research and educational objectives.

Assessment of computational literacy

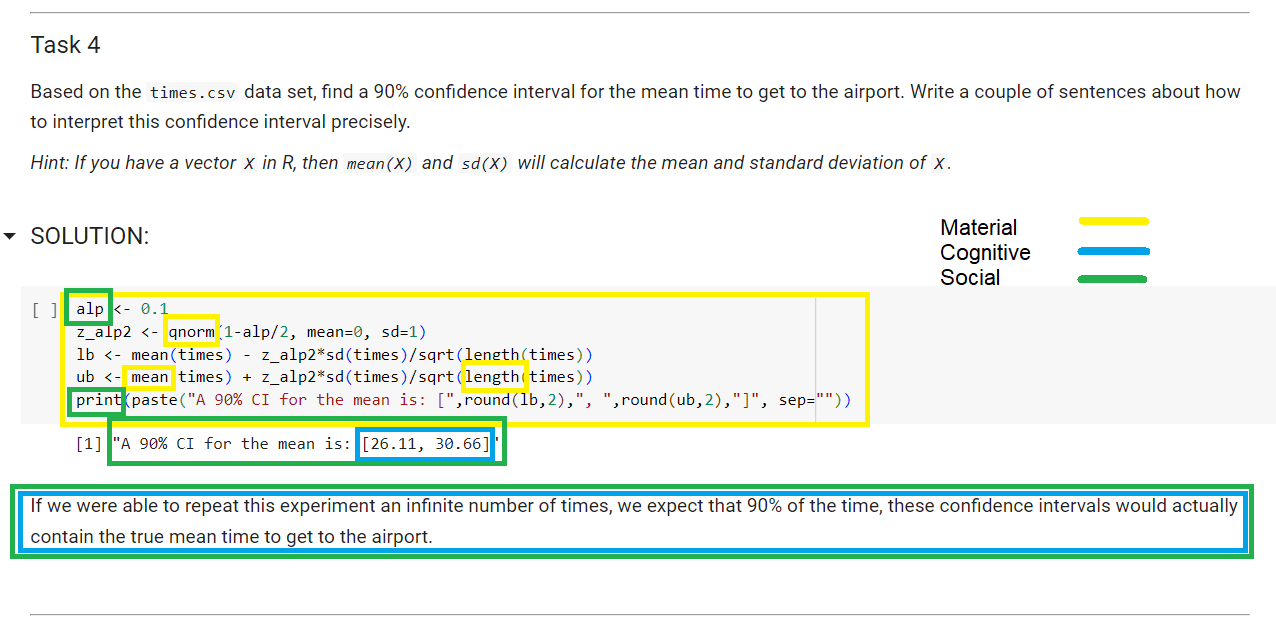

Example of a Jupyter notebook with elements of material, cognitive, and social computational literacy.

In our project on assessment of computational literacy, we are striving to develop a better understanding of how instructors can assess their students’ computational literacy. Building on the work of diSessa (2000) and Odden, Lockwood, and Caballero (2019), we treat computational literacy as a 3x3 framework. Following diSessa (2000), one axis consists of the three literacies that constitute computational literacy: (1) Material Literacy, (2) Cognitive Literacy, and (3) Social Literacy. Expanding upon this framework, Odden and colleagues added another axis with three elements that make up each of these literacies: (1) practices, (2) knowledge, and (3) beliefs. For example, this framework can be used to assess one’s social practices by looking at the ways in which one communicates the results of a computation (e.g., through formatting print statements) or explains their code (e.g., using comments). Similarly, this framework can be used to assess one’s cognitive practices by analyzing how the result of a computation is interpreted using domain-specific knowledge.
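To make the social and cognitive practices described above concrete, here is a minimal, hypothetical sketch of the kind of code a student might write in a notebook cell. It is an illustration only and is not drawn from the project's data; the free-fall problem and the names `G`, `fall_time`, and `report` are assumptions chosen for the example.

```python
import math

G = 9.81  # gravitational acceleration near Earth's surface, in m/s^2 (assumed value)


def fall_time(height_m: float) -> float:
    """Return the time in seconds for an object dropped from rest to fall height_m meters.

    Air resistance is ignored.
    """
    # Material practice: a functionally correct computation of t = sqrt(2h / g).
    return math.sqrt(2 * height_m / G)


def report(height_m: float) -> str:
    """Build a labeled result with units, so a reader does not need to read the code."""
    t = fall_time(height_m)
    # Social practice: a formatted message instead of a bare number.
    return f"Drop from {height_m:.1f} m takes {t:.2f} s (no air resistance)"


# Cognitive practice: interpret the result with domain knowledge.
# Because t scales with sqrt(h), quadrupling the height should double the fall time.
assert math.isclose(fall_time(80.0), 2 * fall_time(20.0))

print(report(20.0))
```

In this sketch, the docstrings, comments, and formatted output are candidate evidence of social practices, while the scaling check is candidate evidence of a cognitive practice. Which category a given artifact belongs to remains a judgment for the person applying the framework.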

Currently, we are collecting a sample of students’ coursework with computational tools (e.g., Jupyter Notebooks, Python scripts, Minitab) and are conducting and analyzing a series of interviews with those students. With these data, we are applying Odden and colleagues' framework to compare which categories of computational literacy can be identified from the students’ work and which categories emerge only through interviews with students. Our goal is then to use this work to refine the assessment of students’ work, to identify differences in the computational literacies emphasized by different computational tools, and to classify activities that can be used to advance students’ computational literacy.