Projects and Output

2026 Projects

Project 1: Energy-efficient Object Segmentation for a Sustainable Future

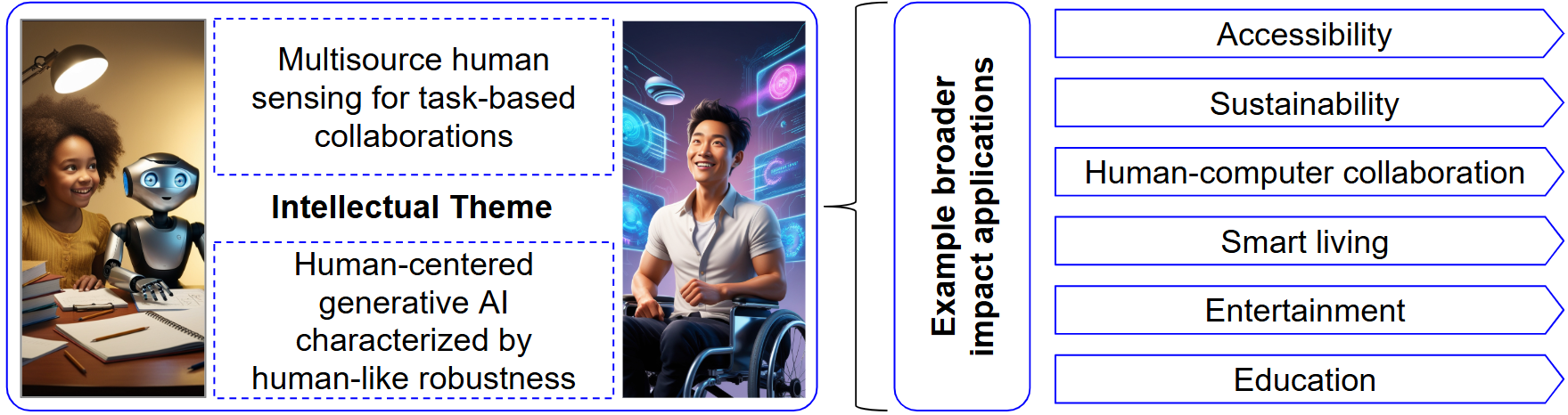

Current forms of artificial intelligence (AI), including generative AI, demand significant computational resources, which are often neither environmentally sustainable nor cost-effective. In contrast, the human brain exhibits remarkable energy efficiency, heavily utilizing recurrence, sparse patterns of activity, and specialized neurological pathways. In this project, we will investigate brain-inspired modifications of AI architectures in terms of constrained, sparse, temporal-oriented artificial neural networks, drawing insights from how the brain processes information. We will explore these concepts in the context of visual perception, particularly focusing on the development of more efficient and generative forms of scene segmentation and object recognition.

Mentors: Alexander Ororbia, Cecilia Alm, and Reynold Bailey

Project 2: Assistive Robots for Deaf/Hard of Hearing Individuals

Assistive agents for persons with disabilities are increasingly feasible. This project will leverage generative AI to maximize the individual's situational awareness with a robot companion that perceives and processes distinct modalities (e.g., environment video, audio, ultrasonic). Next, the robot decides how to interact with the Deaf user, e.g., it may physically fixate on an auditory stimulus, send a tactile alert to the user, or convey visual information to augmented reality headsets. Using reinforcement learning, the robot companion updates its decision-making based on past decisions' effectiveness and user preferences. The project will explore research questions such as "How can a mobile robot companion maximize Deaf user awareness using visual, auditory, and other modalities?" and "How can this framework operate effectively in office vs. manufacturing environments?", using simulated environments in our labs.

Mentors: Jamison Heard, Cecilia Alm, and Reynold Bailey

Project 3: LLM-based Language Tutors for Different Languages

Large Language Models (LLMs) can generate fluent feedback, scaffold writing, and simulate conversational partners with impressive realism. However, most existing educational applications built on these models focus heavily on English, leaving open the question of how well LLMs support learners of other languages. Their ability to handle culturally appropriate expressions, non-Latin writing systems, or pragmatically sensitive contexts remains underexplored. This project invites students to design and evaluate LLM-driven applications for learners of other languages. It specifically aims to explore whether LLMs effectively support non-English language learners and, if so, how to ensure cultural appropriateness and adaptive difficulty across different linguistic contexts.

Mentors: Hakyung Sung, Cecilia Alm, and Reynold Bailey

2022 Projects

Project 1: Sensing fatigue during video conferencing using physiological signals

Video conferencing using software tools such as Zoom has become a necessity in recent times due to the COVID-19 pandemic situation. While video conferencing is productive for many, research has shown that prolonged hours of video conferencing can lead to psychological effects such as fatigue, a.k.a. 'Zoom fatigue'. Thus, the goal of this project is to develop a Zoom fatigue sensing method that leverages physiological signals. Based on previous research, the project aims to explore eye tracking-based methods (sensing blink rates and speeds) and/or speech patterns (changes in voice energy, etc.) to detect Zoom fatigue of video conferencing participants.

Mentors: Roshan Peiris, Reynold Bailey, and Cecilia O. Alm

Project 2: User interface design and task-oriented speech recognition for gaze-voice enabled magnification for low vision users

Magnifying technologies allow people with low vision to read and access digital content. Prior work has shown that current eye-tracking technologies are generally inadequate for capturing the gaze of low-vision users. Gaze-voice enabled magnification using low-cost, built-in web cameras combined with intelligent voice interfaces offers a promising approach that may reduce the need for precise point-and-click interactions or expensive and complicated eye-tracking hardware. In this project, we aim to investigate task-oriented speech recognition and interface designs toward improving gaze-voice magnifying interfaces for low-vision users.

Mentors: Kristen Shinohara, Cecilia O. Alm, and Reynold Bailey

Project 3: Adaptive interaction for inclusive social robots

Social agents and robots are becoming more prevalent in everyday life, from Siri to autonomous robotic cleaners. However, the design of how these agents interact with humans has typically focused on the general population, and these interactions are sometimes ineffective for specific groups of users. An adaptive interaction framework may promote inclusiveness by detecting ineffective interactions, reasoning why an interaction failed, and adapting the framework’s interaction strategy appropriately. This project will rely on physiological signals and computer-vision techniques to detect when an interaction between an agent and a human is ineffective, including when this interaction is between an agent and someone who is Deaf or Hard of Hearing.

Mentors: Jamison Heard, Reynold Bailey, and Cecilia O. Alm

Project 4: Cognitive stress detection and classification through physiological signal processing

Everyone experiences cognitive stress from time to time when facing pressure (stressful events) in their daily life. While not all stress is bad, in most cases, stress may alter people’s behaviors and may carry physical and mental health risks. It is important to study cognitive stress and understand how it impacts our bodies. Stress often causes physiological responses that lead to changes in physiological signals, such as heart rate and electrodermal activity, that can be sensed non-invasively. Cognitive stress has different types according to sources of pressure, such as workload and attention. Previous research has shown that automatic mental stress detection can be achieved by tracking physiological signal patterns. However, it is still unclear if physiological signals can reflect different types of cognitive stress. In this project, through experiments, we will collect physiological signals under different cognitive stress conditions. Computational and machine learning methods will be applied to understand whether we can develop effective detectors that can distinguish different types of cognitive stress. The outcomes of this project may help future human-machine interaction applications to automatically track the interaction procedure and adjust interaction content, such as workload, task difficulty levels, and required response time.

Mentors: Zhi 'Jenny' Zheng, Reynold Bailey, and Cecilia O. Alm

Project 5: Neural task assistants for resolving confusion in multimodal, complex tasks

Multimodal language modeling remains a significant challenge to this day. Addressing some of the tasks involved would benefit language-driven dialogue systems that form the backbone of various applications on mobile devices and systems in the home. In this project, students will explore statistical learning techniques for dialogue that consider human data modalities, such as facial expressions, in the implementation of more human-like agents, including those that are capable of recognizing and resolving confusion between human interlocutors. Students will also evaluate the dialogue assistants in a user study.

Mentors: Alex Ororbia, Cecilia O. Alm, and Reynold Bailey

2021 Projects

Project 1: Emotional empathy and facial mimicry of avatar faces

Facial mimicry is the tendency to imitate the emotional facial expressions of others. It is a common trait that is naturally, and often unknowingly, exhibited by humans, particularly when observing expressions of happiness or anger. In this work, we will explore and model the extent to which the same type of empathetic reactions are elicited when viewing 3D avatar faces guided by motion-captured movements applied on the avatar face, compared to when viewing people's faces. It is hoped that our findings could lead to a new metric for evaluating the effectiveness of avatar facial models in conveying facial expressions, and improve understanding of reactions to avatars.

Mentors: Joe Geigel, Reynold Bailey, and Cecilia O. Alm

Project 2: Gaze-voice enabled magnification for low vision users

Magnifying technologies allow people with low vision to read and access digital content. Prior work has shown that current eye-tracking technologies are generally inadequate for capturing the gaze of low-vision users. Controlling the placement of software-driven magnification solutions by combining gaze estimation with intelligent voice interfaces offers a promising approach that may reduce the need for agile targeting devices such as a mouse, or expensive and complicated eye-tracking hardware. In this project, we investigate the needs of low-vision users and devise computational control strategies toward improving magnifying interfaces.

Mentors: Kristen Shinohara, Cecilia O. Alm, and Reynold Bailey

Project 3: Journalists versus algorithms: Readers’ perception of credibility

The use of computational methods to produce journalistic content is gaining popularity in newsrooms, making it harder for news readers to differentiate between content written by humans and machine-generated content. The availability of automated text-generation models has also increased public and media concerns about the misuse of technology to generate disinformation. We will use an online experiment and multimodal behavioral data (eye gaze and facial expression) to study native and non-native English and Spanish-speaking newsreaders’ reactions to and perceptions of machine-generated content versus stories written by journalists.

Mentors: Ammina Kothari, Reynold Bailey, and Cecilia O. Alm

Project 4: Social interaction information delivery through a humanoid robot

Humanoid robots have been increasingly applied as socially assistive robots (SAR), such as tutors, intervention facilitators, and companions. These tasks require social interaction between robots and users. Through subjective measurements, such as self-reports, surveys, and interviews, previous studies have indicated that social interaction information delivery is different between robots and humans. However, there is a lack of objective and quantitative evidence to identify when and how communicative features such as speech voice inflection (prosody), facial expressions, gaze, or body movements characterize these differences. In this project, through experiments, we will compare human reactions to social interaction with a humanoid robot vs. a human interlocutor. Computational human behavior sensing methods will detect subjects’ reactions to the social interaction prompts by a robot vs. a human. Statistical and pattern recognition methods will be used to analyze and model potential differences in reactions to help improve future SAR design for effective social interaction.

Mentors: Zhi 'Jenny' Zheng, Reynold Bailey, and Cecilia O. Alm

Project 5: Human-like intelligent cognitive agents

Language-based dialogue systems have become pervasive for daily activities, including on mobile devices and systems in the home. In this project, students will explore machine learning techniques for dialogue that consider human data modalities, such as facial expressions, towards the construction of more human-like agents, including those that are capable of recognizing and resolving confusion between human interlocutors.

Mentors: Alex Ororbia, Cecilia O. Alm, and Reynold Bailey

2019 Projects

Project 1: Measuring engagement in mixed reality applications

Mixed reality has increased in applications across education, entertainment, and manufacturing. We will study how multimodal data--skin response, pulse, facial expressions, eye gaze, speech, etc.--aids understanding of engagement levels in immersive environments with diverse users. We will seek to develop and evaluate a flexible metric of engagement for optimizing user experience in immersive applications.

Mentors: Joe Geigel, Reynold Bailey, and Cecilia O. Alm

Project 2: Eye-tracking magnification

Magnifying technologies allow people with low vision to read and access digital content. However, they also require agile use of targeting devices. We combine everyday eye-tracking technologies with speech capture to create as well as understand magnifying interfaces that follow the reader's gaze and do not require external pointing devices such as a mouse.

Mentors: Kristen Shinohara, Cecilia O. Alm, and Reynold Bailey

Project 3: Intelligent journalism

Automation and machine-generation of news content is changing newsrooms' practices, the news consumer's understanding of what journalism is, and how news is selected and presented. We will use multimodal behavioral data (gaze, facial expressions, biophysical signals) to study perception of machine-generated content versus stories written by journalists. Influences of credibility, objectivity, and political bias will be investigated.

Mentors: Ammina Kothari, Reynold Bailey, and Cecilia O. Alm

Project 4: Subtle gaze guidance for accessible captioning

Deaf or hard-of-hearing individuals may miss important visual content while reading the text captions on videos. We will build on team members' prior work on gaze-guidance and automatic-speech-recognition captioning to explore subtle gaze guidance strategies to draw attention to important regions of a video, with varying levels of caption text accuracy, and across four user groups: deaf/hard-of-hearing, non-native speakers, native speakers, and native speakers with autism spectrum disorder.

Mentors: Matt Huenerfauth, Reynold Bailey, and Cecilia O. Alm

Project 5: Augmented transfer learning for deep amusement

Positive experiences boost human well-being and productivity. We will attempt to explore innovative methods of achieving sensing-based inference of human amusement with deep learning and modest data. Using relevant base models and recent techniques in transfer learning and data augmentation, verbal and nonverbal sensing data elicited while diverse subjects view or read amusing content will be infused into systems to infer ranges of positive expressions and intensities of amusement.

Mentors: Raymond Ptucha and Cecilia O. Alm

2018 Projects

Project 1: Effects of immersive viewing of live performance in a virtual world

This project will build on our work in virtual theatre, which we define as shared, live performance, experienced in a virtual space, with participants contributing from different physical locales. In particular, we will explore how immersive viewing of a virtual performance (using a head mounted display such as an Oculus Rift) affects audience engagement compared to viewing the same performance in a non-immersive environment (such as on a screen or monitor). Galvanic skin response, facial expression capture, and possibly eye gaze will be used to gauge audience interest and engagement. In addition, speech capture (of audience descriptions and impressions of the performance) will enable emotional prosody analysis.

Mentors: Joe Geigel, Reynold Bailey, and Cecilia O. Alm

Project 2: Human-aware modeling of humor and amusement

Systematically characterizing the semantics of humor is subjective and difficult. For language technologies, both the interpretation of humor and the expression of amusement remain challenging. A particular caveat is that in machine learning modeling, gold standard data collection tends to rely on third-party annotators interpreting and rating linguistic examples. Rather, for humor, people’s spontaneous verbal and non-verbal reactions of joy and amusement may constitute more natural ground truth. It is important to better understand the relationship between traditional annotation and spontaneous measurement-based annotation, and how they impact machine modeling. This study will capture sensing data from observers (pulse, skin response, facial expressions, and spoken spontaneous reactions such as laughter, lip smacks, gasps, breathing patterns), as they view humorous and non-humorous language-inclusive video clips. The observers will also be asked to label the clips. We will statistically compare amusement signals captured spontaneously against task-based annotation, and assess the effect on machine modeling. We will also explore whether reactions to humor have a sustained effect on annotation of consecutive video clips.

Mentors: Ray Ptucha, Chris Homan, and Cecilia O. Alm

Project 3: Multi-modal sensing and quantification of atypical attention in autism spectrum disorder

While autism spectrum disorder (ASD) is primarily diagnosed through behavioral characteristics, behavioral studies alone are not sufficient to elucidate the mechanisms underpinning ASD-related behavioral difficulties. In previous research, behavioral difficulties associated with ASD have in part been attributed to atypical attention towards stimuli. Eye-tracking has been previously used to quantify atypical visual saliency in ASD during natural scene viewing. In this project, we will explore the potential of electroencephalography (EEG) in providing insight into the neurological correlates of information processing during natural scene viewing. We will integrate EEG and eye-tracking data in machine learning models, with the goal to uncover the attentional and neurological correlates of viewing performance between typical and ASD subjects. These findings may also provide potential markers for early diagnosis of ASD. In this REU project, together with the faculty mentors, student researchers will extend the current state-of-the-art, conduct experiments to collect necessary data, and utilize machine learning and statistical methods to analyze the collected data with a goal to uncover the mechanism underlying atypical attention in ASD.

Mentors: Linwei Wang, Hadi Hosseini, and Catherine Qi Zhao

Project 4: User intents in multi-occupancy, immersive smart spaces

Smart spaces are typically augmented with devices that are capable of sensing, processing, and actuating. For example, RIT SmartSuite is fitted with multiple sensors and actuators to make living spaces sustainable. These spaces are autonomous systems from which contextual information can be gathered, mined, and used to support autonomy while minimizing user intervention. However, sensing, modeling, and understanding user context such as intents accurately is difficult and often employs intrusion detection techniques. This project will (1) create an immersive representation of some smart spaces, such as the RIT SmartSuite, using augmented and/or virtual reality and supporting user participation through a head mounted display such as Oculus Rift, and (2) use eye gaze, motion, and facial expression capture to derive interactions between participants and objects. This project will test the effectiveness of visual cues and feedback techniques to improve virtual presence and participation in technology-rich smart spaces. Multi-modal sensor data will be analyzed with a goal to derive user intents.

Mentors: Peizhao Hu, Ammina Kothari, Joe Geigel, and Reynold Bailey

Project 5: Alignment of gaze and dialogue

Automated word alignment is a first step in traditional statistical machine translation. We have used alignment in a computational framework that establishes meaningful relationships between observers’ gaze and co-collected spoken language during descriptive image/scene inspection, for machine-annotation of image/scene regions. REU student researchers will extend the current framework to task-based dialogues with pairs of observers discussing visual content. We may also explore an alternative metric for assessing multimodal alignment quality when the alignment input consists of visual-linguistic data from more than one observer.

Mentors: Cecilia O. Alm, Preethi Vaidyanathan, Reynold Bailey, and Ernest Fokoué

2017 Projects

Project 1: Attention and behavior of students in online vs. face-to-face learning contexts

In the United States, online learning enrollments are on the rise and competing in growth with traditional course offerings. How is this shift in STEM educational delivery impacting attention, behavior, and performance of students? This project explores how online vs. face-to-face learning contexts influence students. We will use various sensing devices that capture facial expressions, skin response, etc. We will examine what happens when students are given performance incentives, and also consider the role of content difficulty. Project outcomes will contribute to improving individualized learning experiences.

Project 2: Learning and sensing of job-related narratives in social media

Employment and work circumstances have profound impacts on the well-being and prosperity of individuals and communities. Social media sites provide forums where individuals can divulge, tell stories about, and make sense of their experiences with employment. Students will build upon machine learning methods such as neural networks, social network analysis, and natural language processing techniques to study work-related discourse on social media. Deep architectures and recurrence have revolutionized the neural network community, and these techniques will be paired with semantic role labeling or frame semantics to efficiently extract information on employment and work from social media data. The project will explore quantifiable relationships between social media narratives and communities, and psychophysical signals such as reader skin response. These activities will contribute to our understanding of how people make sense of and react to job narratives.

Project 3: An interactive dermatological diagnosis system

This project will investigate models and algorithms to best leverage human expertise in building machine-learning systems. Active and interactive learning techniques will be explored where the former focuses on minimizing humans’ input to the system and the latter aims to elicit and monitor the most effective user feedback to improve the learning outcome. Students will research a number of interaction strategies between human users and a learning system and help enhance existing active and interactive learning models.

Project 4: Sensing in computer-based creative writing instruction

Creative writing is a prominent feature of higher education. It remains very tradition-bound: 1) often taught in a workshop setting as a highly-subjective process with evaluation using aesthetic principles centered on professorial expertise; and 2) focused on the creative work as a fixed artifact (e.g. the story or poem). The model of creative writing instruction influences other forms of writing pedagogy and the model of the work of the creative writer influences other forms of written work. Over recent decades, this model has been critiqued from several angles, with attention to collaborative or social approaches. At the same time, creative writing has changed as digital technologies become a tool and medium for the writer. What happens to the work and the model of instruction in environments increasingly characterized by developments in synthetic language, such as the Amazon Echo and artificial intelligence? The creative work becomes a transaction deeply tied to the sensations and interactions of listeners. Such technologies, which will play a growing role in our environments, allow us to explore new forms of creativity and response. This project will use sensing modalities (pulse or skin response or natural language processing) to understand response to these new creative forms, with implications for creative writing instruction and for a broad range of interactions with the Amazon Echo and similar technologies, as they play a growing role in our daily lives.

Project 5: Visual-linguistic alignment with static and dynamic imagery

In statistical machine translation, bilingual word alignment is a first step in translating text from one language to another. Such algorithms can also establish meaningful relationships between observers’ gaze data and their co-collected verbal descriptions, for machine-annotation of visual content. REU student researchers will extend the current visual-linguistic alignment framework to video, consider holistic and region-based annotation for visual content with positive or negative valence, and explore a novel approach to human assessment of alignment quality within the context of different applications.

2016 Projects

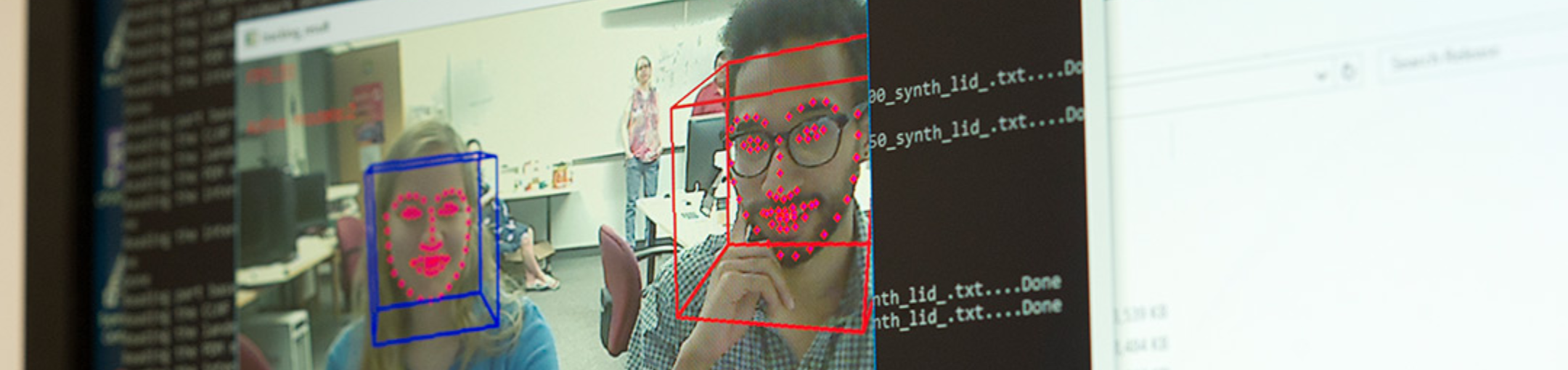

Project 1: Real-time attention and engagement monitoring

In recent work, we used a microphone to record speech and a camera to capture facial expressions of individuals engaged in computer-based tasks. The nature of the tasks was manipulated to induce moderate stress. We were later able to fuse both sources of data to reliably predict when the user was stressed. Students involved in this project will build on these ideas and develop techniques for real-time monitoring of user stress, attention, and cognitive load from multisource data towards monitoring in online learning systems.

Project 2: Macro-level understanding of interpersonal violence

Interpersonal violence (IPV) and abuse are prominent problems that impact individuals and macro-level community wellness. Our findings from large social media collections include that beliefs, values, social pressure, and fears play major roles in keeping people in abusive relationships. Semantic role labeling is a method that can help identify information. Our prior work also showed that such tools are not well tailored to this data. Students will focus on: (1) inventorying and modifying prior semantic role labeling tools to better extract information on IPV from social media data, and (2) identifying quantifiable relations between signals in social media narratives, geospatial information, and demographic data sources.

Project 3: Multimodal interface with adaptive user feedback

We will extend techniques to systematically fuse multimodal data, representing experts’ domain knowledge, to improve image understanding. Students will enhance a prototype multimodal interactive user interface with interactive machine learning, to effectively collect and incorporate user feedback to enhance the data fusion results.

Project 4: Storytelling Lab: Sensing and storytelling

Post-apocalyptic storytelling in computer games tends to heavily rely on conveying, and on users experiencing, haptic, visual, and aural cues of danger. Students will study how creative narrative and computational design capture, convey, and produce sensory experience. Students will participate in developing and implementing a game prototype of a post-apocalyptic version of Rochester, the city they are in, and study creative reactions in users.

Project 5: Visual-linguistic alignment and beyond

In statistical machine translation, bitext word alignment is the first step in translating text from one language to another. Alignment algorithms can establish meaningful relationships between observers’ gaze data and their co-collected verbal image descriptions, for image annotation or classification. REU student researchers will (1) extend the bi-modality alignment framework to new modalities, (2) automate transcription with speech recognition, and (3) explore alignment quality considering factors such as concept concreteness and specificity, image domain, and observer background knowledge.

Publications

- Isabelle Arthur, Jordan Quinn, Rajesh Titung, Cecilia O. Alm and Reynold Bailey. 2023. MDE – Multimodal data explorer for flexible visualization of multiple data streams. In Proceedings of the Affective Computing and Intelligent Interaction Conference: Demos, Cambridge, Massachusetts, pp. 1-3.

- A'di Dust, Carola Gonzalez-Lebron, Shannon Connell, Saurav Singh, Reynold Bailey, Cecilia O. Alm, and Jamison Heard. 2023. Understanding differences in human-robot teaming dynamics between deaf/hard of hearing and hearing individuals. In Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, pages 552–556.

- Ammina Kothari, Andrea Orama, Rachel Miller, Matthew Peeks, Reynold Bailey, and Cecilia O. Alm. 2023. News consumption helps readers identify model-generated news. In Proceedings of the 2023 IEEE Western New York Image and Signal Processing Workshop, Rochester, New York, pp. 1-10.

- Cecilia O. Alm, Rajesh Titung, and Reynold Bailey. 2023. Pandemic Impacts on Assessment of Undergraduate Research. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 2 (SIGCSE 2023). Association for Computing Machinery, New York, NY, USA, 1382.

- Camille Mince, Skye Rhomberg, Cecilia O. Alm, Reynold Bailey, and Alex Ororbia. Forthcoming. Multimodal modeling of task-mediated confusion. In Proceedings of the Student Research Workshop (at NAACL 2022).

- Trent Rabe, Anisa Callis, Zhi Zheng, Jamison Heard, Reynold Bailey, and Cecilia O. Alm. 2022. Theory of mind assessment with human-human and human-robot interactions. In: Kurosu, M. (eds) Human-Computer Interaction. Technological Innovation. HCII 2022. Lecture Notes in Computer Science, vol 13303. Springer, Cham.

- Angela Saquinaula, Adriel Juarez, Joe Geigel, Reynold Bailey, and Cecilia O. Alm. In press. Emotional empathy and facial mimicry of avatar faces. In Proceedings of the IEEE Conference on Virtual Reality + 3D User Interfaces. Extended poster abstract. Accepted.

- Cecilia O. Alm and Reynold Bailey. 2022. Scientific skills, identity, and career aspiration development from early research experiences in computer science. Journal of Computational Science Education, 13(1): 2-16.

- Cecilia O. Alm, Reynold Bailey, and Hannah Miller. 2022. Remote early research experiences for undergraduate students in computing. In Proceedings of the SIGCSE Technical Symposium 2022, 43-49, Providence, Rhode Island.

- Shubhra Tewari, Renos Zabonidis, Ammina Kothari, Reynold Bailey, and Cecilia O. Alm. 2021. Perceptions of human and machine generated articles. Journal of Digital Threats: Research and Practice, 2(2): 1-16.

- Cecilia O. Alm and Reynold Bailey. REU mentoring engagement: Contrasting perceptions of administrators and faculty. SIGCSE '21 (Poster).

- Cecilia O. Alm and Reynold Bailey. 2021. Transitioning from teaching to mentoring: Supporting students to adopt mentee roles. Journal for STEM Education Research, 4(1), 95-114.

- Natalie Maus, Dalton Rutledge, Sedeeq Al-Khazraji, Reynold Bailey, Cecilia O. Alm, and Kristen Shinohara. 2020. Gaze-guided magnification for individuals with vision impairments. In Proceedings of CHI 2020 (Late Breaking Work), pages 1-8, online.

- Cleo Forman, Pablo Thiel, Raymond Ptucha, Miguel Dominguez, and Cecilia O. Alm. 2020. Capturing laughter and smiles under genuine amusement vs. negative emotion. In Proceedings of the Workshop on Human-Centered Computational Sensing (at PerCom 2020), pages 1-6.

- Jonathan Kvist, Philip Ekholm, Preethi Vaidyanathan, Reynold Bailey, and Cecilia O. Alm. 2020. Dynamic visualization system for gaze and dialogue data. In Proceedings of the International Conference on Human Computer Interaction Theory and Applications (at VISIGRAPP 2020), pages 138-145.

- Victoria Kraj, Thomas Maranzatto, Joe Geigel, Reynold Bailey, and Cecilia O. Alm. 2020. Evaluating audience engagement of an immersive performance on a virtual stage. In Frameless, vol. 2(1), article 4, pages 1-15.

- Jessica Li, Matt Luettgen, Matt Huenerfauth, Sedeeq Al-Khazraji, Reynold Bailey, and Cecilia O. Alm. 2020. Gaze guidance for captioned videos for DHH users. Journal on Technology and Persons with Disabilities, vol. 8, pages 69-81.

- Regina Wang, Bradley Olson, Preethi Vaidyanathan, Reynold Bailey, and Cecilia O. Alm. 2019. Fusing dialogue and gaze from discussions of 2D and 3D scenes. In Adjunct of the 21st ACM International Conference on Multimodal Interaction, pages 1:1-6.

- Monali Saraf, Tyrell Roberts, Raymond Ptucha, Christopher Homan, and Cecilia O. Alm. 2019. Multimodal anticipated versus actual perceptual reactions. In Adjunct of the 21st ACM International Conference on Multimodal Interaction, pages 2:1-5.

- Gustaf Bohlin, Kristoffer Linderman, Cecilia O. Alm, and Reynold Bailey. 2019. Considerations for face-based data estimates: Affect reactions to videos. In Proceedings of the International Conference on Human Computer Interaction Theory and Applications (at VISIGRAPP 2019), pages 188-194.

- Kelsey Rook, Brendan Witt, Reynold Bailey, Joe Geigel, Peizhao Hu, and Ammina Kothari. 2019. A study of user intent in immersive smart spaces. In Proceedings of the Workshop on Pervasive Smart Living Spaces (at PerCom 2019).

- McKenna K. Tornblad, Luke Lapresi, Christopher M. Homan, Raymond W. Ptucha, and Cecilia O. Alm. 2018. Sensing and learning human annotators engaged in narrative sensemaking. In Proceedings of the Student Research Workshop (at NAACL 2018), pages 136-143.

- Rebecca Medina, Daniel Carpenter, Joe Geigel, Reynold Bailey, Linwei Wang, and Cecilia O. Alm. 2018. Sensing behaviors of students in online vs. face-to-face lecturing contexts. In Proceedings of the Workshop on Human-Centered Computational Sensing (at PerCom 2018).

- Nse Obot, Laura O'Malley, Ifeoma Nwogu, Qi Yu, Wei Shi Shi, and Xuan Guo. 2018. From novice to expert narratives of dermatological disease. In Proceedings of the Workshop on Human-Centered Computational Sensing (at PerCom 2018).

- Yancarlos Diaz, Cecilia O. Alm, Ifeoma Nwogu, and Reynold Bailey. 2018. Towards an affective video recommendation system. In Proceedings of the Workshop on Human-Centered Computational Sensing (at PerCom 2018).

- Nikita Haduong, David Nester, Preethi Vaidyanathan, Emily Prud'hommeaux, Reynold Bailey, and Cecilia O. Alm. 2018. Multimodal alignment for affective content. In Proceedings of the Workshop on Affective Content Analysis (at AAAI 2018), pages 21-28.

- Cecilia O. Alm and Reynold Bailey. 2017. Team-based, transdisciplinary, and inclusive practices for undergraduate research. In Proceedings of IEEE 47th Annual Frontiers in Education (FIE) Conference.

- Alexander Calderwood, Elizabeth A. Pruett, Raymond Ptucha, Christopher M. Homan, and Cecilia O. Alm. 2017. Understanding the semantics of narratives of interpersonal violence through reader annotations and physiological reactions. In Proceedings of the Workshop on Computational Semantics beyond Events and Roles (at EACL 2017), pages 1-9.

- Ashley Edwards, Anthony Massicci, Srinivas Sridharan, Joe Geigel, Linwei Wang, Reynold Bailey, and Cecilia O. Alm. 2017. Sensor-based methodological observations for studying online learning. In Proceedings of the ACM Workshop on Intelligent Interfaces for Ubiquitous and Smart Learning (at IUI 2017), pages 25-30.

- Aliya Gangji, Trevor Walden, Preethi Vaidyanathan, Emily Prud'hommeaux, Reynold Bailey, and Cecilia O. Alm. 2017. Using co-captured face, gaze and verbal reactions to images of varying emotional content for analysis and semantic alignment. In Proceedings of the Workshop on Human-Aware Artificial Intelligence (at AAAI 2017), pages 621-627.

News and Media

- RIT News (June, 2019): RIT Hosts REU Graduate Study and Research Symposium on June 28.

- RIT News (May, 2019): RIT Research Helps Artificial Intelligence be More Accurate.

- RIT News (July, 2018): RIT Evolves Partnership with Malmo University through Undergraduate Research Experiences: Undergraduates from Malmo are Spending the Summer at RIT Researching Computational Sensing.

- RIT News (June, 2018): RIT is one of the Nation's Top Universities for NSF Undergraduate Research Programs: Undergraduate Students from across the Nation come to RIT for Summer of Research.

- RIT News (June, 2018): Mapping Artificial Intelligence at RIT.

- Research at RIT - Research Magazine (Fall/Winter, 2017): Undergraduates Team up with RIT Faculty on Computational Sensing Research.

- REU Program - Video (October, 2017): RIT Program in Computational Sensing.

- RIT News - Campus Spotlight (June 30, 2017): The 2017 REU Graduate Study and Research Symposium.

- RIT News - Video (August 26, 2016): NSF Research Experiences for Undergraduates at RIT.

- RIT News - Athenaeum (August 17, 2016): National Program Brings Together Undergrads to Experience Work as Researchers.

- CoLA Connections Newsletter (Summer, 2016): Undergraduate Research in Computational Sensing Connects Computing and the Human Experience.

Outreach Exhibits and Broader Events

- REU Graduate Study and Research Symposium 2022 - Program. June, 2022. Rochester Institute of Technology Campus, Rochester, NY.

- REU Graduate Study and Research Symposium 2021 - Program. July, 2021. Online.

- RIT tech expo. July, 2019. Greece Public Library, Rochester, NY.

- Research Experiences for Undergraduates (REU): Computational Sensing for Human-centered AI. April, 2019. Imagine RIT Innovation & Creativity Festival, Rochester, NY.

- Cool tech gadgets - RIT tech expo - Computational sensing. July, 2018. Greece Public Library, Rochester, NY.

- REU Graduate Study and Research Symposium 2018 - Program. June, 2018. Rochester Institute of Technology Campus, Rochester, NY.

- Strategies for successful undergraduate research: An interdisciplinary roundtable. December, 2017. The Stan McKenzie Salon Series (roundtable panel participation), Rochester, NY.

- Cool tech gadgets - RIT tech expo - Computational sensing. July, 2017. Greece Public Library, Rochester, NY.

- REU Graduate Study and Research Symposium 2017 - Program. June, 2017. Rochester Institute of Technology Campus, Rochester, NY.

- Undergraduate research in computational sensing. May, 2017. Imagine RIT Innovation & Creativity Festival, Rochester, NY.

- Undergraduate research in computational sensing. May, 2016. Imagine RIT Innovation & Creativity Festival, Rochester, NY.

REU Site: Computational Sensing for Human-centered AI

(2016-2018, 2019-2024, 2025-2028)

Rochester Institute of Technology

This material is based upon work supported by the National Science Foundation under Award Nos. IIS-1559889, IIS-1851591, and CCF-2445028.

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.