RIT researchers developing ways to use hyperspectral data for vehicle and pedestrian tracking

Associate Professor Matthew Hoffman secures grant from Air Force Office of Scientific Research

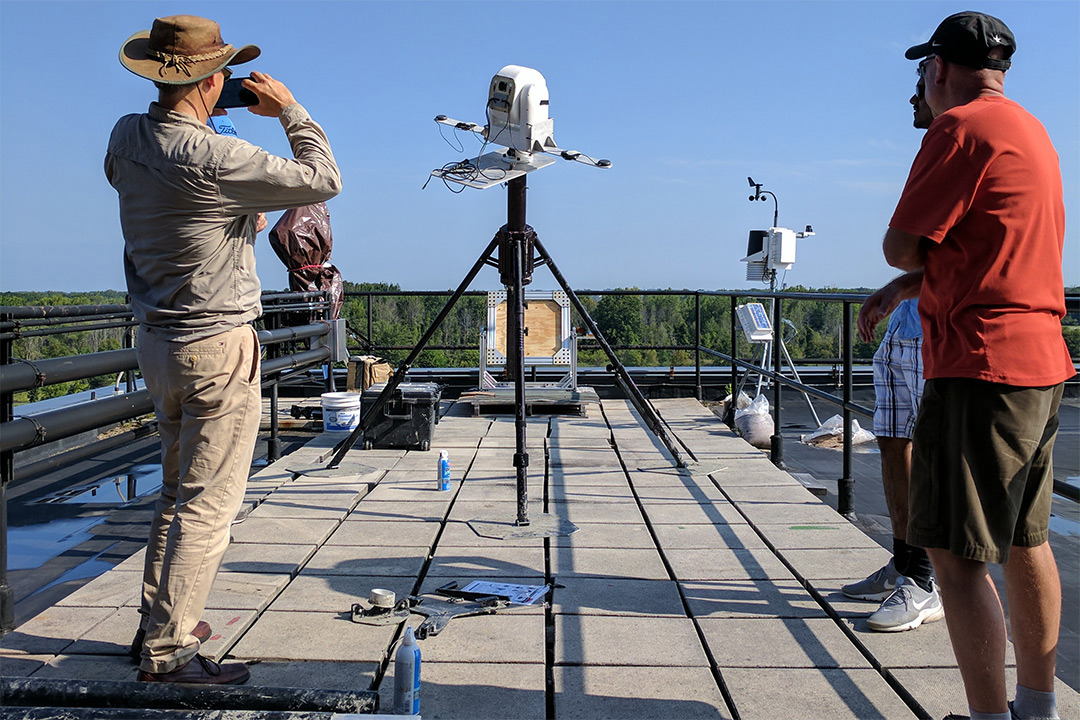

The Air Force Office of Scientific Research (AFOSR) funded a Rochester Institute of Technology project to utilize hyperspectral video imaging systems for vehicle and pedestrian tracking. The project will use the hyperspectral video system shown above, which was developed by Associate Professor and Frederick and Anna B. Wiedman Chair Charles Bachmann, left.

A classic scenario plays out in action films ranging from Baby Driver to The Italian Job: criminals evade aerial pursuit from the authorities by seamlessly blending in with other vehicles and their surroundings. The Air Force Office of Scientific Research (AFOSR) is funding Rochester Institute of Technology researchers to use hyperspectral video imaging systems to make sure that does not happen in real life.

While the human eye is limited to seeing light in three bands (perceived as red, green and blue), hyperspectral imaging captures bands across the electromagnetic spectrum far beyond what the eye can detect. This high-resolution color information can help us better identify individual objects from afar. The AFOSR awarded a team of researchers led by principal investigator Matthew Hoffman, an associate professor and director of the applied and computational mathematics MS program, a nearly $600,000 grant to explore whether hyperspectral imaging systems can track vehicles and pedestrians better than current methods.

“It is very challenging to track vehicles from an aerial platform through cluttered environments because you cannot really see a vehicle’s shape as well, and a lot of machine learning computer algorithms are based on shapes,” said Hoffman. “Buildings, trees, other cars, and a lot of things can potentially confuse the system. As hyperspectral video technology has improved, we believe we can use color information to more persistently track targets.”

The project will require a multidisciplinary approach, and Hoffman will work closely with researchers from RIT’s Chester F. Carlson Center for Imaging Science. Co-PIs include Professor Anthony Vodacek, Distinguished Researcher Donald McKeown and Assistant Professor Christopher Kanan. The project will use a hyperspectral video system developed by Associate Professor and Frederick and Anna B. Wiedman Chair Charles Bachmann. Senior Research Scientist Adam Goodenough and several Ph.D. students will also collaborate on the project.

The challenge with using hyperspectral imaging is that it produces massive amounts of data that can’t all be processed at once, so Hoffman and his team are also tasked with creating a process to efficiently use the information on demand. The team will use the Digital Imaging and Remote Sensing Image Generation (DIRSIG) model, developed by RIT’s Digital Imaging and Remote Sensing Laboratory, to develop a new dynamic, online scene-building capability that helps re-track targets after they have passed behind obstacles. Hoffman said he is excited by the prospect of creating such a unique product.

“This would be a dataset that just doesn’t exist today, so it would be a really novel solution,” he said.

The three-year project officially got underway in December. The goal this year is to develop the system’s infrastructure, and the team hopes to mount the system on a plane at the start of year two to begin testing.

This material is based upon work supported by the Air Force Office of Scientific Research under award number FA9550-19-1-0021. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the United States Air Force.