DIRSIG 5 Development

Principal Investigator(s)

Research Team Members

Adam Goodenough

Byron Eng

Michael Saunders

Jeffrey Dank

Serena Flint

Jacob Irizarry

Project Description

DIRSIG development focused heavily on the user experience this past year. With the help of both internal and external collaborators, we made many quality-of-life and efficiency improvements to the graphical tools as well as to the input files.

The image viewer program introduced last year received many quality-of-life improvements and new features. One new feature makes pixels with values of zero or NaN ('not a number') easy to spot by displaying them in a customizable color. Another is a tool that lets the user quickly view image statistics. Many other improvements to the image viewer this year made viewing and debugging DIRSIG output files easier and more efficient.
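To illustrate the zero/NaN highlighting and statistics features described above, the following Python sketch shows one way such a display could be built with NumPy. It is not the viewer's implementation: the function names `highlight_zero_nan` and `image_stats`, the default highlight colors, and the linear min/max display stretch are all hypothetical choices made for this example.

```python
import numpy as np


def highlight_zero_nan(image, zero_color=(255, 0, 255), nan_color=(0, 255, 0)):
    """Hypothetical helper: render a single-band image as 8-bit RGB, painting
    zero-valued and NaN pixels with user-chosen colors."""
    image = np.asarray(image, dtype=float)
    valid = np.isfinite(image)  # NaN and +/-inf are excluded from the stretch
    gray = np.zeros(image.shape)
    if valid.any():
        lo, hi = image[valid].min(), image[valid].max()
        scale = 255.0 / (hi - lo) if hi > lo else 0.0
        # Linear min/max stretch of the finite pixels to 0..255.
        gray[valid] = (image[valid] - lo) * scale
    # Replicate the gray level into the three color channels.
    rgb = np.repeat(np.clip(gray, 0, 255).astype(np.uint8)[..., None], 3, axis=2)
    # Overlay the highlight colors last so they are always visible.
    rgb[image == 0.0] = zero_color
    rgb[np.isnan(image)] = nan_color
    return rgb


def image_stats(image):
    """Hypothetical helper: quick summary statistics that ignore NaN pixels."""
    image = np.asarray(image, dtype=float)
    return {
        "min": float(np.nanmin(image)),
        "max": float(np.nanmax(image)),
        "mean": float(np.nanmean(image)),
        "std": float(np.nanstd(image)),
        "nan_count": int(np.isnan(image).sum()),
        "zero_count": int((image == 0.0).sum()),
    }
```

Painting the highlight colors after the stretch keeps them visible regardless of the image's dynamic range; zero is still treated as a valid value when computing the stretch and the statistics.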

DIRSIG scene representation and the scene construction process have been a focus for a few years now. This year we made substantial improvements to how geometry is included and manipulated in these scenes. We added support for additional common geometry file formats, added the option to attribute materials individually to each instance of an object (previously all instances carried the same material attribution), and added UV mapping to DIRSIG's mathematical shapes ('primitive objects'). We also now support a new binary file format for geometry location information, which is much more efficient when a scene includes a large number of instances.

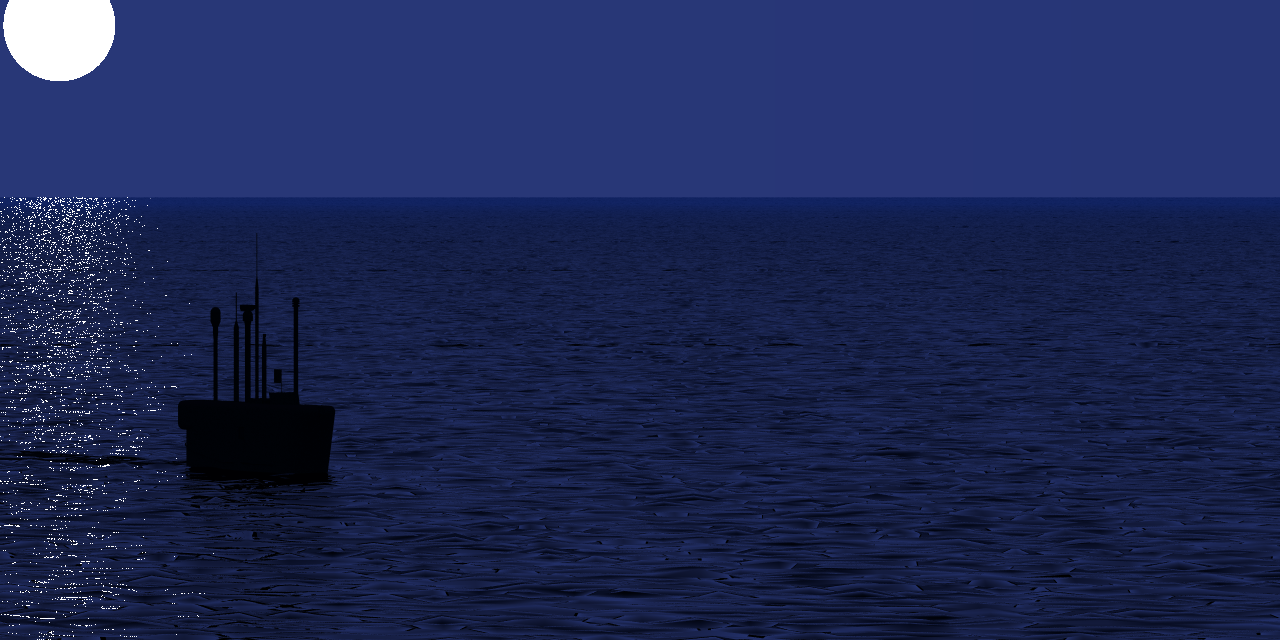

Figure 1: Nighttime DIRSIG simulation of a submerged submarine. Glints from the moon disk can be seen on the water surface.

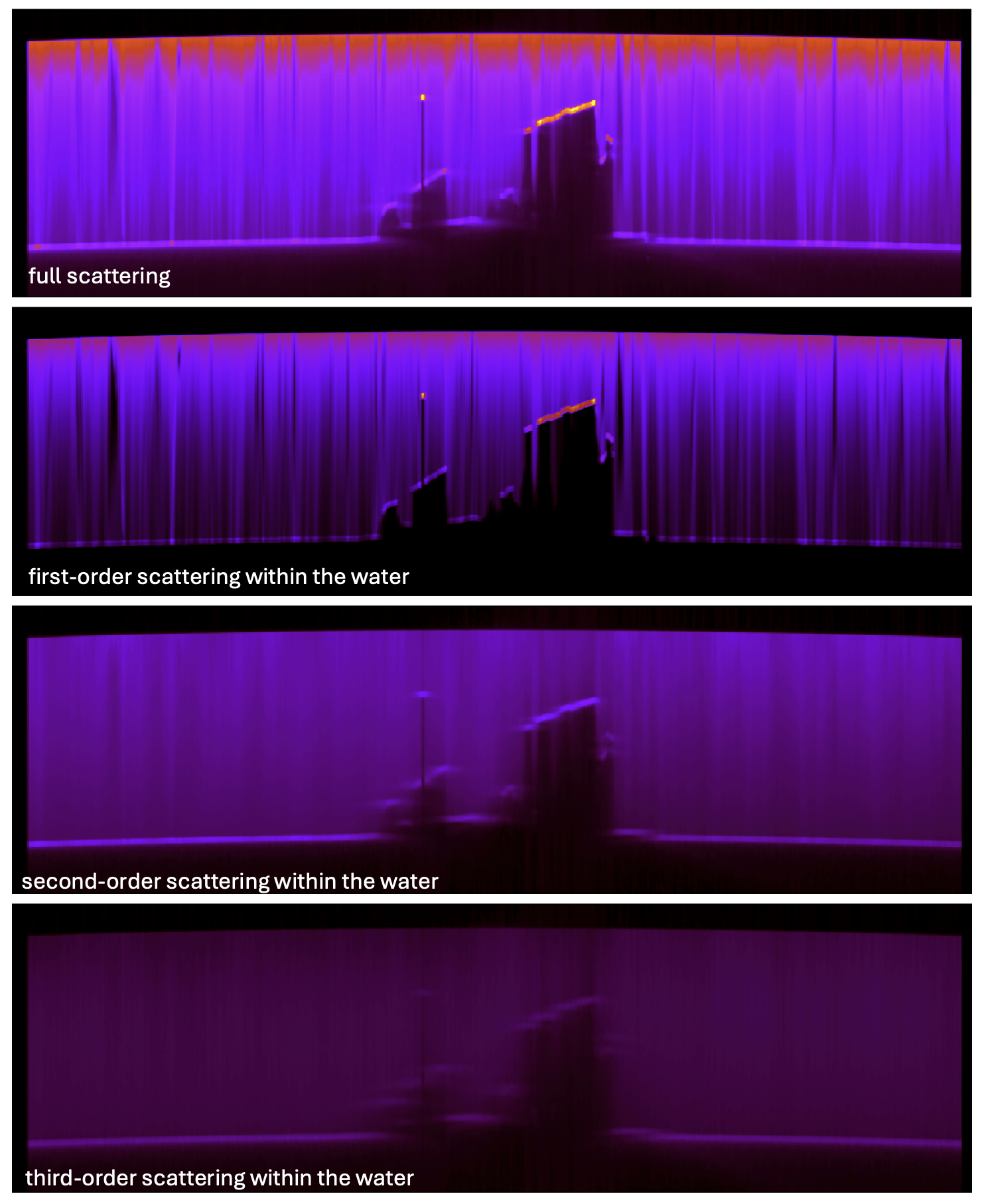

Figure 2: DIRSIG simulation of a Streak Tube Imaging LIDAR (STIL) system. A shipwreck is observed under a water surface. A detailed view of the 3D model is shown on the left. On the right side, the full scattering signal is shown in the top image. The following three images show the contributions from first-order, second-order, and third-order scattering events, respectively.

The DIRSIG LightCurve plugin was introduced over a year ago to simplify the collection of magnitude vs. time 'light curves' of exo-atmospheric objects traversing the sky. These 1D light curves are sometimes used as a characteristic signature of a specific space-based object. An increasingly common variant of this 1D characterization is the 'spectral waterfall', a 2D data product that captures spectral magnitude vs. time, typically with the spectral data mapped to the horizontal dimension and time mapped to the vertical dimension. To better support spectral waterfalls and other non-traditional light curve products, the LightCurve plugin was improved to export a spatial-spectral data cube for each time step in the collection. The traditional light curve is produced by integrating these underlying data spatially and spectrally, so giving users direct access to the cubes lets them develop their own non-traditional products.

The results below come from a newly introduced variant of the LightCurve1 demo, in which a simple box-shaped object with a different color of paint on each side tumbles in a LEO orbit. Depending on the time (and, hence, the position) of the object in its orbit, a different colored side faces the ground-based sensor tracking it from horizon to horizon. The new spectral data cube output is then processed with the Python script provided with the demo, which integrates each 3D spatial-spectral data cube over its two spatial dimensions to produce a 1D spectrum. These spectra are then appended to an image as rows to produce the spectral-temporal waterfall image shown below.

Figure 3: Spectral waterfall image produced by the Python script included in the demo.
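The waterfall processing described above can be sketched in a few lines of NumPy. This is not the script shipped with the demo: the function names are hypothetical, each cube is assumed to be a `(rows, cols, bands)` array already loaded into memory (reading the plugin's output files is not shown), and spectral integration is approximated by a plain sum, which assumes equal band widths. The light curve values here are integrated signal rather than astronomical magnitudes; converting to magnitudes would require a reference flux.

```python
import numpy as np


def spectral_waterfall(cubes):
    """Hypothetical helper: build a spectral-temporal waterfall image.

    `cubes` is a sequence of per-time-step arrays assumed to be shaped
    (rows, cols, bands). The result is shaped (time_steps, bands): time runs
    down the rows and the spectral bands run across the columns.
    """
    rows = []
    for cube in cubes:
        cube = np.asarray(cube, dtype=float)
        # Integrate over the two spatial axes, leaving one spectrum per step.
        rows.append(cube.sum(axis=(0, 1)))
    # Append each spectrum as a new row of the waterfall image.
    return np.vstack(rows)


def light_curve(cubes):
    """Hypothetical helper: the traditional 1D light curve, integrating each
    cube over both spatial axes and all bands (a plain sum assumes equal band
    widths; no conversion to astronomical magnitude is applied)."""
    return np.array([np.asarray(cube, dtype=float).sum() for cube in cubes])
```

Summing each waterfall row over its bands reproduces the light curve value for that time step, which makes a quick consistency check on the exported cubes.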

This year we began a long-term, ongoing effort to audit and revitalize our existing scenes. This effort will phase out old input files in favor of files that follow current standards and best practices. The revitalized scenes will be migrated to a public-facing version control system so that everyone can easily obtain and update their local copies.