Estimation of Beet Yield and Leaf Spot Disease Detection Using Unmanned Aerial Systems (UAS) Sensing

Principal Investigator(s)

Jan van Aardt

Sarah Pethybridge (Cornell U.)

Research Team Members

Mohammad Shahriar Saif

Rob Chancia

Timothy Bauch

Nina Raqueno

Imergen Rosario (Alumnus)

Pratibha Sharma (Cornell U.)

Sean Patrick Murphy (Cornell U.)

Project Description

The Rochester, NY region supports a growing economy of table beet producers, led by Love Beets USA, which operates local production facilities for organic beet products. In collaboration with the Rochester Institute of Technology’s Digital Imaging and Remote Sensing (DIRS) group and Cornell AgriTech, this project explores the use of unmanned aerial systems (UAS) remote sensing to improve beet crop management through yield estimation and plant population assessment. In parallel, we are investigating UAS-based tools for monitoring Cercospora Leaf Spot (CLS), a major foliar disease in table beets. Across the 2021–2023 growing seasons, Cornell pathologists visually assessed CLS severity at multiple growth stages, and end-of-season yield parameters (root weight, root count, and foliage biomass) were collected to develop models based on UAS-derived spectral and structural features. The overarching aim is to provide actionable insights that help farmers make more efficient and sustainable crop management decisions.

Building on earlier efforts, including Rob Chancia’s 2021 Remote Sensing publication on models developed from 2018–2019 imagery [1], the project expanded to include 13 UAS flights in 2021 and 2022. These missions captured hyperspectral (400–2500 nm), multispectral, and LiDAR data across a range of beet growth stages. The resulting models identified key wavelength indices linked to beet yield; preliminary findings from 2021 were published in the DIRS Annual Report (2023).
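The specific wavelength indices are not named here. As a hedged illustration only, the sketch below screens normalized-difference indices, (R_long − R_short) / (R_long + R_short), over every pair of bands in a plot-mean spectrum, the same form used by familiar indices such as NDVI. The function names and reflectance values are hypothetical and are not the project's data or method.

```python
from __future__ import annotations

from itertools import combinations


def normalized_difference(r_long: float, r_short: float) -> float:
    """Normalized difference of two reflectances: (long - short) / (long + short)."""
    denom = r_long + r_short
    if denom == 0:
        return 0.0  # avoid division by zero for dark or masked plots
    return (r_long - r_short) / denom


def all_band_pair_indices(spectrum: dict[int, float]) -> dict[tuple[int, int], float]:
    """Compute a normalized-difference index for every pair of bands.

    `spectrum` maps band centre wavelength (nm) to mean plot reflectance.
    Keys of the result are (shorter_nm, longer_nm). Exhaustive pair screening
    is an illustrative assumption, not necessarily the project's approach.
    """
    bands = sorted(spectrum)
    return {
        (a, b): normalized_difference(spectrum[b], spectrum[a])
        for a, b in combinations(bands, 2)
    }


# Hypothetical plot spectrum: green (550 nm), red (670 nm), near-infrared (800 nm).
plot = {550: 0.08, 670: 0.05, 800: 0.45}
indices = all_band_pair_indices(plot)
print(round(indices[(670, 800)], 3))  # the red/NIR pair is NDVI
```

In practice each candidate index would be correlated with measured root yield across plots, and the strongest pairs retained as features.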

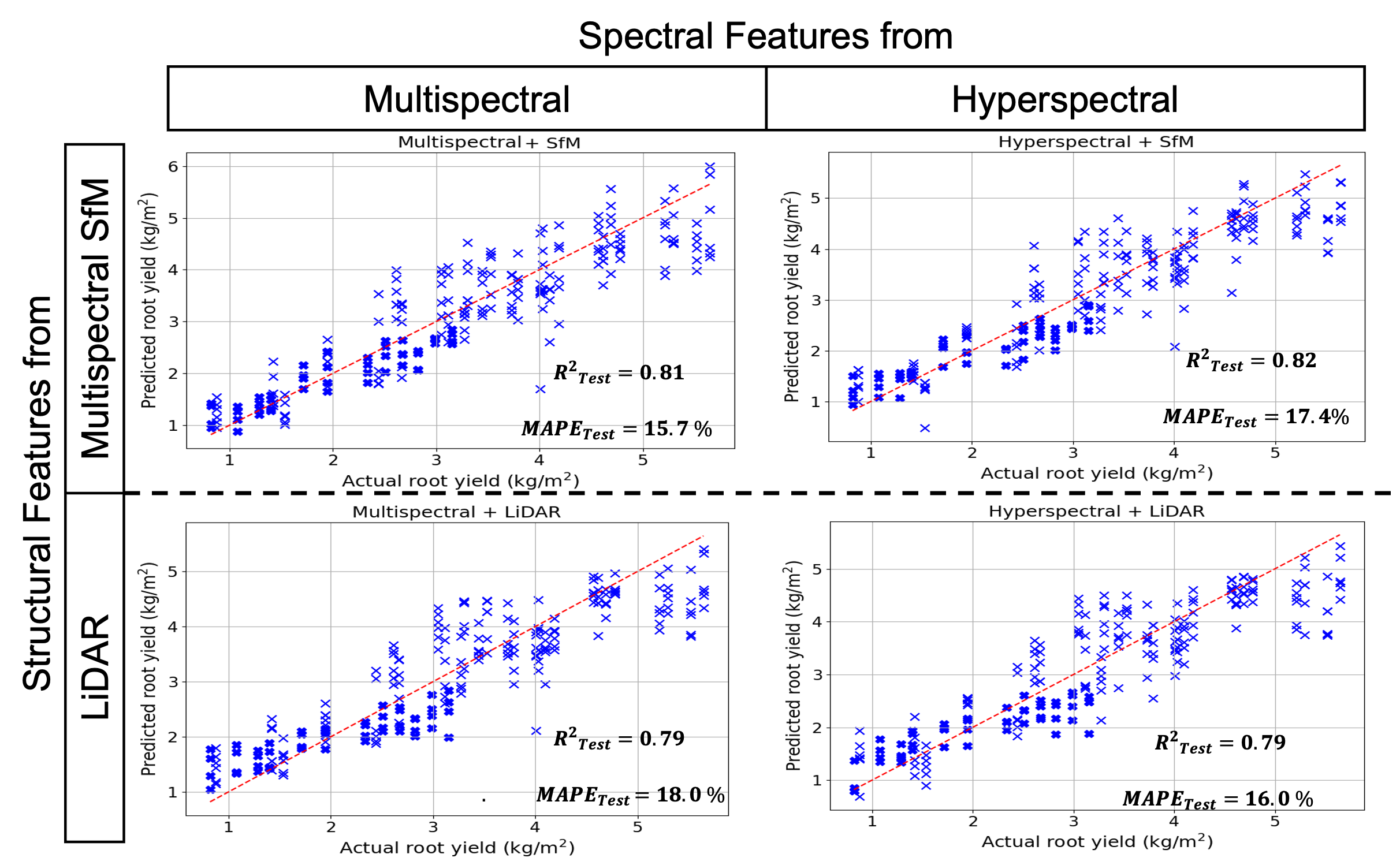

A key advancement has been the development of a multi-season, growth-stage-independent yield prediction model that integrates data from multispectral, hyperspectral, and LiDAR sensors. This framework, supported by a comparative analysis of sensor performance, is designed for real-world deployment and gives growers flexibility across varying environmental conditions. These findings were presented at AGU 2024 [3] and SPIE Defense + Commercial Sensing [4] and are currently under peer review in the ISPRS Journal of Photogrammetry and Remote Sensing.

Figure 1: A DJI Matrice 600 (MX1) drone equipped with multimodal sensors lifting off from a designated launch pad adjacent to a table beet field. The flight was part of a data collection campaign aimed at monitoring crop health and estimating yield using multispectral, hyperspectral, and LiDAR imaging.
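The internals of the multi-sensor framework are not described in this summary. One simple way to picture feature-level fusion is to join per-plot spectral features (multispectral or hyperspectral) with structural features (SfM or LiDAR) by plot ID, producing one feature table per sensor pairing, matching the four configurations compared in Figure 2. This is a hedged sketch: the plot IDs, feature values, and function names are hypothetical, and a regression model would then be trained on each fused table separately.

```python
from itertools import product

# Hypothetical per-plot feature vectors; plot IDs and values are illustrative only.
SPECTRAL = {
    "multispectral": {"p1": [0.62, 0.31], "p2": [0.58, 0.29]},
    "hyperspectral": {"p1": [0.60, 0.33, 0.12], "p2": [0.55, 0.30, 0.10]},
}
STRUCTURAL = {
    "SfM": {"p1": [0.21], "p2": [0.18]},
    "LiDAR": {"p1": [0.24, 0.05], "p2": [0.20, 0.04]},
}


def fuse(spectral: dict, structural: dict) -> dict:
    """Concatenate spectral and structural features for plots present in both sources."""
    shared = sorted(spectral.keys() & structural.keys())
    return {plot: spectral[plot] + structural[plot] for plot in shared}


def sensor_configurations() -> dict:
    """Build one fused feature table per (spectral, structural) sensor pairing."""
    return {
        (s_name, t_name): fuse(SPECTRAL[s_name], STRUCTURAL[t_name])
        for s_name, t_name in product(SPECTRAL, STRUCTURAL)
    }


configs = sensor_configurations()
for name, table in configs.items():
    print(name, table["p1"])
```

Keeping the model identical across tables, as the Figure 2 caption describes, means differences in accuracy can be attributed to the sensor combination rather than to the learner.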

In parallel, we developed machine learning models to estimate CLS disease severity using both multispectral and hyperspectral imagery. The resulting spatial disease maps could support targeted fungicide application. This work was presented at STRATUS 2024, and a manuscript titled “Estimation of Cercospora Leaf Spot Disease Severity in Table Beets from UAS Multispectral Images” [2] is under review in Computers and Electronics in Agriculture.

Ongoing work also includes estimating plant population density from UAS multispectral data. Early results are promising, and we aim to complete and publish this component by the end of 2025.
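The population-density method is still in progress and is not described here. As a hedged illustration only, one basic approach is to threshold a vegetation index into a binary plant mask and count connected blobs. The sketch assumes such a mask already exists; the minimum-size filter and plot area are hypothetical parameters. Touching canopies would merge into a single blob, so this is a starting point rather than the project's method.

```python
from __future__ import annotations

from collections import deque


def count_plants(mask: list[list[int]], min_pixels: int = 2) -> int:
    """Count 4-connected vegetation blobs containing at least `min_pixels` pixels."""
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Breadth-first flood fill to measure the size of this blob.
                size = 0
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    size += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if size >= min_pixels:  # ignore isolated noise pixels
                    count += 1
    return count


# Hypothetical binary vegetation mask (1 = plant pixel) for one small plot.
mask = [
    [1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1],
    [0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0],
]
plot_area_m2 = 2.0  # hypothetical ground area covered by the mask
plants = count_plants(mask)
print(plants, plants / plot_area_m2)  # plant count and plants per square metre
```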

Figure 2: Comparison of predicted versus actual table beet root yield across four sensor configurations: (top left) Multispectral + Structure-from-Motion (SfM), (top right) Hyperspectral + SfM, (bottom left) Multispectral + LiDAR, and (bottom right) Hyperspectral + LiDAR. Each model was evaluated using the same machine learning framework. The red dashed line denotes the 1:1 reference line. Performance metrics include the coefficient of determination (R²) and mean absolute percentage error (MAPE), highlighting the predictive accuracy of each sensor combination.
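For readers reproducing the Figure 2 metrics, the sketch below computes R² as 1 − SS_res / SS_tot and MAPE as the mean of |actual − predicted| / |actual|, expressed in percent. The caption does not state which R² definition was used (some predicted-versus-actual plots report the squared Pearson correlation instead), so the standard coefficient-of-determination form is an assumption here, and the yield values are made up for illustration.

```python
def r_squared(actual: list, predicted: list) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def mape(actual: list, predicted: list) -> float:
    """Mean absolute percentage error in percent; actual values must be non-zero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical plot-level root yields (e.g., kg per plot), not project data.
actual = [10.0, 20.0, 30.0, 40.0]
predicted = [12.0, 18.0, 33.0, 37.0]
print(r_squared(actual, predicted), mape(actual, predicted))
```

Because MAPE divides by each actual value, plots with very low observed yield weigh heavily, which is one reason to report it alongside R².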

References

[1] Chancia, R., van Aardt, J., Pethybridge, S., Cross, D., and Henderson, J. Predicting table beet root yield with multispectral UAS imagery. Remote Sensing 13, 11 (2021), 2180.

[2] Saif, M., Chancia, R., Sharma, P., Murphy, S. P., Pethybridge, S., and van Aardt, J. Estimation of Cercospora leaf spot disease severity in table beets from UAS multispectral images. Under review, Computers and Electronics in Agriculture.

[3] Saif, M., Chancia, R. O., Murphy, S. P., Pethybridge, S. J., and van Aardt, J. Assessing multiseason table beet root yield from unmanned aerial systems. In AGU Fall Meeting Abstracts (2024), vol. 2024, pp. B23H–04.

[4] Saif, M. S., Chancia, R., Murphy, S. P., Pethybridge, S., and van Aardt, J. Exploring UAS imaging modalities for precision agriculture: predicting table beet root yield and estimating disease severity using multispectral, hyperspectral, and LiDAR sensing. In Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imaging XXXI (2025), vol. 13455, SPIE, pp. 43–50.

[5] Saif, M. S., Chancia, R., Pethybridge, S., Murphy, S. P., Hassanzadeh, A., and van Aardt, J. Forecasting table beet root yield using spectral and textural features from hyperspectral UAS imagery. Remote Sensing 15, 3 (2023), 794.