Feasibility of Using Synthetic Videos to Train a YOLOv8 Model for UAS Detection and Classification

Principal Investigator(s)

Research Team Members

Luke Spinosa (BS)

Jeffrey Dank

External Collaborators:

Matthew Kremens

Pano Spiliotis (Alumnus)

Nicholas Cox (Alumnus)

Dare Bodington

Jason Babcock (Alumnus)

Sponsor:

Eoptic

Project Description

This work received the "Best Paper Award" at the 2025 SPIE DCS Symposium's conference on Synthetic Data for Artificial Intelligence and Machine Learning: Tools, Techniques, and Applications III.

In fast-paced research and development environments, collecting and labeling real-world data for machine learning is often impractical and costly due to equipment expenses, limited availability, and environmental variability. UAS technology is evolving rapidly, from commercial small unmanned aircraft systems (sUAS) to large military unmanned aircraft systems (UAS), and modern warfare is dynamic in both its nature and its locations, so adaptable detection systems are more critical than ever. Distinguishing UAS from natural flyers, such as birds, and from clutter, such as trees, is essential to reduce false positives and improve the reliability of the detection system. Synthetic data offers a solution by accelerating development and enabling scalable counter-UAS detection models.

This research evaluates the effectiveness of synthetic video data in training the YOLOv8 architecture for counter-UAS applications, a growing focus in defense. The diversity in aircraft sizes, speeds, and materials, combined with the presence of natural flyers such as birds, complicates detection systems that rely solely on real-world data. Synthetic data makes it possible to simulate many types of UAS and natural flyers, improving detection accuracy across a range of conditions.
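The comparison described on this page is between a model trained on purely real data and one trained on a mix of synthetic and real data in both the training and validation sets. A minimal sketch of how such mixed splits might be assembled is shown below. The function name `build_splits`, the file names, the 20% validation fraction, and the per-frame split are all illustrative assumptions; the source does not say how the splits were actually constructed.

```python
import random
from typing import List, Tuple


def build_splits(
    real_frames: List[str],
    synthetic_frames: List[str],
    val_fraction: float = 0.2,  # assumed value, not taken from the study
    seed: int = 0,
) -> Tuple[List[str], List[str]]:
    """Split real and synthetic frames into mixed train and validation lists."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be strictly between 0 and 1")

    rng = random.Random(seed)
    train: List[str] = []
    val: List[str] = []

    # Split each source separately so that both the training and the
    # validation set contain real and synthetic frames.
    for frames in (real_frames, synthetic_frames):
        shuffled = list(frames)
        rng.shuffle(shuffled)
        n_val = int(round(len(shuffled) * val_fraction))
        val.extend(shuffled[:n_val])
        train.extend(shuffled[n_val:])

    # Interleave the two sources within each split.
    rng.shuffle(train)
    rng.shuffle(val)
    return train, val


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    real = [f"real_{i:03d}.png" for i in range(10)]
    synthetic = [f"syn_{i:03d}.png" for i in range(20)]
    train, val = build_splits(real, synthetic)
    print(len(train), "training frames,", len(val), "validation frames")
```

Passing an empty `synthetic_frames` list yields the real-only baseline with the same splitting logic. Because consecutive video frames are highly similar, splitting by whole clip rather than by frame may be preferable in practice.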

Synthetic videos were generated using the Digital Imaging and Remote Sensing Image Generation (DIRSIG) environment, which accurately simulates real-world conditions. A specific location on the campus of the Rochester Institute of Technology (Rochester, New York, USA) was modeled, featuring an open field with a forested background, providing realistic scenarios for small unmanned aircraft and birds flying through the scene. This focus on video sequences replicates real-world conditions and offers potential for future AI models that incorporate flight paths.

Figure 1: Examples where the addition of synthetic data to both the training and validation sets negatively impacted the model’s performance. The red boxes represent the ground truth, while the blue boxes represent the model’s predictions. These instances highlight rare cases where the model trained on purely real data outperformed the model trained with a mix of synthetic and real data.

Although this study focuses on visible-spectrum imagery due to the challenges of collecting real-world data for comparison, the current DIRSIG model is fully capable of simulating the capture of short-wave infrared (SWIR), mid-wave infrared (MWIR), and long-wave infrared (LWIR) image data. These additional bandpass regions are critical for detection in low-visibility and nighttime scenarios and are expected to be used in the near future. This research aims to develop a more robust system capable of adapting to the evolving sUAS landscape, enabling accurate detection of various makes and models of unmanned aircraft while distinguishing them from natural flyers, such as birds.

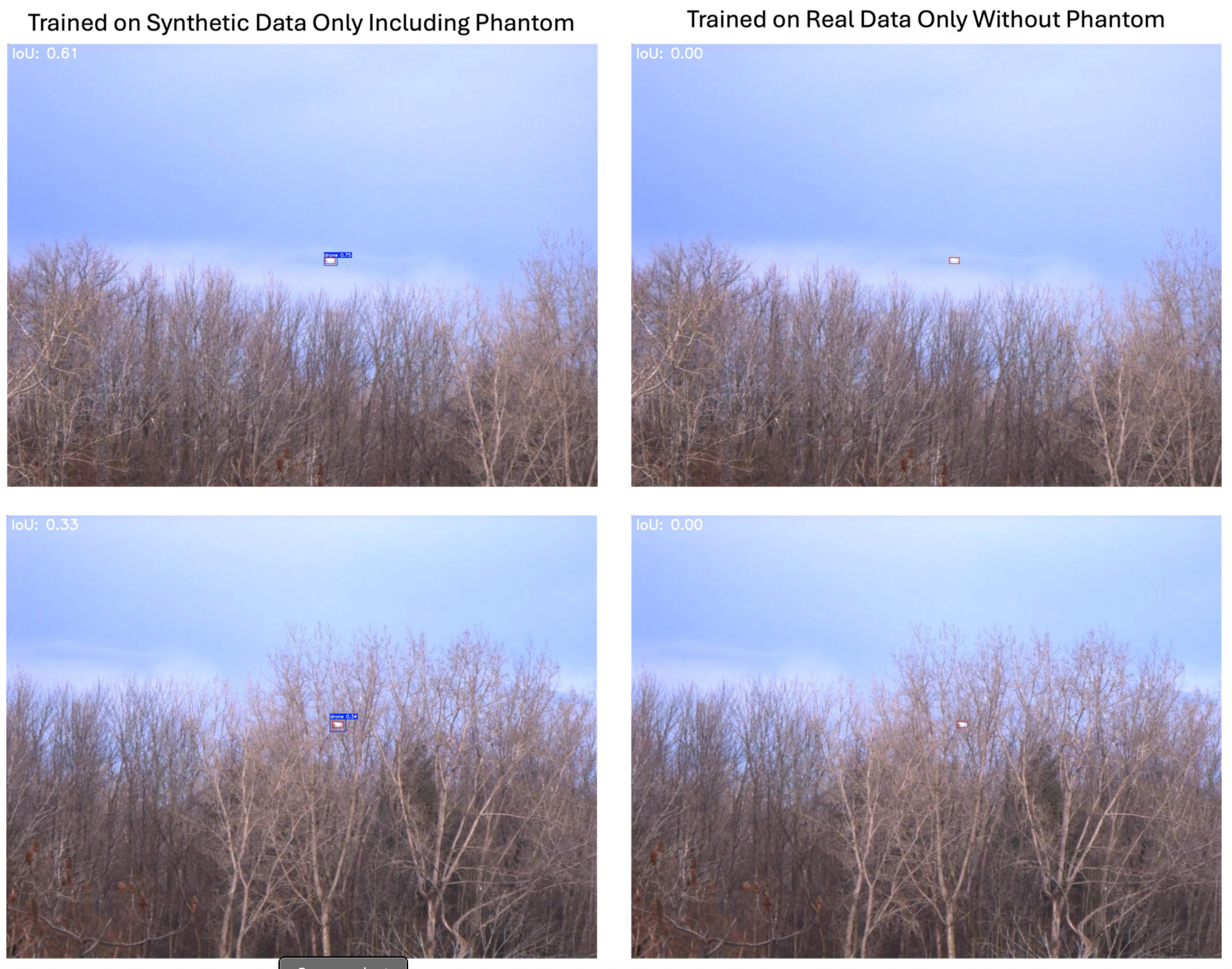

Figure 2: Performance snapshots illustrating superior synthetic data performance in cluttered scenarios. Each frame compares ground truth bounding boxes (red) against predicted bounding boxes (blue). Models trained with synthetic data appear to excel during periods of increased environmental clutter and occlusion.
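Both figures compare ground truth boxes against predicted boxes. A common way to quantify that comparison is intersection over union (IoU), followed by a matching step that counts true positives, false positives, and missed detections per frame. The sketch below illustrates the idea; the corner-coordinate box format, the 0.5 IoU threshold, the greedy matching, and the function names are assumptions for illustration, not the evaluation procedure used in the paper. Class labels are ignored for brevity.

```python
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_frame(
    truth: List[Box],
    predictions: List[Box],
    threshold: float = 0.5,  # assumed threshold, not taken from the study
) -> Tuple[int, int, int]:
    """Greedily match predictions to ground truth; return (tp, fp, fn)."""
    unmatched = list(range(len(truth)))
    true_positives = 0
    for pred in predictions:
        best_idx, best_score = None, threshold
        # Find the best still-unmatched ground truth box at or above threshold.
        for idx in unmatched:
            score = iou(truth[idx], pred)
            if score >= best_score:
                best_idx, best_score = idx, score
        if best_idx is not None:
            unmatched.remove(best_idx)
            true_positives += 1
    false_positives = len(predictions) - true_positives
    false_negatives = len(unmatched)
    return true_positives, false_positives, false_negatives


if __name__ == "__main__":
    ground_truth = [(0.0, 0.0, 10.0, 10.0)]
    predicted = [(1.0, 1.0, 11.0, 11.0), (50.0, 50.0, 60.0, 60.0)]
    print(match_frame(ground_truth, predicted))
```

In this framing, a prediction on a bird or a tree with no matching ground truth box counts as a false positive, which is the failure mode the project description highlights.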

References

[1] Spinosa, L. D., Dank, J. A., Kremens, M. V., Spiliotis, P. A., Cox, N. A., Bodington, D. E., Babcock, J. S., and Salvaggio, C. Feasibility of using synthetic videos to train a YOLOv8 model for UAS detection and classification. In Synthetic Data for Artificial Intelligence and Machine Learning: Tools, Techniques, and Applications III (2025), vol. 13459, SPIE, pp. 365–383.