High-fidelity scene modeling and vehicle tracking using hyperspectral video

Principal Investigator(s)

Matthew Hoffman and Anthony Vodacek

Research Team Members

Zachary Mulhollan, Aneesh Rangnekar, Scott Brown

Project Description

Surveillance of vehicles from remote sensing platforms is a challenging problem when viewing conditions change and obscurations intervene. This project examines the use of multi-modal sensing and dynamic adjustment of tracking and background models to improve tracking performance. The primary sensing mode for the vehicle tracking methods is imaging spectrometry; however, other modalities will be considered. Background modeling efforts exploit the capabilities of DIRSIG5 to dynamically update the scene viewing angle and scene clutter.

Project Status

The project started in February 2019. Activities to date have centered on planning the collection of tracking video with the gimbal-mounted Headwall hyperspectral camera funded by the DURIP program. In addition, the students are developing skills and methods for dynamically interfacing DIRSIG with vehicle tracking. Their work so far has focused on two areas: applying deep learning to tracking in a way that emphasizes spectral rather than spatial data, and dynamically modifying and refining DIRSIG scenes.
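
To illustrate what scoring pixels by their spectrum alone (without spatial context) can look like, the sketch below uses the classic spectral angle mapper rather than a deep network; it is not the project's method. It assumes a hyperspectral cube held as a NumPy array of shape (rows, cols, bands) and a reference spectrum taken from the tracked vehicle. The function name `spectral_angle_map` and the synthetic data are hypothetical.

```python
import numpy as np


def spectral_angle_map(cube: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Return the per-pixel spectral angle (radians) to a reference spectrum.

    cube:      array of shape (rows, cols, bands)
    reference: array of shape (bands,)
    Each pixel is scored from its own spectrum only; no spatial neighborhood
    is used. Smaller angles mean a more similar spectral shape.
    """
    cube = np.asarray(cube, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    dots = cube @ reference                                    # (rows, cols)
    norms = np.linalg.norm(cube, axis=-1) * np.linalg.norm(reference)
    # All-zero spectra get cos = 0 (angle pi/2) instead of dividing by zero.
    cos = np.divide(dots, norms, out=np.zeros_like(dots), where=norms > 0)
    # Clip away rounding error before arccos.
    return np.arccos(np.clip(cos, -1.0, 1.0))


# Usage on synthetic data (a hypothetical 30-band cube, not project data).
rng = np.random.default_rng(0)
cube = rng.random((4, 5, 30))
reference = cube[2, 3].copy()        # pretend this pixel lies on the tracked car
angles = spectral_angle_map(cube, reference)
best = tuple(int(i) for i in np.unravel_index(np.argmin(angles), angles.shape))
print(best)  # (2, 3): the reference pixel matches itself
```

The spectral angle is unchanged when a spectrum is scaled by a positive constant, which is one reason it is a common baseline when illumination varies between frames.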

Figures and Images

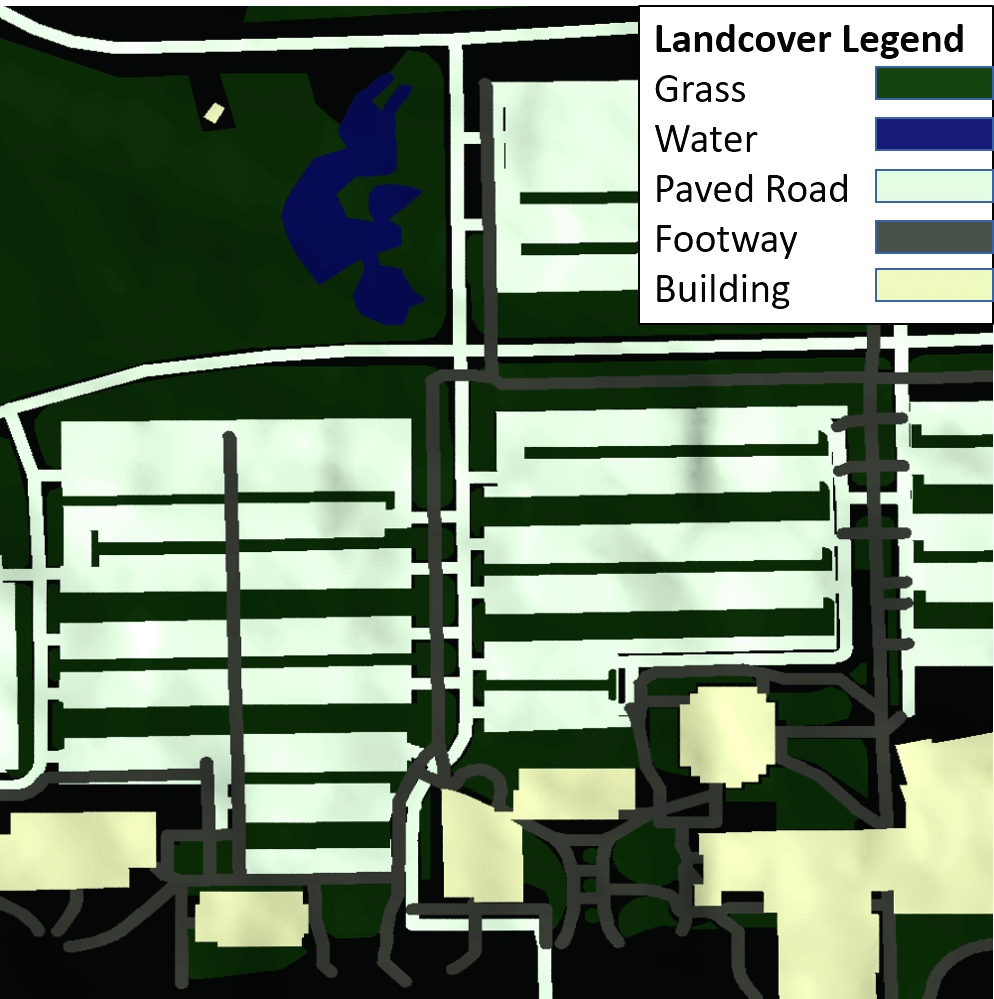

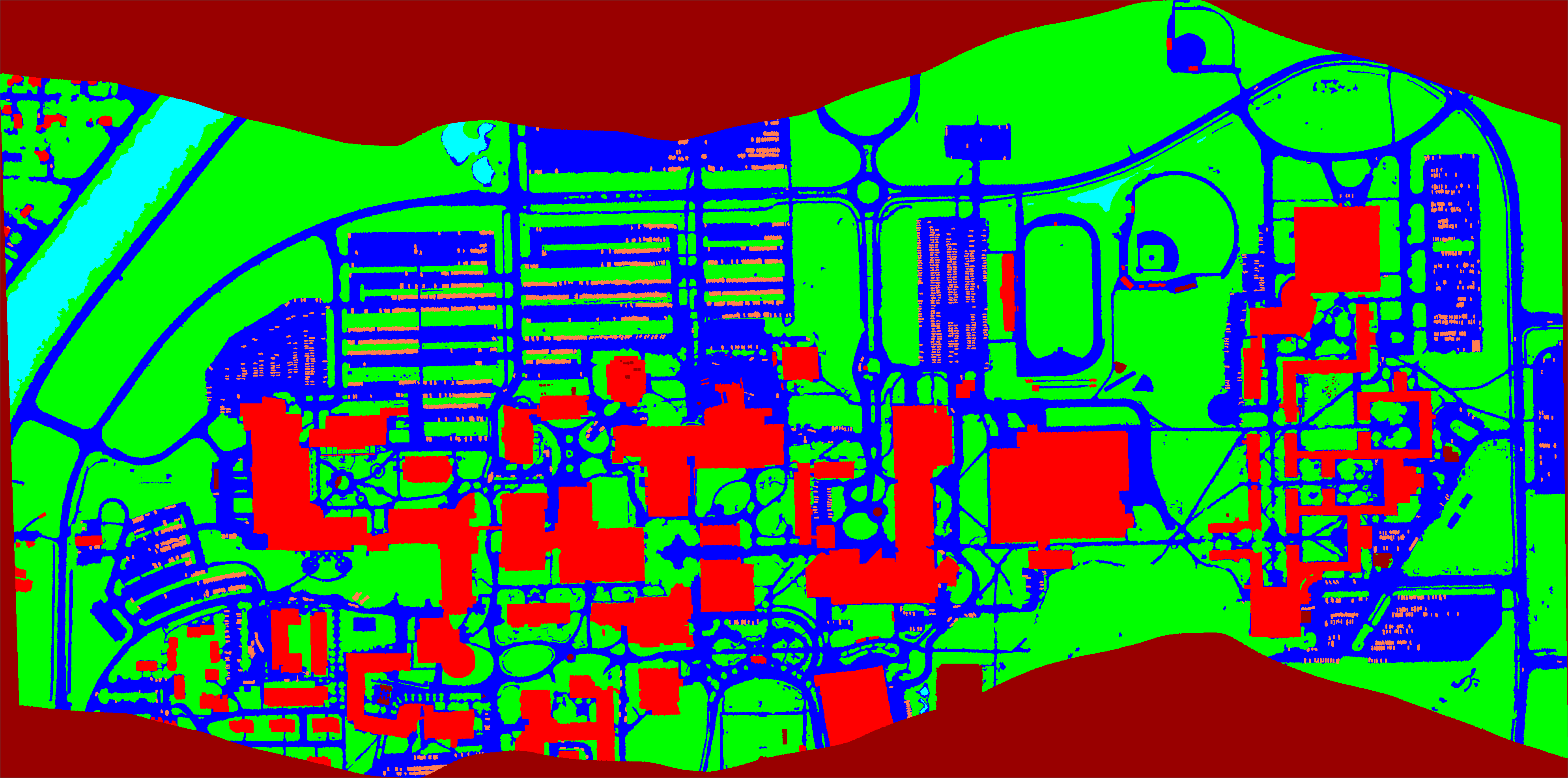

Labeled image, suitable for deep learning, created from a hyperspectral scene of the RIT campus. Cars (pink) in the parking lots and on roadways (blue) are individually labeled, along with background vegetation (green) and buildings (red). This effort demonstrates the ability to generate large amounts of labeled data for training a deep network.
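
A color-coded label image like the one above typically has to be converted into per-pixel integer class ids before it can be used to train a segmentation network. The following is a minimal sketch in Python with NumPy, assuming the label image is an RGB array. The exact RGB values, the class ordering, the ignore value of -1, and the name `colors_to_class_ids` are all hypothetical. Separating individual cars into distinct instances is not shown.

```python
import numpy as np

# Hypothetical RGB codes for the caption's label colors; the values actually
# used in the project's label images are not given, so these are assumptions.
# Class ids follow dictionary order: car=0, road=1, vegetation=2, building=3.
LABEL_COLORS = {
    "car": (255, 0, 255),        # stand-in for "pink"
    "road": (0, 0, 255),         # blue
    "vegetation": (0, 255, 0),   # green
    "building": (255, 0, 0),     # red
}
IGNORE_ID = -1  # assigned to pixels whose color is not in the palette


def colors_to_class_ids(rgb: np.ndarray, palette: dict = LABEL_COLORS) -> np.ndarray:
    """Convert a (rows, cols, 3) color-coded label image to integer class ids."""
    rgb = np.asarray(rgb)
    ids = np.full(rgb.shape[:2], IGNORE_ID, dtype=np.int64)
    for class_id, color in enumerate(palette.values()):
        # A pixel belongs to this class only if all three channels match exactly.
        match = np.all(rgb == np.asarray(color, dtype=rgb.dtype), axis=-1)
        ids[match] = class_id
    return ids


# Usage on a tiny synthetic 2x2 label image.
label_image = np.array(
    [[[255, 0, 255], [0, 0, 255]],
     [[0, 255, 0], [10, 10, 10]]],
    dtype=np.uint8,
)
print(colors_to_class_ids(label_image))
# [[ 0  1]
#  [ 2 -1]]
```

Exact color matching assumes the label image was stored losslessly (for example as PNG); lossy compression would shift colors and leave many pixels at the ignore value.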