High-fidelity scene modeling and vehicle tracking using hyperspectral video

Principal Investigator(s)

Matthew Hoffman

Research Team Members

Tony Vodacek, Chris Kanan, Tim Bauch, Don McKeown, Aneesh Rangnekar, Zachary Mulhollan

Project Description

The ability to persistently track vehicles and pedestrians in complex environments is crucial to increasing the autonomy of aerial surveillance for the Air Force, Department of Defense, homeland security, and disaster relief coordination. Non-visual surveillance is particularly beneficial for airborne collections, where the number of pixels on a target is relatively small and spatial features alone are therefore not sufficient to identify it. Spectral cameras can leverage additional phenomenological features to enhance robustness across varying environments.

This project involves 1) collecting and annotating novel hyperspectral datasets of vehicle and pedestrian movement that will be released to the community through the Dynamic Data-Driven Applications Systems (DDDAS) website, 2) developing a DDDAS framework for efficiently extracting and exploiting information from this large dataset to support different applications, including real-time tracking and understanding actions at a meet-up of different targets of interest, and 3) developing a new capacity for DIRSIG to support dynamic, online construction of physics-based scene models.

Multiple data collections have taken place, including hyperspectral video collected from the rooftop of the Chester F. Carlson building at RIT. This is one of the first hyperspectral video scenes for tracking vehicles. In addition, a drone collect of vehicle paint signatures was conducted on the RIT campus. This collect is being used to provide a larger database of paint signatures, including spectral variance information, to the DIRSIG platform. A larger vehicle signature database will increase the utility of DIRSIG for generating training data for deep learning applications. A mount has been constructed so that the hyperspectral video camera can be carried on moving vehicles, and we continue planning an airplane collection.

Figures and Images

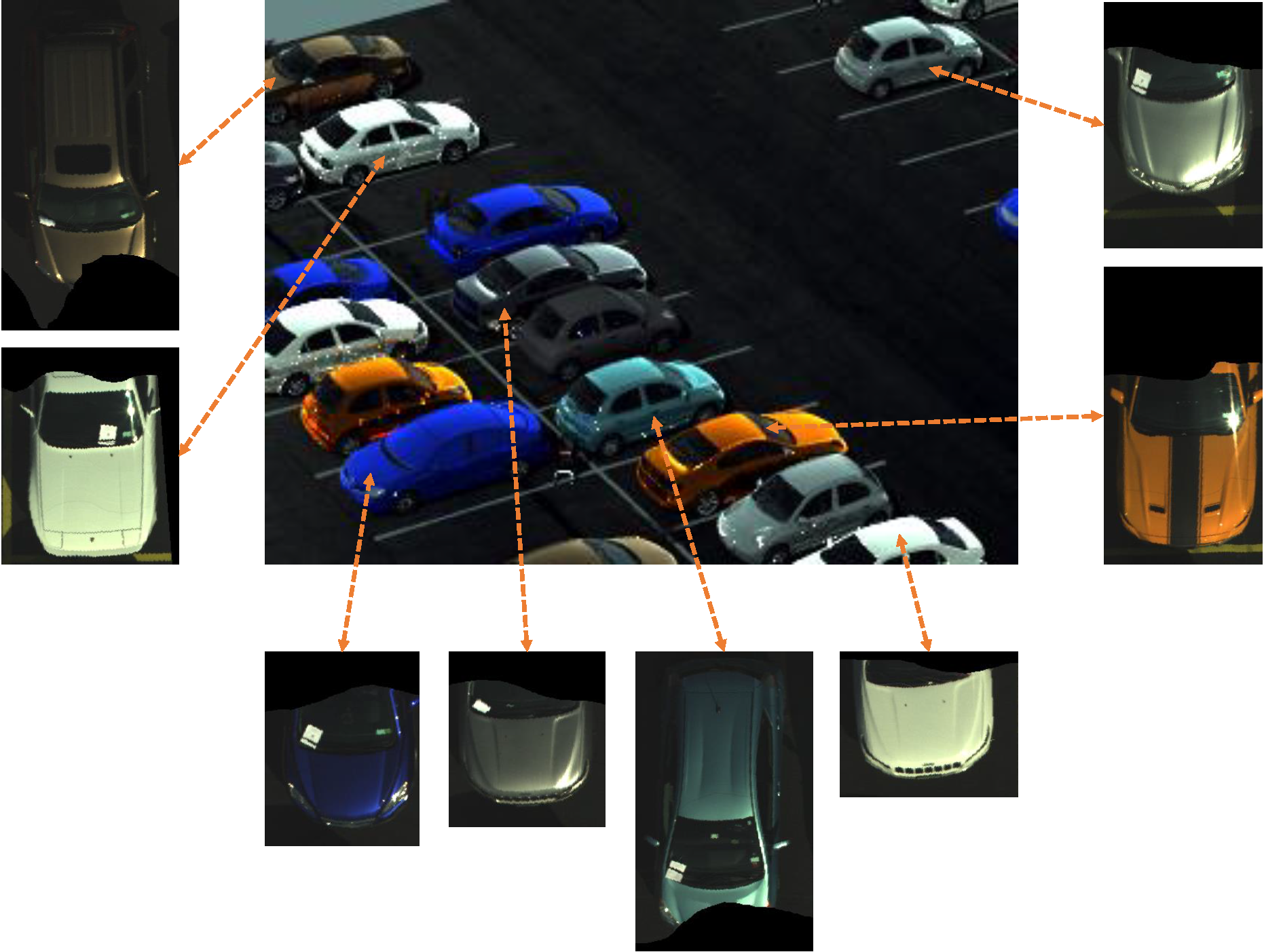

Simulated DIRSIG parking lot scene (center) using paint spectral signatures from an HSI drone collect of cars in the RIT parking lot (surrounding images). The collected paint signatures are being used to expand the DIRSIG vehicle paint library and improve the generation of training data for target detection and tracking algorithms using HSI.

Output of vehicle detection results on a frame of hyperspectral video taken from the roof of the Chester F. Carlson building at RIT. Detections are produced by different convolutional neural networks. The top two use only the traditional RGB information from the image, while the bottom two show the prediction confidence of networks designed to incorporate hyperspectral data.