Radiometrically Accurate Spatial Resolution Enhancement of Spectral Imagery

Principal Investigator(s)

Research Team Members

Jose Macalintal (Ph.D.)

Amir Hassanzadeh

Sponsor:

National Geospatial-Intelligence Agency, NURI grant program

Project Description

This research project addresses the challenging problem of sharpening remotely sensed hyperspectral imagery with higher-resolution multispectral or panchromatic imagery, with a focus on maintaining the radiometric accuracy of the resulting sharpened imagery. Traditional spectral sharpening methods focus on producing results with visually accurate color reproduction, without regard for overall radiometric accuracy. However, hyperspectral imagery (HSI) is processed through (semi-)automated workflows to produce various derived products, and these workflows operate on the pixel values themselves rather than on visual appearance. Consequently, maintaining radiometric accuracy is a key goal of spatial resolution enhancement of hyperspectral imagery. Here, our focus is on characterizing the issues related to accurate sharpening, and in particular on understanding how the spatial-spectral content and complexity of a scene affect the ability to sharpen a particular image, or portion of an image.

Because of their high spectral resolution, hyperspectral sensors are typically designed to integrate in the spatial dimension to ensure high signal-to-noise ratios. As a result, they generally operate at significantly lower spatial resolutions than typical multispectral or panchromatic sensors. If we can develop methods that successfully sharpen the final imagery, then we may be able to use HSI systems for larger-area coverage applications while maintaining the ability to do targeted, quantitative exploitation.

Current work uses an AI/ML approach with a U-Net architecture to sharpen low-resolution (LR) shortwave infrared (SWIR) data using high-resolution (HR) visible and near-infrared (VNIR) imagery. The model is divided into encoder, decoder, and output blocks. Each encoder block is composed of a 2D convolutional layer with a 3 × 3 filter, a Parametric ReLU (PReLU) activation function, and a dropout layer. Max pooling downsamples the feature maps between successive encoder blocks. The decoder blocks follow a similar structure to the encoder blocks, except that they upsample using bilinear interpolation.
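The operations named in an encoder block can be illustrated with a small pure-Python sketch. This is not the project's implementation: the function names `conv3x3_same`, `prelu`, and `max_pool_2x2` are hypothetical, the zero padding and the 2 × 2 pooling window are assumptions, a single channel is used for simplicity, and dropout and the decoder's bilinear upsampling are omitted.

```python
def conv3x3_same(image, kernel):
    """Zero-padded 3x3 cross-correlation, the operation a 2D conv layer computes."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    # Pixels outside the image contribute zero (assumed padding).
                    if 0 <= rr < h and 0 <= cc < w:
                        acc += image[rr][cc] * kernel[dr + 1][dc + 1]
            out[r][c] = acc
    return out


def prelu(image, slope):
    """PReLU: identity for non-negative inputs, learned slope for negative ones."""
    return [[v if v >= 0 else slope * v for v in row] for row in image]


def max_pool_2x2(image):
    """Non-overlapping 2x2 max pooling; halves each spatial dimension."""
    h, w = len(image), len(image[0])
    return [
        [max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
         for c in range(0, w - 1, 2)]
        for r in range(0, h - 1, 2)
    ]


# Toy 4x4 single-channel input and an identity kernel (illustrative values only).
patch = [[1, -2, 3, 4],
         [5, 6, -7, 8],
         [9, 10, 11, 12],
         [-13, 14, 15, 16]]
identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]

features = prelu(conv3x3_same(patch, identity), slope=0.25)
pooled = max_pool_2x2(features)  # 2x2 feature map passed to the next encoder
```

In a trained network the kernel weights and the PReLU slope are learned parameters; the fixed values here only make the data flow easy to follow.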

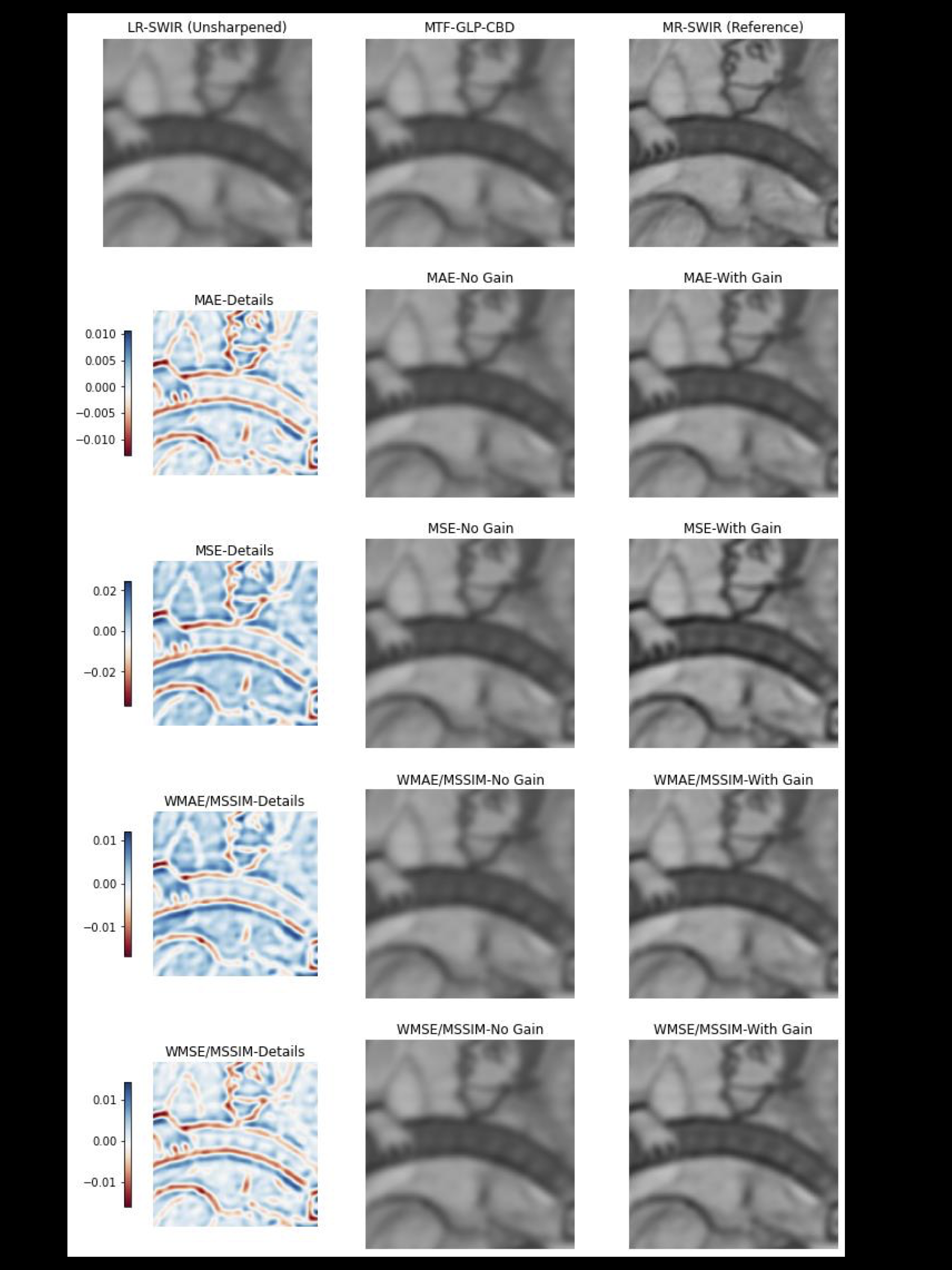

Four different loss functions are examined for the U-Net: the Mean-Squared Error (MSE), the Mean-Absolute Error (MAE), the Weighted Mean-Squared Error with Multiscale-SSIM (WMSE+MSSIM), and the Weighted Mean-Absolute Error with Multiscale-SSIM (WMAE+MSSIM).
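As a point of reference, the two unweighted losses can be written out in a few lines. The sketch below uses the standard definitions of MSE and MAE on flattened pixel lists; the sample values are made up for illustration. The weighting scheme and the Multiscale-SSIM term of the two combined losses are not specified here, so they are deliberately not reproduced.

```python
def mse(pred, target):
    """Mean-squared error: penalizes large deviations quadratically."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def mae(pred, target):
    """Mean-absolute error: penalizes all deviations linearly."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


# Illustrative (made-up) pixel values for a predicted and a reference patch.
predicted = [0.2, 0.5, 0.9, 0.4]
reference = [0.0, 0.5, 1.0, 0.8]

print("MSE:", mse(predicted, reference))
print("MAE:", mae(predicted, reference))
```

Because MSE squares each residual, a few large errors (for example at strong edges) dominate it, whereas MAE weights every pixel error in proportion to its size.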

An illumination from a 12th-century manuscript is shown in Figure 1. A small 128 × 128 pixel patch is used for testing, and Figure 2 shows the resulting error metrics for sharpening this patch. The first row contains reference images: the LR-SWIR, the MR-SWIR, and the product of a non-neural-network algorithm known as MTF-GLP-CBD. The remaining rows show the sharpened products for each loss function (with and without gain), as well as the details image that is added to the LR-SWIR to sharpen it.

Figure 1

Figure 2

Across all loss functions, the model was able to identify the pixels that required sharpening, i.e., edge pixels or fine structures such as the face or the hand. However, without the gain, the magnitude of the details image may be too small to produce any significant sharpening improvement. With the gain, the sharpened result moves visibly closer to the reference MR-SWIR image. The model trained with the MSE loss, with the gain applied, demonstrates this improvement: close examination shows sharper facial features and thicker dark lines in the image.
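The role of the gain can be illustrated with a minimal detail-injection sketch. It assumes the gain is a single scalar that multiplies the details image before it is added to the LR-SWIR band; the exact form of the gain used in this work is not stated here, and the function name `inject_details` and all pixel values are hypothetical.

```python
def inject_details(lr_band, details, gain=1.0):
    """Add a (optionally amplified) details image to a low-resolution band."""
    return [
        [lr + gain * d for lr, d in zip(lr_row, d_row)]
        for lr_row, d_row in zip(lr_band, details)
    ]


# Made-up 2x2 example: a flat LR band and a small-magnitude details image.
lr_swir = [[0.5, 0.5],
           [0.5, 0.5]]
details = [[0.00, 0.01],
           [-0.01, 0.00]]

no_gain = inject_details(lr_swir, details)              # barely changes the band
with_gain = inject_details(lr_swir, details, gain=10.0)  # edges become visible
```

With `gain=1.0` the edge pixels differ from the flat background by only 0.01, while with the assumed gain of 10 the difference grows to 0.1, which mirrors the observation that the details image alone can be too weak to show a visible improvement.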

References

[1] Macalintal, J., and Messinger, D. Radiometric assessment of hyper sharpening algorithms using a detail-injection convolutional neural network on cultural heritage datasets. In Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imaging XXXI (2025), vol. 13455, SPIE.