Registration of full motion video

Principal Investigator(s)

Emmett Ientilucci

Research Team Members

Liam Smith, Nina Raqueno

Project Description

In many cases, it is important to have location information associated with imagery. When position and pointing telemetry is provided by the imaging platform, it is trivial to compute this information. However, when this information, which includes GPS, is deficient, the missing location information must be extrapolated. Thus there becomes a need to extract location information from motion imagery in such a scenario. Various techniques exist to compensate for lack of GPS data in an aerial image matching context, including using additional information from other sensors. Our work focuses on how various machine learning techniques can be implemented and leveraged to perform image matching, or alignment, in a real-time.

Our investigations of feed-forward convolutional neural networks (to perform image alignment) has resulted in three approaches, called HNET, VWNET and MOFLNET. HNET is based on literature, with a feed-forward convolutional neural network (CNN) that takes stacked grayscale images to produce a homography. VWNET is a modification of HNET that instead produces a coarse vector field describing the warping between two images. MOFLNET is another modification of HNET, essentially appending the CNN with a set of transposed convolutions that produce a stacked grayscale image pair representing the optical flow between the two input images, which has similarities to models in literature.

Figures and Images

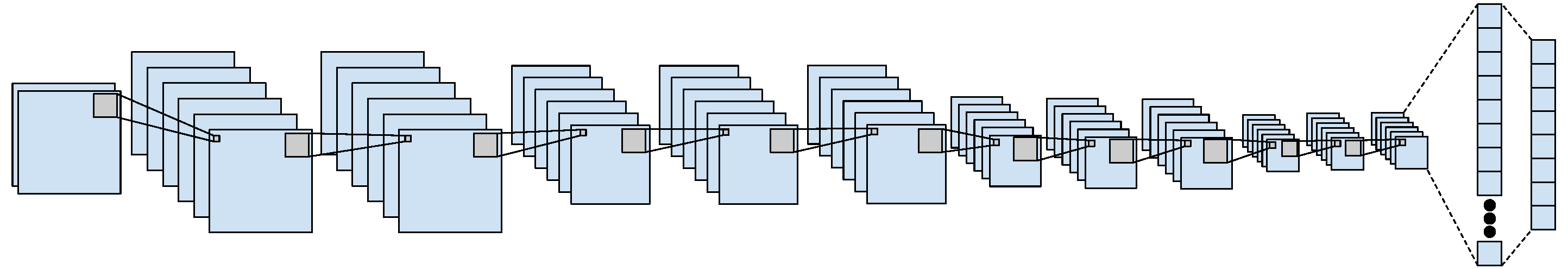

Visualization of the HNET architecture, which is based on the model presented in (D. DeTone, et. al., CoRR,2016).

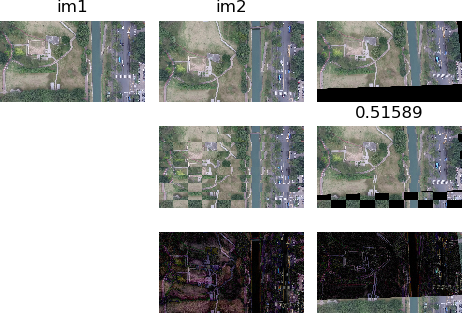

Example comparison image set to show that HNET performance is good on unseen and unrelated data. Img1 is the reference image while img2 is the warped image. The weighted average loss metric is shown below the result (upper left). Values between 0.5 and 0.59 are good while values below 0.49 are very good. We also visualize the alignment by taking the reference and warped images and overlaying them in a grid / checkerboard pattern so that alignment of features can be easily evaluated (second row). The absolute difference is shown (bottom row).