RIT becomes test site for Yamaha immersive audio technology

Sungyoung Kim’s research in virtual acoustic systems anchors collaboration with international company

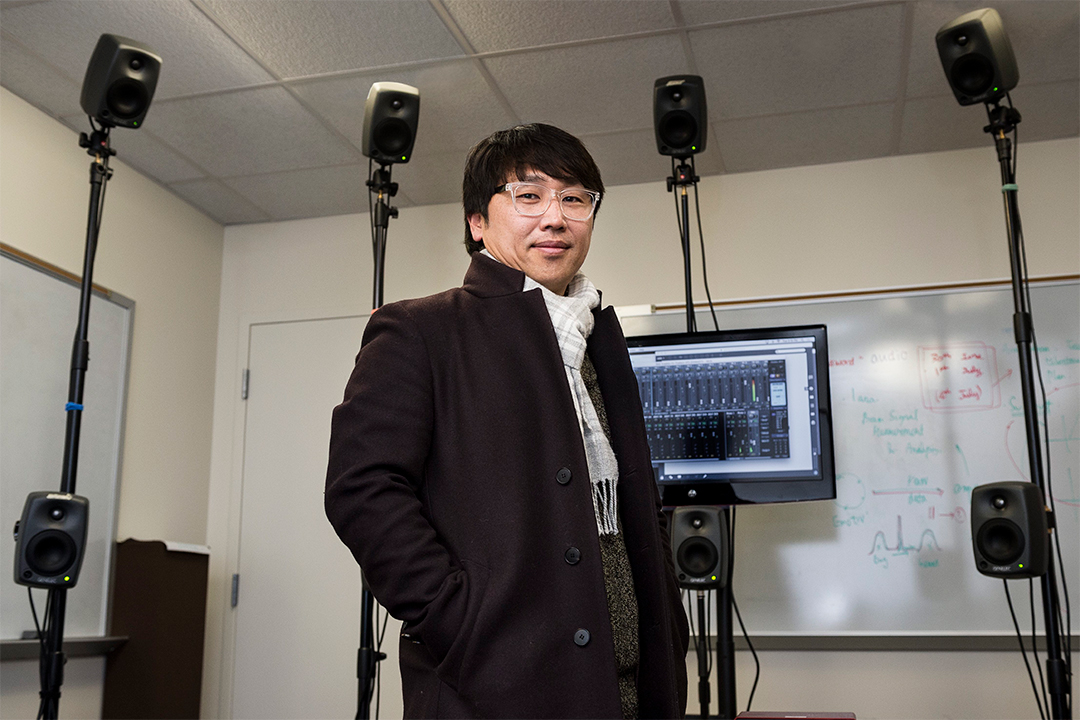

Elizabeth Lamark

An RIT audio engineering professor, Sungyoung Kim, worked with Yamaha engineers and a local composer to test Yamaha’s Active Field Control System.

Researchers at Rochester Institute of Technology are improving how people experience sound. Some of those improvements will add to the high-tech acoustics being developed by international entertainment company Yamaha Corp.

Sungyoung Kim’s Applied and Innovative Research for Immersive Sound Lab (AIRIS) at RIT became a test site to explore future capabilities of one of Yamaha’s newest technologies, the Active Field Control System (AFC). Kim and his students will help develop next-phase improvements to the high-tech audio system.

“I was approached by Yamaha to discuss the future of this technology. The first step was to adapt all the Yamaha technology into our lab. That was phase one,” said Kim, an associate professor of audio engineering technology in RIT’s College of Engineering Technology, who has a background in developing immersive audio systems that enhance sound in modern spaces.

AFC technology enhances an environment by controlling reverberations and sound/object positions, deepening acoustics in passive spaces, and creating various auditory ambient settings that augment the architecture of the site. Often referred to as 3D audio, virtual and immersive sound is an emerging research and production field where companies such as Yamaha continually seek to provide enriched quality sound over varied platforms, especially for classical music concerts in 3D.

“One of the existing issues in 3D music performances is how to synchronize computer-generated parts and acoustic atmospheres with human performances. And this is a hot research topic about how computers recognize music and follow a human’s performance,” said Kim. “Composers are using technology as a supporting one to accomplish their musical creativity. We just changed the concept.”

Part of the concept entailed understanding how the music and the listening environment integrate. The system re-creates particular acoustical settings to enhance live performances. It is more than an improvement to speakers: the system adjusts the acoustics of a modern space to give an audience the impression of hearing sound in another setting, from an ancient cathedral to wind-blown caves, without having to be there.

Photo provided by Sungyoung Kim

From left to right, RIT’s Sungyoung Kim; Shigeki Takasaka, stream editor/director; and Sihyun Uhm, composer, discuss the process for performing her new work with the Active Field Control System. The concert took place at Yamaha’s Ginza location in Tokyo, Japan.

As part of a first experiment, Kim worked with Sihyun Uhm, a composer from the Eastman School of Music, asking her to render a new piece of music through the system. Computers can play back pre-recorded effects, but this system differs by placing the audience inside the aural environment. The composer intended the audience to sense or internally visualize two environments, a mountain and a desert, and the composition moved back and forth between the two, Kim explained.

“Many people are trying this today. With orchestras, for example, there are always changes in orchestral movements, comparable from one scene to another in a play,” he said. “This new composition was only 10 minutes long. We changed the acoustic scenery, or the musical scenery, several times within one movement, which is unique and challenging. It is a different approach to music.”

Uhm’s composition, String Quartet No. 2, was performed at the Yamaha Ginza Studio in Tokyo, Japan, last summer. Kim was joined by Hideo Miyazaki, a spatial acoustic design engineer with Yamaha, along with local musicians, colleagues from the company, and several alumni of RIT’s engineering technology program living in Japan.

Four RIT students participated as assistant engineers/operators, working with the AFC system as Kim prepared for the concert.

“While students can learn many aspects of acoustics from books, the system provided a unique learning opportunity in how to virtually manipulate acoustics in real time,” said Kim, whose work is funded through two corporate grants from Yamaha. The first, “Toward individualized presentation of immersive experience,” is a three-year grant to assess auditory selective attention, the cognitive process humans use to distinguish sounds and environments. The second, “Investigating perceptual cues required for remote tuning of the Yamaha AFC System,” examines virtual connections and how engineers can tune systems remotely without going into the actual space.

“It is more technical in how to remotely tune a system, and it involves the concept of working in the metaverse. With people doing more remote, virtual work, there is a need to have audio systems compatible and with audio quality as high as possible,” Kim said.

This audio research for Yamaha could also benefit RIT, which has multiple conference and auditorium spaces across campus. The university could serve as a test bed where physically separate spaces are virtually synchronized.

“We can have one musician in one building, another musician in another building, and I want to see if they can play together,” he said. “It is the future of music in the metaverse. You can have an artist performing in Korea, while another plays in the U.S.—even here at RIT. In the metaverse there shouldn’t be any walls or barriers between those musicians. I think acoustic scenery is the thing to make them feel that they are in the same space.”