Meet the Dean

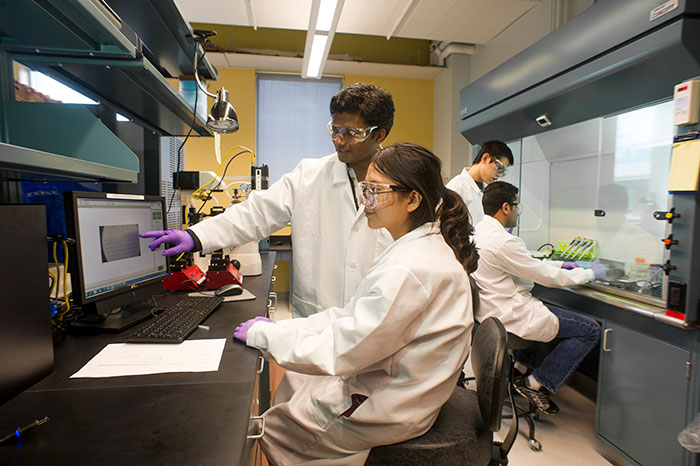

Engineers are creative problem solvers who can have a profound impact on the world. In addition to providing an outstanding technical foundation, the Kate Gleason College of Engineering encourages students to understand the many ways in which their work affects society. Because we believe that students learn by doing, we provide them with abundant opportunities to design, build, and innovate both inside and outside the classroom.

Doreen Edwards