New study investigates illegal child sexual abuse material and anonymity on Tor network

RIT cybersecurity professor publishes research in ‘Nature’s Scientific Reports’


An RIT cybersecurity professor is part of a global research group addressing illegal and harmful behavior on the anonymous Tor network. The team analyzed search sessions for child sexual abuse material (CSAM) on the popular Ahmia Tor search engine.

Rochester Institute of Technology cybersecurity professor Billy Brumley is helping shed light on dark corners of the internet.

Brumley and a multidisciplinary group of researchers from around the globe are investigating the availability of child sexual abuse material (CSAM) online to better understand and combat its widespread dissemination. Their findings provide crucial insights into harmful behavior on anonymous services and point to potential strategies for public health intervention.


Billy Brumley, the Kevin O’Sullivan Endowed Professor in Cybersecurity at RIT.

The study, published in Nature’s Scientific Reports, offers a comprehensive analysis of the availability, search behavior, and user demographics related to CSAM on the Tor network. The team also intercepted CSAM users directly with a survey, discovering new ways to interfere with users’ activities.

Every day, millions of people use Tor, the anonymity network often associated with the dark web, to encrypt their communications and make their internet browsing difficult to trace. Anonymity has both legal and illegal uses, and a vast amount of illegal CSAM is accessible through the Tor network.

“The cybersecurity research field largely ignores the CSAM epidemic. It is categorically the head-in-the-sand ostrich syndrome,” said Brumley, the Kevin O’Sullivan Endowed Professor in Cybersecurity at RIT. “Security and privacy scholars tend to focus on purely technical aspects of anonymity and anti-censorship. Whenever anyone proposes technologies that might threaten anonymity or provide content filtering, it’s often met with ignorant knee-jerk reactions.”

The study points to a 2022 U.S. Congress report noting that, despite evidence of the growing prevalence and severe consequences of CSAM accessible through the Tor network, computer science research on CSAM remains limited and anonymous services have not taken action.

“We have a duty to the public,” continued Brumley. “Our technologies are not encased in a vacuum. Yes, it’s sometimes easier to produce research results by ignoring the social consequences. But, that doesn’t make it ethical.”

The study examined more than 176,000 domains operating on the Tor network between 2018 and 2023. Researchers found that in 2023 alone, one in five of these websites shared CSAM. The material was also easily available through 21 of Tor's 26 most popular search engines, four of which even advertised CSAM on their landing pages.

Juha Nurmi

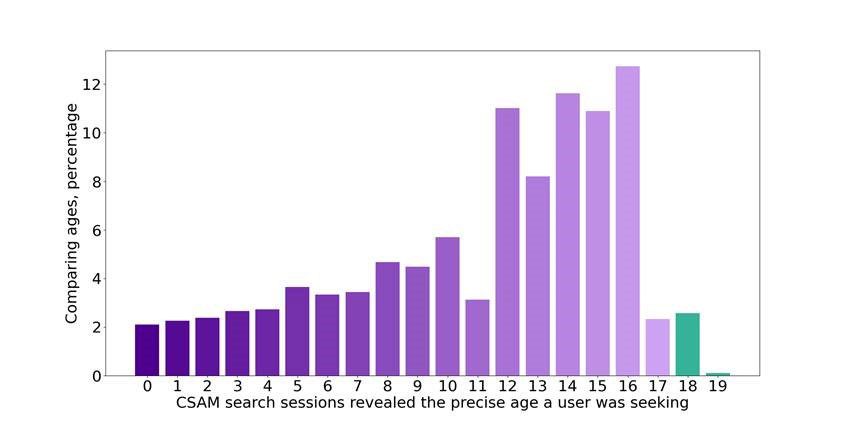

Search sessions for child sexual abuse material (CSAM) on the Tor network reveal the ages of the children users are seeking. Fifty-four percent of searches targeted children between the ages of 12 and 16.

Several Tor search engines, including Ahmia, do try to filter out illegal content. Juha Nurmi, lead author of the paper and a researcher in the Network and Information Security Group at Tampere University in Finland, created Ahmia in 2014.

“I programmed the search engine to automatically filter the search results for child sexual abuse material and redirect users seeking such content to self-help websites,” said Nurmi. “Despite these measures, a significant percentage of users are still trying to find this illegal content through my search engine.”

The researchers analyzed more than 110 million search sessions on the Ahmia search engine and found that 11 percent clearly sought CSAM. As part of the study, the search engine identified searches for CSAM and invited those users to take a 15- to 20-minute survey. Over the course of a year, the "Help us to help you" survey received 11,470 responses.

“There is irony in the research methods,” said Brumley. “The technology that enables CSAM content distribution is the same technology that allows CSAM addicts to respond to this survey designed by trained professionals without threat of persecution. Anonymity is truly a double-edged sword.”

The survey findings reveal that 65 percent of respondents first saw CSAM when they were children themselves. Half of the respondents first encountered it accidentally, indicating that the material is easily available online. Forty-eight percent of users said they want to stop viewing CSAM, and some have sought help through the Tor network and self-care websites.

“Our research finds links between viewing violent material and addiction,” said Nurmi. “Seeking help correlates with the duration and frequency of viewing, as well as an increase in depression, anxiety, thoughts of self-harm, guilt, and shame. Some have sought help, but 74 percent of them have not received it.”

The full study, available in Nature's Scientific Reports, is titled "Investigating child sexual abuse material availability, searches, and users on the anonymous Tor network for a public health intervention strategy." The research team and authors include Brumley, Nurmi, and other researchers from Tampere University, the Protect Children organization, the University of Eastern Finland, and the Spanish National Research Council.

The study combined themes from information security, computer science, criminology, psychology, and society. Peer reviewers particularly noted how well the paper filled research gaps, improved methods, and combined the data into a useful whole.

“I hope the research can evolve and progress to the point that we can actually reach CSAM addicts to connect them—in a personal and tailored way—with trained professionals to get the help that they need and deserve,” said Brumley. “As a society, it’s our obligation to provide that, ultimately to our own benefit in terms of social health and safety. Perhaps privacy-enhancing technologies provide an avenue for that—a safe space for discussion, understanding, and healing.”