Engineering faculty member wins Air Force Research Lab grant to improve neuromorphic computing systems

Work could advance security of both hardware and software in computing systems through collaborations with engineers and social scientists

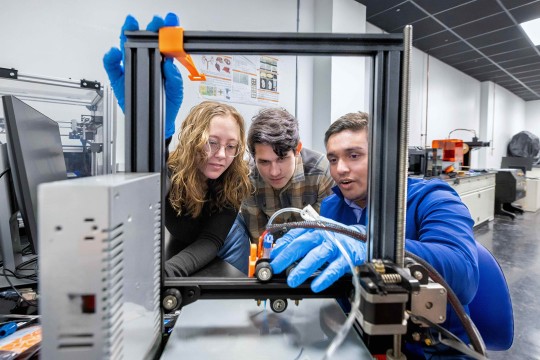

Researchers at Rochester Institute of Technology are discovering ways to improve artificial intelligence (AI) by reducing security vulnerabilities within the hardware and software being used in this growing technical environment.

Faculty researcher Cory Merkel recently received a grant from the Air Force Research Lab to develop more secure AI functionality, including how a system defends against attacks and how, through training, it could learn to anticipate the triggers for possible attacks.

Artificial intelligence is being incorporated into applications such as wearables, mobile phones, robots, unmanned aerial vehicles and satellites. Some of the companies producing these devices are developing custom AI hardware (neuromorphic computing systems) to enable the devices. Questions related to these systems’ security and safety vulnerabilities still need to be addressed. A number of defense strategies are being created to improve AI algorithm robustness, but there has been very little work related to the impact of hardware-specific characteristics on the adversarial robustness of neuromorphic computing platforms, said Merkel.

“There are all these complicated characteristics of hardware that become part of an AI algorithm as soon as it runs on a physical device like a computer chip. What we want to do is understand how those hardware characteristics are going to affect the AI’s safety, security and vulnerability to adversarial attacks,” said Merkel, an assistant professor of computer engineering in RIT’s Kate Gleason College of Engineering.

Merkel and the project team will leverage experts in mathematics and in machine learning modeling to develop the algorithms, and will work with faculty in RIT’s College of Liberal Arts to understand how humans perceive visual stimuli and make sense of this information. The three-year, $400,000 project, “Towards adversarially robust neuromorphic computing,” which began in October, will entail building mathematical models of how the neuromorphic hardware characteristics affect the robustness—the ability to adapt and anticipate changes—of artificial intelligence functions running on that hardware.

“We are going to see an increase in the prevalence of AI in different areas of our lives, especially in safety- or mission-critical areas like transportation, health care and national security, basically places where we don’t want AI to fail or to be vulnerable to certain types of attacks,” said Merkel, whose research focuses on the development of neuromorphic technologies.

Neuromorphic computing is an interdisciplinary approach to developing computing infrastructure based on how the human brain performs its complex functions. It combines elements of neuroscience, nanotechnology and machine learning, with the broad goal of redesigning today’s computing systems to emulate the brain’s biological processing capabilities so that they can assess and integrate ever-larger quantities of data.

This type of processing could improve applications such as real-time environmental sensing, load forecasting for smart grids, speech recognition, anomaly detection and object classification. Humans can understand and interpret different road signs and signals, from stop signs to traffic light colors. Computers must be trained to distinguish among them, and the underlying systems need to be secured from external attacks that could affect how autonomous vehicles, for example, act on those signals.

Merkel sees the differences between human perception and AI, but also understands that the human process can influence the computing processes: “If we can understand how biological intelligence is so robust and how it can easily generalize from just a few different examples, then we can make orders of magnitude improvements by basically copying some of those principles that are in biological systems into AI systems, which would have a huge impact on society, human life. To me that’s a really cool prospect.”

Although these capabilities continue to improve, ensuring that systems have adequate safeguards remains a challenge.

“We really don’t know much about the safety or the security of running AI on neuromorphic custom hardware. Until we do understand that, there’s one of two things that are going to happen,” Merkel said. “Either we’ll be cautious and only adopt neuromorphic hardware after careful consideration of all of these issues, which could impede the progress of AI, or we may adopt these systems prematurely, and we’ll be left with significant vulnerabilities which could affect national security, privacy, and even human life. That’s why I think this is a critical research area.”