Listen with Caution: Spotting Audio Deepfakes

Exhibitor Background: I am an undergraduate researcher at the University of Maryland, Baltimore County, working with a multidisciplinary team of linguists and computer scientists. We present an innovative approach to automatically annotate Expert Defined Linguistic Features (EDLFs) as subsequences in audio time series to improve audio deepfake discernment. In our prior work, these linguistic features (pitch, pause, breath, consonant release bursts, and overall audio quality), labeled by experts over the entire audio signal, were shown to improve AI-based detection of audio deepfakes. We now extend this approach by piloting a method to automatically annotate the subsequences of the time series that correspond to each EDLF.

Visitor Experience: Have you heard of a deepfake? Maybe you aren't familiar with the term, but you are probably aware of AI-generated photos, videos, and even audio. With AI tools, human voices can be faked in seconds, creating opportunities for deception, fraud, and misinformation. Scientists typically try to detect fake audio by refining AI detection algorithms, but adversaries can then use that same technology to generate better deepfakes. Our novel approach draws on sociolinguistics, the study of human language in society, to train listeners to better discern fake audio and to improve the science of deepfake detection. The experience begins by giving visitors the option to listen to audio clips and point out what stands out to them, without necessarily guessing whether each clip is authentic. They are then shown a real-time demonstration of my matrix profiling algorithm, which detects linguistic features that help distinguish real audio from deepfakes. At the same time, visitors will learn briefly about several EDLFs that serve as useful cues when they are unsure whether a clip is authentic.
Attendees will then be offered printed material that goes deeper into identifying audio deepfakes using EDLFs, how these EDLFs appear on waveforms, and my work on auto-annotating audio clips. For our deaf and hard-of-hearing community here at RIT, an accessible option will give visitors waveforms of audio clips and ask them to mark any visual regularities or irregularities. They will then be shown where these irregularities indicate real versus fake speech, using the same demonstration described above along with a printed infographic.
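For readers curious about the matrix profiling mentioned above, the sketch below shows the general idea only; it is not the team's implementation. A matrix profile slides a window of length m over a time series and records, for each window, the z-normalized Euclidean distance to its closest non-trivial match elsewhere in the series. Low values mark recurring patterns (motifs) and high values mark unusual ones (discords). The window length, the exclusion zone of m // 2, the handling of flat segments, and the synthetic test signal are all illustrative assumptions, and how the team maps profile values to specific EDLFs is not described here. This naive version is O(n²·m); real audio would call for a fast library such as STUMPY.

```python
import math
from typing import List, Tuple


def znorm(seq: List[float]) -> List[float]:
    """Z-normalize a window (zero mean, unit std).

    Assumption: near-constant windows (e.g. silence) map to all zeros,
    since their z-normalization is otherwise undefined.
    """
    mean = sum(seq) / len(seq)
    std = math.sqrt(sum((x - mean) ** 2 for x in seq) / len(seq))
    if std < 1e-8:
        return [0.0] * len(seq)
    return [(x - mean) / std for x in seq]


def matrix_profile(ts: List[float], m: int) -> Tuple[List[float], List[int]]:
    """Naive matrix profile of ts with window length m.

    Returns (profile, index): profile[i] is the distance from window i to its
    nearest neighbor, and index[i] is that neighbor's start position.
    Windows starting within m // 2 samples of i are skipped as trivial matches.
    """
    n = len(ts) - m + 1
    if n < 2:
        raise ValueError("time series must be longer than the window")
    windows = [znorm(ts[i:i + m]) for i in range(n)]
    excl = max(1, m // 2)
    profile = [math.inf] * n
    index = [-1] * n
    for i in range(n):
        for j in range(n):
            if abs(i - j) <= excl:
                continue  # overlapping windows would trivially match themselves
            d = math.sqrt(sum((a - b) ** 2 for a, b in zip(windows[i], windows[j])))
            if d < profile[i]:
                profile[i] = d
                index[i] = j
    return profile, index


if __name__ == "__main__":
    # Hypothetical signal: a repeating pattern with one injected anomaly at t = 100.
    signal = [math.sin(2 * math.pi * k / 20) for k in range(200)]
    signal[100] += 5.0
    prof, _ = matrix_profile(signal, 20)
    discord = max(range(len(prof)), key=prof.__getitem__)
    print("most unusual window starts at", discord)
```

The window with the largest profile value is the one least like anything else in the clip; in this toy signal that is a window covering the injected spike, while every purely periodic window has an exact repeat and a profile near zero.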

Christine Mallinson (Co-PI) and Lavon Davis (graduate student) at the "Can you Catch a Deepfake" exhibit, UMBC

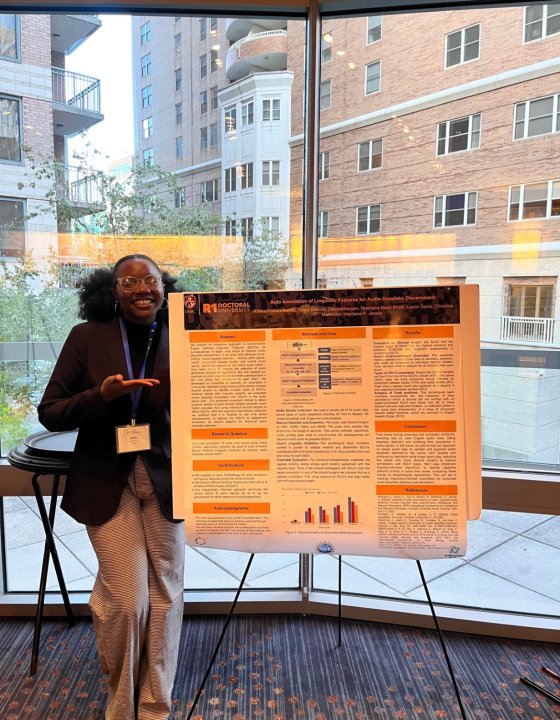

Kiffy Nwosu (CS '27) presenting related research at the AAAI Symposium, Arlington, Virginia

Exhibitor

Kifekachukwu Nwosu

Chimamanda Eze

Organization

I was on co-op at UMBC, supported by National Science Foundation Award #2210011 with an REU supplement (PI: Dr. Vandana Janeja; Co-PI: Dr. Christine Mallinson), University of Maryland, Baltimore County.