Designing Assignments with AI in Mind

Overview

Generative AI is reshaping how students read, write, solve problems, and generate ideas across disciplines. Some faculty try to “AI-proof” their assignments, while others design with AI in mind: clarifying expectations, guiding responsible use, and highlighting the higher-order thinking skills that matter most in their fields. Transparent, well-scaffolded assignments reduce confusion, foster academic integrity, and help students develop durable strategies for learning in a rapidly changing environment.

The sections below offer a concise, practical framework for redesigning or creating assignments that are authentic, inclusive, and aligned with your course goals.

Clarifying Expectations with AI-Use Scales and Syllabus Statements

Students frequently report that inconsistent AI expectations across courses are confusing. Starting with clear guidelines, reinforced by a shared scale and transparent syllabus language, creates stability and increases students’ confidence in how to approach their work.

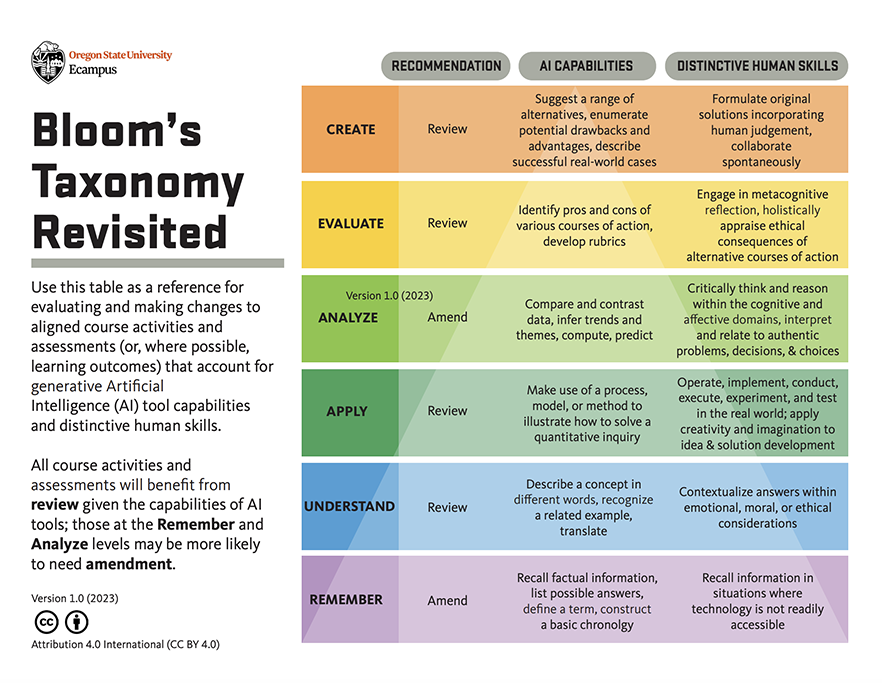

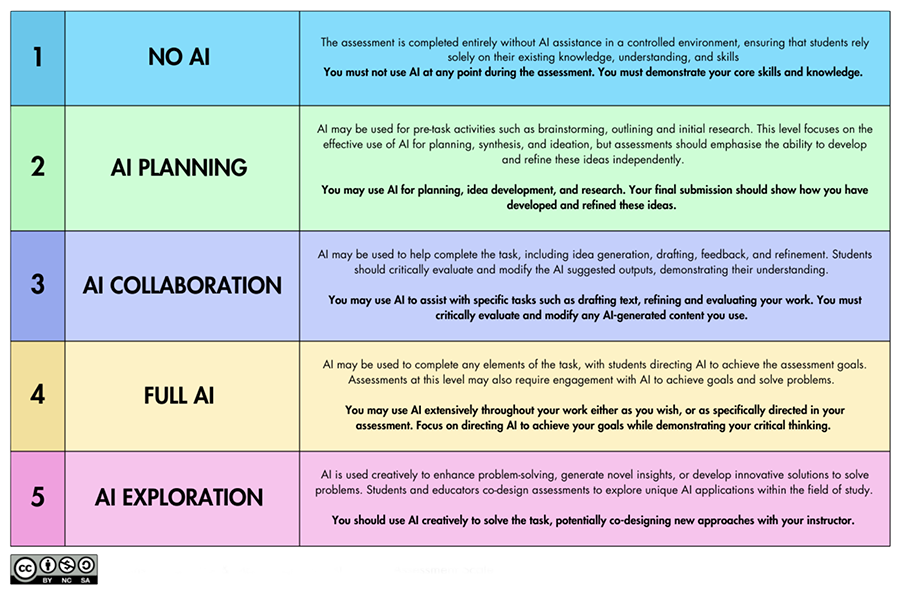

A simple scale, such as the AI Assessment Scale, communicates what kinds of AI use are permitted at both the course and assignment levels. Using the same scale at every level helps students see patterns and understand when and why expectations shift.

A general AI syllabus statement, modeled on an AI-use scale, explains both the expectations and the reasoning behind them. Consider using, or simply thinking through, the Worksheet for Creating Your Generative AI Syllabus Statement. Treat the options below, taken from the Worksheet, as checkboxes: select the sample language you want to use, or write your own (with or without AI, or adapting examples from RIT or elsewhere):

- Students are allowed to use AI tools freely as they choose

- Students are only allowed to use AI tools in limited ways

- Students are not allowed to use AI tools, except when certain conditions are met

- Students are never allowed to use AI tools

- Other

Equally important, consider discussing your AI syllabus statement with students early in the course and, when appropriate, inviting them to help shape it. Doing so can surface mutual assumptions and clarify the learning goals behind AI expectations. Even a brief conversation can help frame the statement as a collaborative learning agreement rather than a fixed set of rules.

Is Using AI Cheating or Plagiarism?

Generative AI complicates traditional notions of cheating and plagiarism. Many students do not see unapproved AI use as “plagiarism” because they are not copying a human author’s work.

Students who grew up with search engines, remix culture, and abundant digital text often do not map AI output onto traditional plagiarism categories. The result is a disconnect between faculty intent and student interpretation.

Additionally, AI does not fit neatly into existing misconduct frameworks. When faculty rely on old categories without rethinking the underlying learning goals, students often respond by using AI covertly rather than transparently.

Academic-integrity scholars Tricia Bertram Gallant, David Rettinger, and Clay Shirky suggest a simpler and more durable definition: cheating is deceiving an instructor into believing one’s mastery is greater than it is. This definition applies regardless of where the help comes from, whether a human ghostwriter, a calculator on a no-calculator test, or ChatGPT.

This framing allows faculty to ask:

- Does this assignment clearly communicate the boundaries of AI use?

- Would a student be deceiving me if they used AI in a way I didn’t intend?

- Have I redesigned the task so that the learning cannot be outsourced?

The goal is not to outlaw tools but to avoid situations where students feel compelled to hide their process.

While many educators wish for a tool that flags AI-generated text as dependably as Turnitin flags copied text, no reliable AI detection tool exists. Research continues to highlight both the unreliability of AI detectors and students’ perception that enforcement is inconsistent or unfair. As noted in our companion webpage Detecting AI Use:

- AI detection tools are unreliable, prone to false positives, and lack transparency

- Students can easily modify AI-generated text to evade detection

- A focus on catching AI use is less effective than redesigning assessments and teaching responsible engagement

Redesigning assignments and setting clear expectations are more effective and equitable approaches.

Applying TILT for Transparent Assignment Design

The Transparency in Learning and Teaching (TILT) framework, which organizes an assignment around its Purpose, Tasks, and Criteria, is especially effective when integrating AI. It reduces cognitive load, clarifies expectations, and supports responsible decision-making.

Purpose: Explain what knowledge or skill the assignment develops and how AI does or does not fit that purpose. Students work more honestly when they understand why boundaries exist.

Tasks: Describe where AI is permitted, optional, or prohibited. For example:

- “You may use AI to generate topic ideas, but you must refine and justify your selection.”

- “Do not use AI to summarize the research; this course emphasizes developing disciplinary reading skill.”

Criteria: Highlight the human skills that AI cannot perform well: reasoning, evidence evaluation, disciplinary insight, originality, or reflection. Clear criteria redirect students toward deeper thinking and away from AI shortcuts.

Assignments that Limit or Are More "Immune" to AI Use

Some assignments are intentionally designed so that GenAI tools cannot easily produce complete or accurate responses. These examples emphasize students’ authentic thinking, personal insight, real-world context, or in-class interaction, making it difficult for AI alone to satisfy the task.

- In-class presentations with live Q&A: Students explain their work and respond to questions that require reasoning grounded in discussion or reading

- Oral exams or debates: Students articulate their understanding in real time, reducing the value of AI output prepared in advance

- Process documentation: Have students submit work with version history or annotated drafts showing how their ideas developed over time, thus capturing thinking that AI cannot reproduce

- Personal reflection on learning: Ask students to connect course concepts to their own experiences or challenges, which AI can’t authentically replicate

- Assignments with real-world or local context: Tasks that require recent or place-specific knowledge (e.g., analyzing a recent local policy change) limit an AI’s ability to generate accurate responses

- Multi-step investigations: Break projects into phases (brainstorming, mapping, interviewing, synthesis) so students’ decisions at each stage shape later work

- Discussion-linked writing: Require students to integrate points from class discussions or collaborative work into a written product

- Group problem solving: Design activities where students must co-construct solutions during live interaction

Assignments that Work with AI

Rather than avoiding AI, some assignments make generative tools part of the learning process, thus compelling students to use AI thoughtfully and then reflect on or refine its output. These approaches foster critical evaluation, ethical use, and human judgment.

- AI-generated drafts for critique: Students ask an AI to produce an initial draft (essay, code, explanation) and then evaluate its strengths and weaknesses in light of course concepts

- Prompt refinement exercises: Have students iteratively refine AI prompts and document how each change affects the output, so that their revisions become evidence of understanding

- AI feedback and revision cycles: Students use AI to get feedback on their drafts, then reflect on that feedback and revise their work accordingly

- Comparative analysis of multiple AI responses: Provide different AI-generated solutions and ask students to compare, defend, or improve upon them

- AI-enhanced creative tasks: Students might use generated images or outlines as starting points and then add human context, evaluation, or extension

- Role-playing AI interactions: Assignments where students debate with or against an AI model on a concept, defend choices, or explain why one interpretation is stronger

Fostering Higher-Order Thinking and Critical Engagement

Prompts that require comparison, judgment, or explanation keep the focus on students’ own reasoning rather than on a finished product.

Sentence frames (e.g., “This evidence suggests ___ because ___”) and analytic prompts support deeper reasoning and inclusive learning.

Ask students to produce models, workflows, recommendations, or other deliverables requiring synthesis and originality. AI can support ideation, but students must supply disciplinary judgment.

References and Resources

Barnard College, Center for Engaged Pedagogy. (n.d.). Integrating generative AI into teaching and learning. https://cep.barnard.edu/integrating-generative-ai-teaching-and-learning

Bertram Gallant, T., & Rettinger, D. A. (2025). The opposite of cheating: Teaching for integrity in the age of AI.

Dawson, D. (2023, November 14). Free OER book: ChatGPT assignments to use in your classroom today. Digital Education Strategies Community. https://desc.opened.ca/2023/11/14/free-oer-book-chatgpt-assignments-to-use-in-your-classroom-today/

Furze, L. (2024, August 28). Updating the AI assessment scale. https://leonfurze.com/2024/08/28/updating-the-ai-assessment-scale/

metaLAB (at) Harvard. (n.d.). AI Pedagogy Project: Assignments. https://aipedagogy.org/assignments/

North Carolina State University, DELTA Teaching Resources. (n.d.). Designing assignments with AI in mind. https://teaching-resources.delta.ncsu.edu/designing-assignments-with-ai-in-mind/

Ohio State University, Teaching & Learning Resource Center. (n.d.). AI teaching strategies: Transparent assignment design. https://teaching.resources.osu.edu/teaching-topics/ai-teaching-strategies-transparent

Shirky, C. (2025, November 3). The post-plagiarism university. The Chronicle of Higher Education. https://www.chronicle.com/article/the-post-plagiarism-university

Transparency in Learning and Teaching Project. (n.d.). Example assignment prompts. https://www.tilthighered.com/resources