Open your eyes and draw at Imagine RIT

Eye-tracking and facial-expression systems allow for hands-free computer use

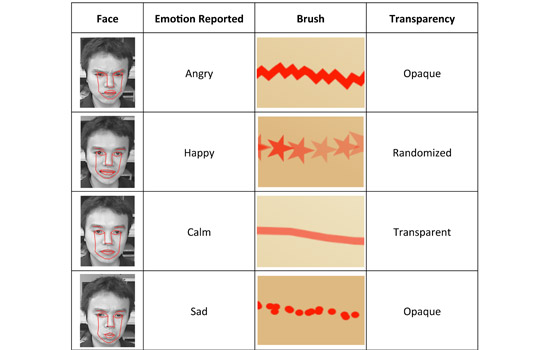

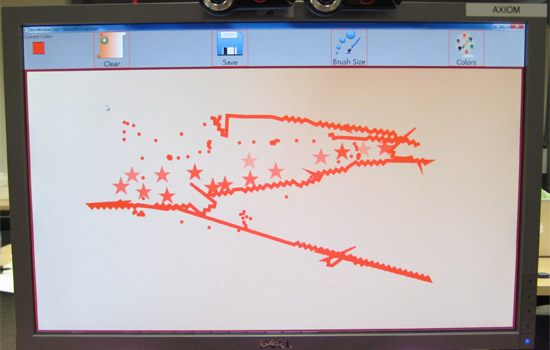

Students and faculty from the Department of Computer Science use eye-tracking and facial-expression systems to call up preselected brush strokes. The facial-expression system detects four different expressions: happy, sad, angry and calm. Expressions are represented by specific brush strokes that allow a user to create their own artwork.

Keep your eyes peeled!

At Imagine RIT, instead of using a mouse to move a computer cursor, you can use your eyes and face.

Students and faculty from the Department of Computer Science have created systems that capture and use facial expressions and eye gaze to create emotionally expressive artwork.

The exhibit, on the first floor of the Gordon Field House and Activities Center, gives a firsthand look at how eye-tracking and facial-expression analysis work.

“Eye tracking uses infrared illumination and infrared cameras to capture video of your pupil and corneal reflection,” says Reynold Bailey, assistant professor of computer science. “The video is analyzed in real time to allow you to interact with the screen.”

Once you sit down at the computer, the facial-expression server also recognizes your lips, eyebrows and other facial features. You can then make a specific face that corresponds with a preselected brush stroke.

“For example, an angry face will create jagged lines and a happy face will create stars,” says Cyprian Tayrien, a computer science graduate student. “Then, using your eyes, you can move across the artwork like a normal cursor.”
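For readers curious how such a pairing might look in code, here is a minimal sketch in Python. It is not the exhibit's software: the function name, the brush identifiers and the list-based canvas are all made up for illustration. Only the angry and happy strokes come from the description above; the brushes for sad and calm are placeholders.

```python
# Hypothetical mapping from detected expression to brush stroke.
# Only "angry" and "happy" follow the article; the other two brush
# names are placeholders, since the article does not describe them.
BRUSH_FOR_EXPRESSION = {
    "angry": "jagged_lines",
    "happy": "stars",
    "sad": "placeholder_sad_brush",
    "calm": "placeholder_calm_brush",
}


def paint(canvas, expression, gaze_points):
    """Stamp the brush for `expression` at each gaze point (x, y)."""
    brush = BRUSH_FOR_EXPRESSION[expression]
    for x, y in gaze_points:
        canvas.append((brush, x, y))
    return canvas


# The face picks the brush; the eyes decide where it lands.
canvas = paint([], "happy", [(10, 20), (12, 24)])
canvas = paint(canvas, "angry", [(40, 40)])
```

In this sketch the expression and the gaze stay separate inputs, which mirrors the division of labor Tayrien describes: one selects the stroke, the other moves the cursor.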

Artists looking to keep evidence of their hands-free drawing can simply hit the save button with their eyes and have the drawing sent to their e-mail address.

Next door to the drawing station, the computer science group will also showcase a gaze-based image retrieval system. As at the drawing station, users work with their eyes alone, this time to search through a database of images. The user can also select different objects or regions in the images by fixating on them.

“Looking at a car in one image will cause other images containing cars to be loaded,” says Srinivas Sridharan, a computing and information sciences Ph.D. student. “This process can continue until the user is satisfied with their image search.”
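The idea can be illustrated with a short Python sketch. It rests on assumptions the article does not confirm: that each image carries labeled rectangular regions, and that a fixation arrives as a single (x, y) point. The file names, labels and function names are invented for the example.

```python
def region_at(regions, gaze):
    """Return the label of the first region whose box contains the gaze point."""
    gx, gy = gaze
    for label, (x0, y0, x1, y1) in regions:
        if x0 <= gx <= x1 and y0 <= gy <= y1:
            return label
    return None


def related_images(database, current, gaze):
    """List other images that contain the object the user is fixating on."""
    label = region_at(database[current], gaze)
    if label is None:
        return []
    return [
        name
        for name, regions in database.items()
        if name != current and any(other == label for other, _ in regions)
    ]


# Hypothetical database: image name -> list of (label, bounding box).
DATABASE = {
    "street.png": [("car", (0, 0, 50, 30)), ("tree", (60, 0, 90, 80))],
    "garage.png": [("car", (10, 10, 70, 60))],
    "park.png": [("tree", (0, 0, 40, 90))],
}

# Fixating inside the car region of street.png pulls up the other car image.
results = related_images(DATABASE, "street.png", (25, 15))
```

Repeating the lookup on each newly loaded image gives the open-ended browsing loop Sridharan describes.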

“Ultimately, systems like this can be used to enable people with disabilities to easily interact with computers using their eyes and facial gestures,” Bailey says.

Exhibitors include professors Reynold Bailey, Joe Geigel and Manjeet Rege. Student exhibitors include Srinivas Sridharan, James Coddington, Junxia Xu, Yuqiong Wang, Bharath Rangamannar, Cyprian Tayrien and Stephen Ranger.
