What the Doctor Prescribes: Customized Medical-Image Databases

RIT professor designs database with physician input and novel eye-tracking techniques

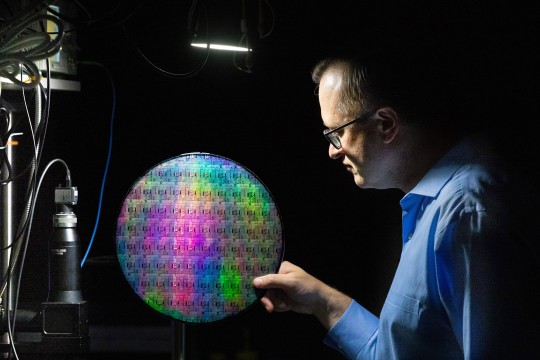

A. Sue Weisler

Anne Haake

Digital archives of biomedical images could someday put critical information at doctors’ fingertips within seconds, showing how computers can improve the practice of medicine. The current reality, however, falls short: databases are nearly overwhelmed by the explosion in medical imaging.

Rochester Institute of Technology professor Anne Haake recently won grants from the National Science Foundation and the National Institutes of Health to address this problem. Haake envisions an image database built on input from the intended end-users and designed from the beginning with flexible user interfaces. Haake and her interdisciplinary team will develop a prototype using input from dermatologists to refine the search mechanism for images of various skin conditions.

“We need to involve users from the very beginning,” says Haake, professor of information sciences and technologies at the B. Thomas Golisano College of Computing and Information Sciences. “This is especially true in the biomedical area where there is so much domain knowledge that it will be specific to each particular specialty.”

Haake understands the real need to make biomedical images useful. She began her career as a developmental biologist before pursuing computing and biomedical informatics. This project combines her two strengths and was inspired by research she conducted while on sabbatical at the NIH National Library of Medicine.

Dr. Cara Calvelli, a dermatologist and a professor in the Physician Assistant program in RIT’s College of Science, has recruited dermatologists, residents and PA students for the project. She is also helping to properly describe the sample images, some of which come from her own collection. “The best way to learn is to see patients again and again with various disorders,” Calvelli says. “When you can’t get the patients themselves, getting good pictures and learning how to describe them is second best.”

The NSF funding will support visual perception research using eye tracking, along with the design of a content-based image retrieval system accessible through touch, gaze, voice and gesture. The NIH funding will support work to fuse image understanding with medical knowledge.

Bridging the “semantic gap” is the challenge facing researchers working in content-based image retrieval, Haake says. The gap lies between the low-level features an algorithm can measure, such as color and shape, and the meaning a person draws from an image. Search functions can go awry when computer-engineered algorithms trip on these nuances and fail to distinguish between disparate objects, such as a whale and a ship. Building a system on the end-user’s knowledge can help prevent such semantic hiccups.

Pengcheng Shi, director for graduate studies and research in the Golisano College, is providing his expertise in image understanding. “For many years computing/technical people have said we can write algorithms such that it will work,” he says. “But people start to realize that machines are not all that powerful. At the end of the day we need to put the human back into it. What are the physicians looking at and how are they looking at it in order to make their decisions?”

A novel aspect of the project uses eye tracking to find out what an expert considers important. Watching where physicians look while making a diagnosis from a picture can reveal the key regions of an image more reliably than asking the same physicians to recall where they concentrated when reaching their conclusions.

“Where people look is not really where people say they look because we’re just not aware of our visual strategies,” Haake says. “Eye tracking is a way to identify the perceptually important areas, what people pay attention to and where they are looking.”

The eye tracking effort is taking place in RIT’s Multidisciplinary Vision Research Laboratory in the Chester F. Carlson Center for Imaging Science under the supervision of co-director Jeff Pelz. “People tend not to pay attention to where they look. People move their eyes 150,000 times a day, but you don’t spend time thinking about where you will move your eyes next and you don’t waste any memory remembering where your eyes have been,” says Pelz, whose lab is part of the College of Science. “You just move your eyes to the next place you need information and a fraction of a second later you move them again.”

The study asks 16 pairs, each made up of a dermatologist and a physician assistant student, to view skin conditions in 50 different images displayed on a monitor. The pairing creates a master-apprentice dynamic.

“If you record the interaction between the master and apprentice while the master is explaining to the apprentice how to do something, it is an excellent way to learn domain knowledge from an expert,” Pelz says. “You get something different and better than if you just listen to two doctors talking to each other or a doctor talking to a layperson.”

A tracking device attached to the monitor records the physicians’ eye movements as they linger on the critical regions of each image. At the same time, vocabulary mined from audio recordings of the physicians’ explanations will form the common search terms in the database.
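To make the idea concrete, the sketch below shows one simple way fixation records could be reduced to “key regions”: it sums how long the viewer dwelt in each cell of a coarse grid laid over the image and returns the most-viewed cells. It is a hypothetical illustration, not the lab’s actual analysis; the `(x, y, duration_ms)` fixation format, the 50-pixel cell size and the function names are all assumptions.

```python
from collections import defaultdict


def fixation_heatmap(fixations, width, height, cell=50):
    """Sum fixation durations per grid cell (hypothetical helper).

    fixations: iterable of (x, y, duration_ms) tuples in image pixel
    coordinates, an assumed format. Returns {(col, row): total_ms}.
    """
    heat = defaultdict(float)
    for x, y, duration in fixations:
        # Skip fixations that land outside the displayed image.
        if 0 <= x < width and 0 <= y < height:
            heat[(int(x) // cell, int(y) // cell)] += duration
    return dict(heat)


def top_regions(heat, k=3):
    """Return the k grid cells with the greatest total dwell time."""
    return sorted(heat, key=heat.get, reverse=True)[:k]


# Made-up fixations on a 640x480 image; the last one falls off-screen.
fixations = [(120, 80, 300), (130, 90, 450), (400, 300, 200),
             (125, 85, 250), (700, 50, 100)]
heat = fixation_heatmap(fixations, width=640, height=480)
print(top_regions(heat, k=2))  # [(2, 1), (8, 6)]
```

Summing dwell time rather than counting fixations favors the regions where an expert lingered; a real analysis would likely also have to account for tracker calibration error and smooth across cell boundaries.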

Identifying the relevant features in the images provided by Calvelli and Logical Images Inc., a Rochester-based company, will help Haake’s team improve the accuracy and efficiency of retrieving images from the database. Guided by the eye-tracking data, the algorithms will compare images for similarities and differences in the features the dermatologists focused on during the observations: subject matter, color, contrast, size and shape.
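A minimal sketch of how such a comparison might rank database images against a query, assuming each image has already been reduced to a few numeric scores (hypothetical color, contrast, size and shape values) and that the eye-tracking study yields a weight for each feature. Weighted Euclidean distance is a common generic choice in content-based image retrieval, not necessarily the one Haake’s team uses; all names and numbers are illustrative.

```python
import math

# Hypothetical feature set; real systems use far richer descriptors.
FEATURES = ("color", "contrast", "size", "shape")


def weighted_distance(a, b, weights):
    """Weighted Euclidean distance between two feature dicts."""
    return math.sqrt(sum(weights[f] * (a[f] - b[f]) ** 2 for f in FEATURES))


def rank_similar(query, database, weights, k=2):
    """Return the names of the k database images closest to the query."""
    scored = sorted(database.items(),
                    key=lambda item: weighted_distance(query, item[1], weights))
    return [name for name, _ in scored[:k]]


# Assumed weights, e.g. derived from how long experts dwelt on each cue.
weights = {"color": 0.4, "contrast": 0.1, "size": 0.2, "shape": 0.3}
database = {
    "lesion_a": {"color": 0.8, "contrast": 0.5, "size": 0.3, "shape": 0.6},
    "lesion_b": {"color": 0.2, "contrast": 0.9, "size": 0.7, "shape": 0.1},
    "lesion_c": {"color": 0.6, "contrast": 0.4, "size": 0.4, "shape": 0.5},
}
query = {"color": 0.75, "contrast": 0.45, "size": 0.35, "shape": 0.55}
print(rank_similar(query, database, weights))  # ['lesion_a', 'lesion_c']
```

Raising a feature’s weight makes mismatches on that feature count more heavily, which is one way expert attention could shape what the system treats as “similar.”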

The efforts of three graduate students are instrumental to the project. Rui Li, a doctoral student in computing and information science, writes algorithms that search for the important features identified in the eye-tracking data. Sai Mulpura and Preethi Vaidyanathan, who are pursuing a master’s degree and a doctorate, respectively, in imaging science, work in the Multidisciplinary Vision Research Laboratory, integrating the eye-tracking data and mining the audio files.

“We will fuse all these data and find a way with one single image to find a number of images that look alike based on these descriptions,” says Vaidyanathan.
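One common way to fuse visual and verbal evidence, sketched here as an assumption rather than the team’s method, is late fusion: compute a visual similarity score and a text similarity score separately, then blend them. In this hypothetical example the text score is the Jaccard overlap between descriptive terms attached to the query and to a candidate image, and `alpha` sets how much weight the visual score receives.

```python
def jaccard(terms_a, terms_b):
    """Overlap between two term sets: intersection size / union size."""
    a, b = set(terms_a), set(terms_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def fused_score(visual_sim, query_terms, image_terms, alpha=0.5):
    """Blend a visual similarity in [0, 1] with term overlap (hypothetical)."""
    return alpha * visual_sim + (1 - alpha) * jaccard(query_terms, image_terms)


# Made-up query terms and candidates: (visual similarity, descriptive terms).
query_terms = {"scaly", "erythematous", "plaque"}
candidates = {
    "image_1": (0.8, {"scaly", "plaque", "silvery"}),
    "image_2": (0.9, {"vesicular", "crusted"}),
}
ranked = sorted(
    candidates,
    key=lambda name: fused_score(candidates[name][0], query_terms,
                                 candidates[name][1]),
    reverse=True,
)
print(ranked)  # ['image_1', 'image_2']
```

Here `image_2` looks slightly more similar by pixels alone, but `image_1` ranks first once the shared descriptive vocabulary is taken into account.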

Haake envisions the database as a model for similar applications in fields struggling to make use of vast amounts of digital imagery.

“This is very specialized for dermatology but the one thing we want to establish is that this is maybe a better paradigm for developing systems in terms of involving the end-user in the development of these systems and some of the methodologies,” Haake says. “Hopefully, some of the approaches where we use the domain expert will lead to more automated systems. When you have tens of thousands of images, you can’t sit down and eye track every situation.”