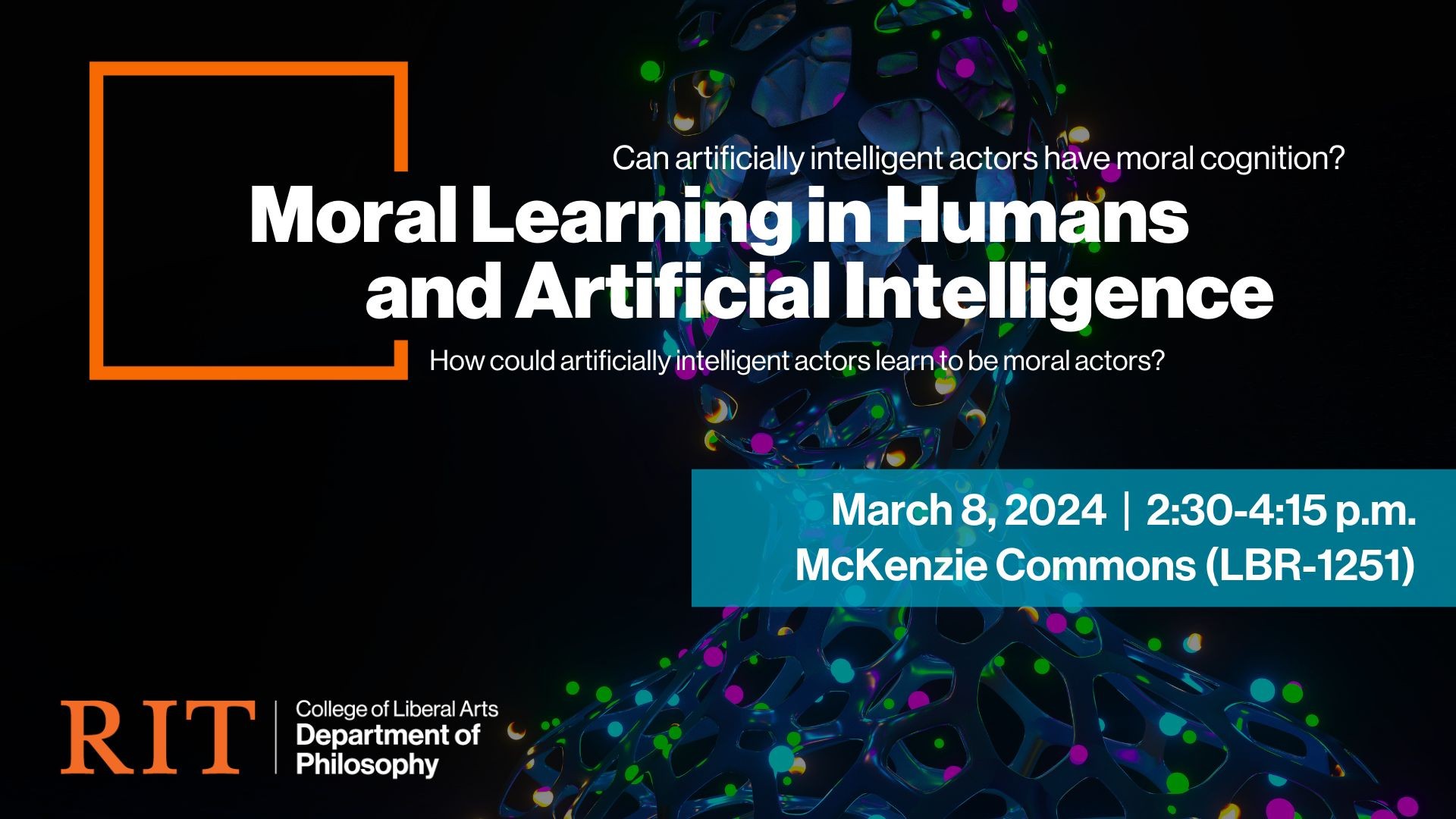

Moral Learning in Humans and Artificial Intelligence

As technology continues to develop, the question arises of how it will transform not only our everyday lives but our moral lives as well. The issue becomes more pressing as artificial actors become more sophisticated and more human-like in their capacities. Two questions follow: can artificially intelligent actors have moral cognition, and how could they learn to be moral actors? I propose an avenue for making sense of both questions using an ecological theory of moral learning. The theory holds that moral learning occurs as a dynamic process of interaction between an agent and their environment. The learning occurs in two basic steps: first, agents learn to detect the features of the environment that indicate opportunities for moral behavior; second, agents learn to act appropriately upon those opportunities. I argue that the ecological theory of human moral learning not only explains moral perception and action but can also be used to teach artificially intelligent actors to acquire human-like moral cognitive capabilities.

Event Snapshot

When and Where

Who

Open to the Public

Interpreter Requested?

No