Humans are the nuts and bolts of RIT robotics research

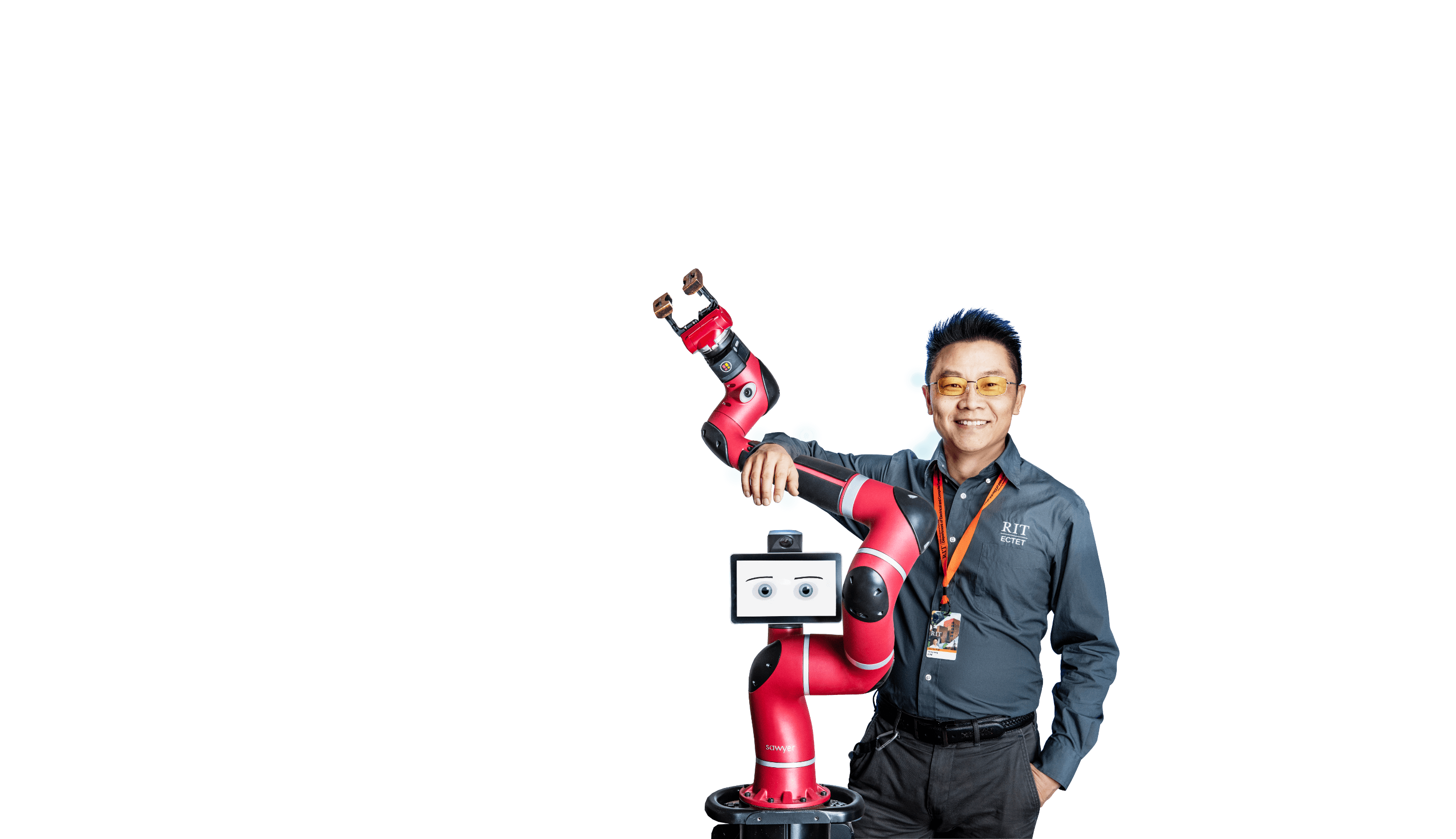

Some of the RIT researchers pushing the boundaries of human-robot interaction include, from left to right, Ferat Sahin, Karthik Subramanian, Jamison Heard, and Yangming Lee.

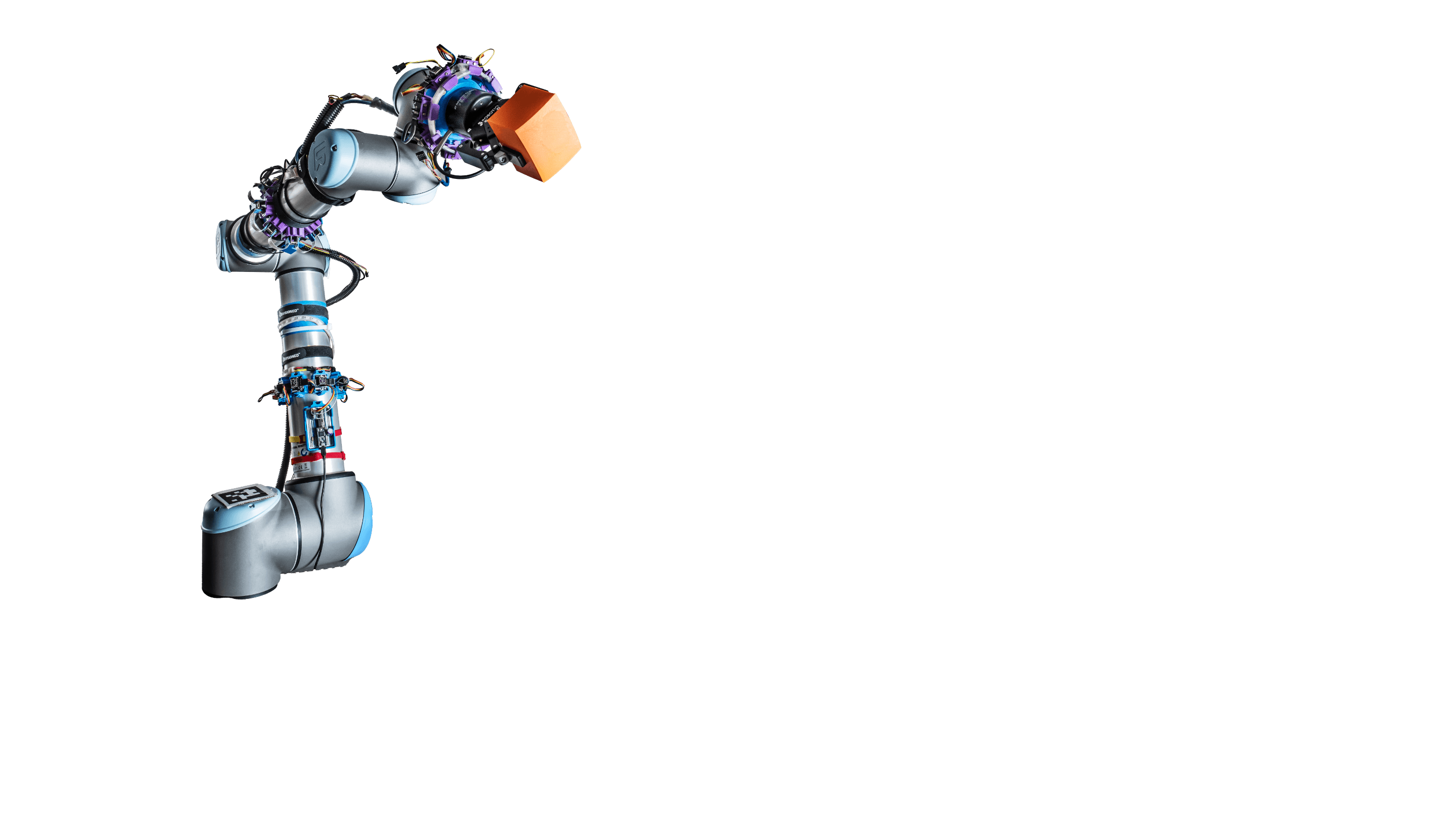

In one campus laboratory, electrical engineering doctoral student Karthik Subramanian adds facial recognition and heart rate bio-signals into the mind of a 9-foot-tall robot.

In another workshop, faculty researcher Jamison Heard is making manufacturing robots into better partners for people who are deaf and hard of hearing.

Additionally, researcher Yangming Lee is improving the internal systems used to track tissue changes in image-guided surgical robots.

At RIT, robots are learning to read the room—especially rooms with humans.

Robots work with individuals everywhere, from storerooms to operating rooms. These robots can see pupils dilate, detect sweat on a brow through biosensors, and perceive heart rates going up. Using this bio-information to adapt to humans—rather than the other way around—robots are becoming sophisticated enough to predict behaviors and act on them.

Improved communication between robots and people is part of the human-centered philosophy that anchors much of RIT’s work in robotics.

“Industry wants robot systems that work collaboratively with humans, that are safer and have more flexibility in how they interact together to solve problems no matter what field,” said Ferat Sahin, department head of RIT’s electrical and microelectronic engineering programs. “We are teaching robots to understand human qualities. Our students use this information to build solutions for people and the work they are doing.”

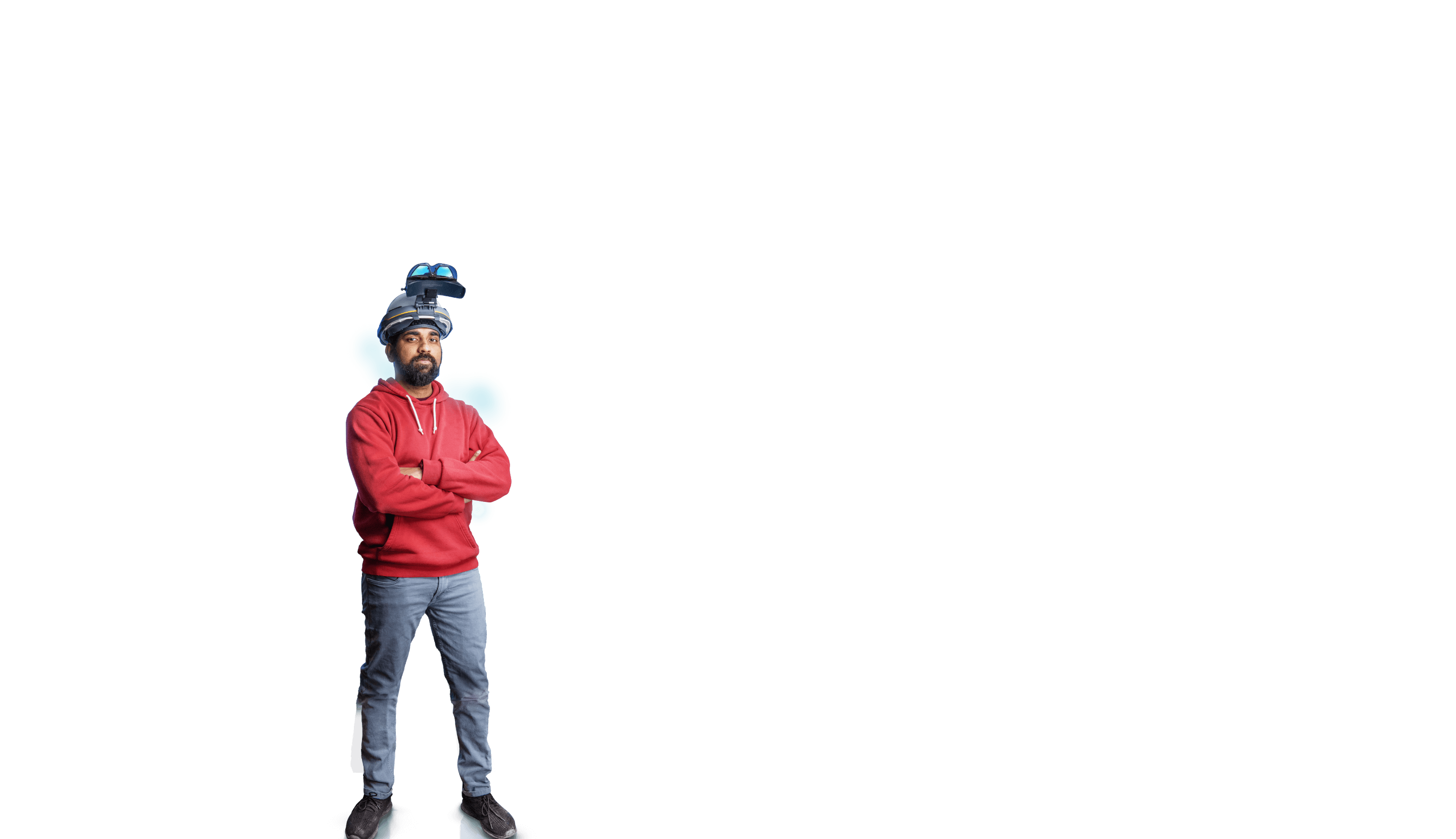

Doctoral student Karthik Subramanian wears augmented-reality goggles that create a digital twin of the environment and display information to help keep people safe around collaborative robots.

Aligning with industry

RIT’s work with robotics began decades ago with coursework in mechanical functions. Now, with a human-centered design approach, students and researchers are bringing together both technical and collaborative options in robotics.

This human-centered approach means providing robots with the intelligence to make critical decisions that do not harm people in the workplace or impede manufacturing processes.

Today, RIT has dozens of undergraduate and graduate degree programs in robotics that now incorporate vision systems, advanced automation, sensor technology, and control systems.

Robotics content is available through minors and concentrations in each of RIT’s colleges, and many courses are open to students across campus who are not engineering or computing majors.

Students are learning how these mechanics and foundational technologies can be added to robot systems and used in different sectors from manufacturing to healthcare, where reading the environment may give a company an edge.

Robert Garrick, head of the Department of Manufacturing and Mechanical Engineering Technology, said industry professionals are teaching courses in everything from consumer-packaged goods to aerospace robotics at RIT.

“Change is driven by workforce and marketplace demands and the growth of machine learning technologies,” Garrick said. “This is where industry is today and where our students need to be. This translates to job opportunities and placements.”

RIT graduates are taking their robotics skills to places like Tesla, Amazon, Boeing, and Yamaha.

Additionally, early research from Sahin’s lab has contributed to International Organization for Standardization (ISO) standards for collaborative robots. By integrating behavioral cues with spatial features, his group is advancing the safety and efficiency of human-robot collaboration.

“Robots today are more electrical than mechanical,” said Sahin, a leader in the field of collaborative robots and Industry 5.0, a concept that includes the use of autonomous robots in smart manufacturing.

“We are working to assess a robot’s awareness of humans because its behaviors must change to optimize its interactions.”

Yangming Lee, an assistant professor in the College of Engineering Technology, is leading a research project to improve surgical robots’ tracking performance, specifically focusing on endoscopic images to track bone removal and soft tissue changes in sinus and cranial areas.

Applications with impact

To develop the best human-robot partnerships, RIT researchers are refining how robots measure and detect signals from humans.

For his Ph.D. project, Subramanian is adding facial recognition information into the AI system for a UR-10 collaborative robot. He has developed a way to determine human emotion from facial images of people working with robots.

“Some robots are only programmed to pick up objects and place them—a single task,” said Subramanian. “These simple robots have their place in industry—they are fast and precise—but are not suited to work too close to people. That is changing. A robot that can sense physiological signals from humans can help make human-robot collaboration safer.”

The challenge, he said, lies in ensuring that robots can make real-time decisions. Robots can act autonomously based on human physiological signals, but it can take months to perfect AI models and run them efficiently.

Heard, an assistant professor of electrical engineering, is teaching robots to read a person’s anxiety through sensor readings—which are essentially emotional cues—that come from monitoring people in lab or workplace settings.

Cues are translated into electronic signals for a robot to recognize and act on. Sensors must provide data to the robot system in less than 8 milliseconds, comparable to the time it takes humans to make decisions.

“And you may wonder, why does the robot need to recognize anxiety?” Heard said. “Robots deal with rational agents, but it is difficult to predict what a human is going to do. Having this information may provide for much more natural and improved collaboration.”

Heard is partnering with Shannon Connell, a lecturer in the Department of Visual Studies at the National Technical Institute for the Deaf, to help robots and deaf and hard of hearing teammates work better together.

The project is important because in manufacturing, where many robots are being used, nearly 18 percent of individuals employed are deaf or hard of hearing, according to the Centers for Disease Control and Prevention.

Heard said teams are built on fluency, adaptability, effective communication, and trust.

“We found generally that individuals who were deaf or hard-of-hearing were more in sync with the robot, which illustrates a better team dynamic,” he said. “We are diving more into this data to determine if it is a cultural aspect so we can put results into context.”

RIT’s robotics researchers are also making a difference in healthcare.

Today’s surgeons rely on robotics and imaging systems, as well as their operating skills, to work efficiently. Using ultra-thin, articulated probes best suited for delicate areas, robots can provide better accuracy for less invasive surgeries.

“The core of robotics is to improve the quality of human life,” said Lee, assistant professor in the Department of Electrical and Computer Engineering Technology. “Although surgical robotic technology has rapidly advanced, current robotic surgeries still solely depend on the operation of doctors and do not fully leverage the advantages of robots in terms of stability, precision, and reliability. How to enhance collaboration between surgeons and robots for improving surgical outcomes is a challenge we are committed to addressing.”

Lee’s work using advanced tracking systems is allowing surgeons to reach areas once thought impossible to reach. These systems can improve precision in resection (surgery to remove tissue or part or all of an organ).

And more advancements are on the way. Lee was awarded a National Institutes of Health grant to dynamically track surgical modifications and deformations to enable real-time planning for supervised autonomy in robotic surgeries.

“Hospitals are pushing to train surgeons with robots,” Lee said. “Our project will overcome the barrier of robot-surgeon collaborations. This is a way to bridge engineering and medicine.”