Annual Report

DIRS Annual Report 2024-2025

Foreword

As the 2024–2025 fiscal year draws to a close, the members of the Digital Imaging and Remote Sensing (DIRS) Laboratory can look back on another wonderful, productive, and impactful year. As has been true throughout our history, we continue to evolve in response to changes in our field — not necessarily in the problems we face, but in how we choose to approach them. We’re seeing especially rapid and exciting developments across the landscape: the explosive growth in commercial satellite constellations, the continued transformation of small unmanned aircraft systems (sUAS), and the meteoric pace of innovation in artificial intelligence. These shifts are reshaping our tools, expanding our reach, and opening new frontiers in remote sensing research.

Education remains front and center for all of us at DIRS. This year, we continued expanding our curriculum with new advanced offerings in two areas: a pair of courses in synthetic aperture radar (SAR) developed and taught by Dr. James Albano, and a focused class on machine and deep learning for remote sensing applications created by Dr. Amir Hassanzadeh. These courses reflect the generous contributions of our talented research staff, who bring their cutting-edge expertise into the classroom, a unique strength of DIRS. We are deeply grateful to them, and I know our students will carry these experiences forward into their careers. I suspect few academic research labs can match the level of commitment to educational excellence we maintain here.

This year also brought transitions in the broader research landscape, with a shift in national leadership following the November election. Despite the uncertainty that often accompanies such changes, we’ve been fortunate to continue advancing our research agenda. Support remains strong in many critical areas — national defense, intelligence, food and agricultural science, and both basic and applied research in remote sensing — and we’re proud to contribute in ways only DIRS can.

We also bid farewell to two outstanding members of our research staff this year. Jacob Irizarry and Serena Flint moved on as several long-term programs came to a close. Jacob is now offering multimedia and management support as an independent consultant, while Serena has taken an exciting next step — rejoining us as a doctoral student in Imaging Science. While we’ll miss them both as staff, we’re thrilled to continue collaborating with Serena in her new role.

As I say every year — and always mean — I am deeply grateful for the support we receive from the many individuals and offices across RIT that make our work possible. I want to especially thank:

- Denis Charlesworth, Susan Michel, Terry Koo, and Maria Cortes in Sponsored Research Services (SRS), who guide us through the life cycle of every project,

- Laura Girolamo and Amanda Zeluff in the Controller’s Office and Sponsored Program Accounting, who keep us in compliance and help us navigate the complex world of grants and contracts,

- Our partners in Human Resources, who invest their time and care to ensure that DIRS remains a supportive, thriving workplace for everyone here.

All of these individuals — and many more I may have missed — are essential to the daily success of this laboratory, and I offer my sincere thanks to each of them.

I also want to thank Dr. Jan van Aardt, Director of the Chester F. Carlson Center for Imaging Science, and Dr. André Hudson, Dean of the College of Science, for their steadfast support and encouragement. We are also fortunate to have strategic guidance from Dr. Ryne Raffaelle, Vice President for Research, and institutional support from President David Munson and Provost Prabu David. Looking ahead, we are excited to welcome incoming RIT President Dr. William Sanders, and we look forward to working closely with him and his team as we continue to elevate research across campus.

Celebrating 40 Years of Innovation: 1984–2024

And finally — forty years. This marks the fortieth year of the DIRS Laboratory at RIT. It’s been an extraordinary journey — filled with exceptional students, staff, faculty, and families — and we are proud to continue a tradition that began decades ago, with some of us here since the very start. We remain committed to upholding and advancing this legacy of excellence in the years to come.

As we celebrate this milestone year, we are also laying the foundation for DIRS's future. If you are an alum or partner interested in contributing to our continued success, please reach out — we would love to hear from you.

The world of remote sensing is evolving faster than ever, and DIRS is evolving with it. With our passionate students, dedicated staff, and supportive partners, we are ready for what’s next.

My warmest regards,

Financial Reports

The DIRS financial reporting for FY25 is summarized below for both the sponsored research and Enterprise Center portions of the laboratory ...

The Digital Imaging and Remote Sensing (DIRS) Laboratory’s fiscal year dashboard reveals a story of resilience, adaptation, and strategic repositioning over the decade from 2016 to 2025. DIRS typically operates within a complex ecosystem of grants and contracts that inherently produce funding cycles. Early in the period, the lab experienced a high point in project volume, reaching 51 active projects in 2017, but this number declined significantly to a low of 34 by 2019. Since then, DIRS has maintained a relatively stable number of projects, fluctuating between 36 and 46 annually. Despite these fluctuations, we remain committed to encouraging faculty and research staff to pursue proposal development, ensuring a steady stream of new funding opportunities to drive future research and innovation.

It is not uncommon for federal budget cycles to introduce variability, as government funding priorities shift with changes in administration and budget constraints, affecting the availability of grants and contracts. However, we are now seeing unprecedented disruptions in funding cycles, which introduce uncertainty and financial strain. The greatest challenge currently facing the Laboratory is the delay of grant and contract awards, and as a result, DIRS is experiencing heightened funding volatility.

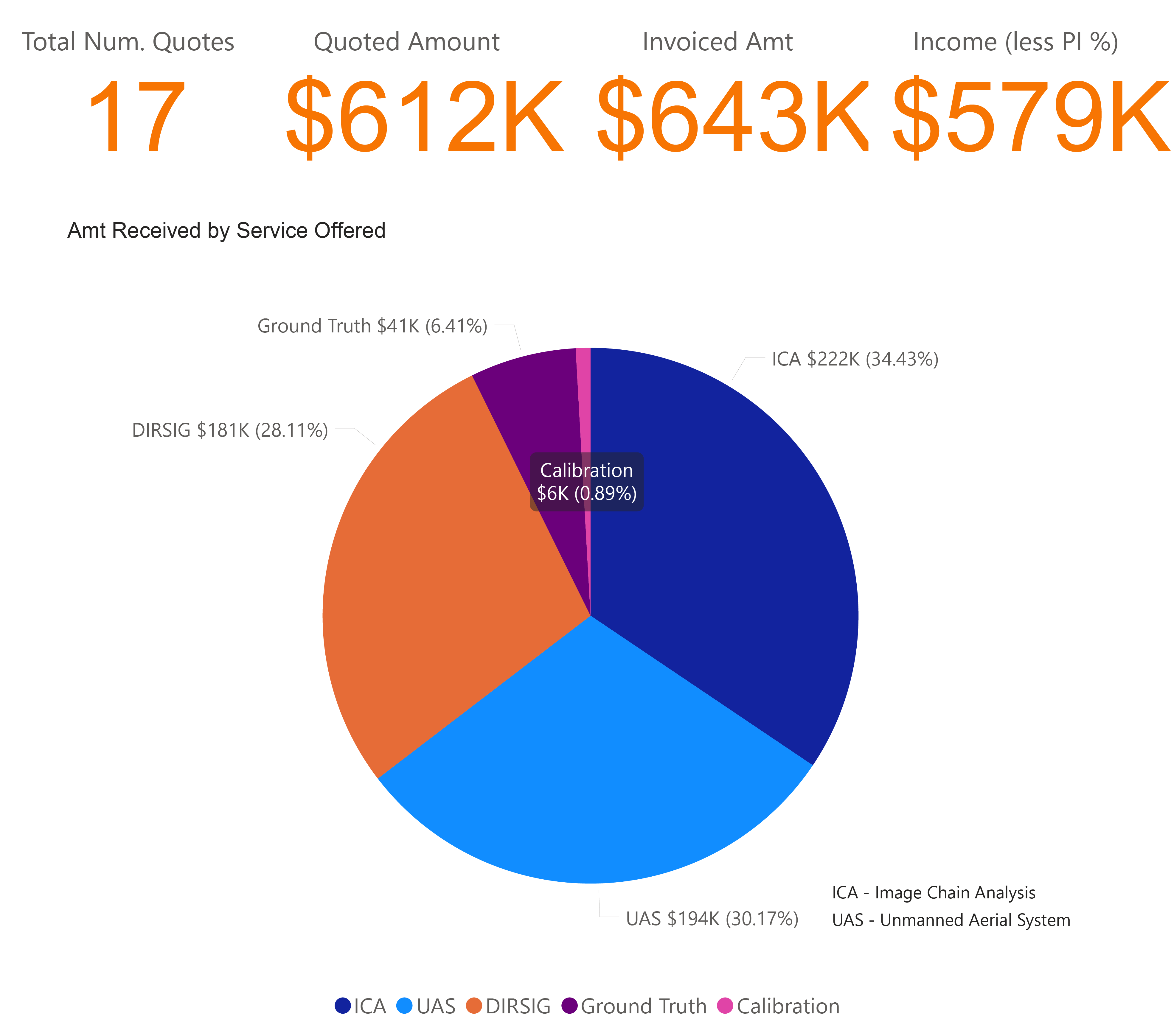

Sponsored research obligations peaked at $5.0 million in 2021 and have since held relatively steady at roughly $3.9 million to $4.4 million per year. The Enterprise Center contributed significantly between 2022 and 2024, with annual revenues close to $900,000, but saw a sharp decline to $579,000 in 2025. Meanwhile, the number of new proposals awarded fell steeply from 18 in the earlier years to a low of five in 2022 before rebounding encouragingly to 12 by 2025. This aligns with another significant trend: although the number of proposals submitted decreased slightly after 2021, the value of submitted proposals surged, peaking at $27 million in 2023 and remaining strong through 2025. This suggests the lab has prioritized high-value, competitive research opportunities over sheer volume.

From an institutional contribution perspective, DIRS has steadily increased its influence within its academic unit. Its share of the Center for Imaging Science (CIS) grew from 58% in 2016 to 74% in 2025, while its presence in the College of Science (COS) has remained more modest and relatively flat, ranging from 26% to 34%. When combined with the Enterprise Center, DIRS's impact on the CIS becomes even more prominent, culminating in a dominant 84% share by 2025.

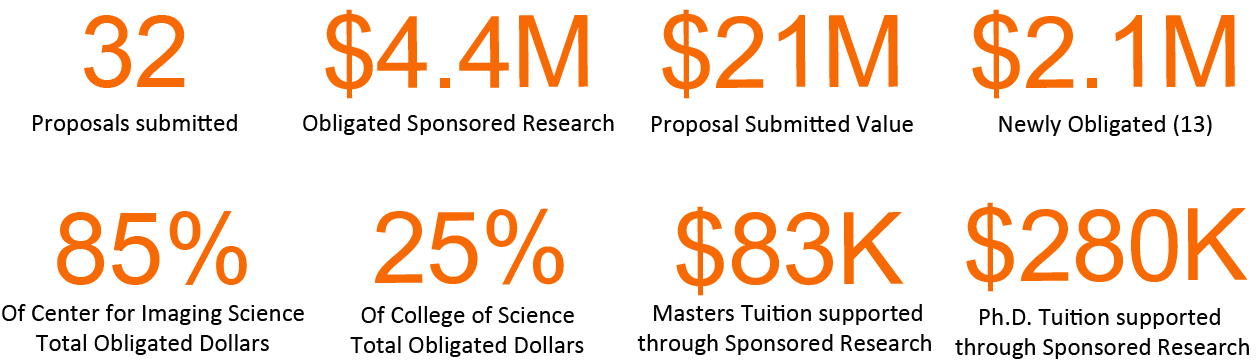

The DIRS Laboratory fiscal year 2025 highlights in proposals, research dollars and graduate student support are shown below.

The DIRS Laboratory is navigating a complex and volatile funding landscape for FY26. While the laboratory has historically managed cyclical funding patterns through strategic diversification and maintaining both undergraduate and graduate student support, the current disruptions in federal funding cycles pose significant challenges. The laboratory's proactive measures, including leveraging discretionary accounts and introducing the DIRSIG™ Alliance fee-for-membership model, are essential but may not fully mitigate financial strain. The laboratory's resilience and commitment to research excellence will be key to overcoming these challenges and ensuring future growth.

The DIRS laboratory, with deep expertise in drones, calibration, imaging and remote sensing technologies, has been offering its services to the public since late 2019. Through the Digital Imaging and Remote Sensing (DIRS) Enterprise Center (EC), customers can hire faculty and staff from our Lab to provide training, consulting, data collection, equipment calibration and more. The DIRS Enterprise Center is one of many RIT Enterprise Centers offering fee-for-service as a vendor. We are excited to work with new collaborators and support a wide range of industries as we continue to provide high-quality data and services.

The full list of services the DIRS Enterprise Center offers includes:

- DIRSIG™ Synthetic Image Generation Services

- UAS / Drone Collection Services

- GRIT – Material Directional Reflectance Measurement Services

- Ground Reference – Field Material Measurement Services

- Spectro-Radiometric Calibration Laboratory Services

- Ground-based LiDAR Measurement Services

- Geometric Calibration Laboratory Services

- General Image Chain Analysis (ICA) and Systems Engineering Services

The DIRSIG™ Alliance

For over 40 years, the DIRSIG™ software — developed at RIT’s Digital Imaging and Remote Sensing laboratory — has been freely available (beyond a training fee) and trusted by academia, industry, and government for remote sensing simulation.

However, evolving funding landscapes make it unsustainable to maintain and advance DIRSIG™ under the existing model. The DIRSIG™ Alliance is our solution: a membership-based community to support the long-term health, growth, and innovation of the DIRSIG™ ecosystem.

Simulation and Modeling

DIRSIG™ development focused heavily on the user experience this past year. With the help of both internal and external collaborators, we made many quality-of-life and efficiency improvements to the graphical tools as well as the input files.

Starlink is a mega-constellation of low Earth orbit (LEO) satellites used to provide broadband internet globally. As of February 2025, there are more than 7,000 Starlink satellites in orbit, with 12,000 planned. The user-downlink waveform transmitted by Starlink is a circularly polarized orthogonal frequency division multiplexed (OFDM) signal with 240 MHz of instantaneous bandwidth. In addition to providing global coverage and nearly continuous illumination over even the most remote locations on Earth, the dense population of satellites allows multiple views of an object to be made over a short period of time. This attribute has the potential to greatly increase detection and identification performance. The focus of this research is to provide an initial assessment of the performance of a passive imaging radar system that exploits the high-bandwidth downlink signal from Starlink satellites and to evaluate its ability to support automatic target recognition (ATR) of military-sized ground vehicles.
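
For a rough sense of scale, the idealized range resolution of a radar is set by its bandwidth. The sketch below assumes the full 240 MHz Starlink downlink bandwidth can be processed coherently and uses the textbook bistatic relation ΔR ≈ c / (2B cos(β/2)), where β is the bistatic angle between the transmitter and receiver lines of sight. The function name is hypothetical, and the result ignores waveform structure, Doppler, and processing losses.

```python
import math

# Speed of light in vacuum (m/s)
C = 299_792_458.0


def bistatic_range_resolution(bandwidth_hz: float, bistatic_angle_deg: float = 0.0) -> float:
    """Idealized bistatic range resolution in meters.

    Assumes the full signal bandwidth is usable; resolution degrades
    as the bistatic angle grows, by the factor 1 / cos(beta / 2).
    """
    beta = math.radians(bistatic_angle_deg)
    return C / (2.0 * bandwidth_hz * math.cos(beta / 2.0))


if __name__ == "__main__":
    for angle in (0, 30, 60, 90):
        res = bistatic_range_resolution(240e6, angle)
        print(f"bistatic angle {angle:>2} deg -> {res:.3f} m")
```

With β = 0 the expression reduces to the familiar monostatic c/(2B), about 0.62 m for 240 MHz.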

Over a number of years, the Bachmann laboratory (GRIT Lab) has been developing and improving radiative transfer and advanced statistical/AI models that can be used to invert key biophysical and geophysical parameters of sediment and vegetation from hyperspectral and multi-sensor imagery, particularly in coastal environments, with an emphasis on wetland ecosystems. Some of this ongoing research has leveraged a past project, supported in part by the National Geographic Society (A. C. Tyler and C. M. Bachmann, investigators), entitled “Improving Estimates of Salt Marsh Resilience and Coastal Blue Carbon,” which focused on study areas at the Virginia Coast Reserve (VCR) Long-Term Ecological Research (LTER) site. In that project, we undertook a series of four extensive remote sensing and field ground-truth data collection campaigns at the VCR LTER over a three-year period (2017–2019). The data collected during these campaigns formed the basis of our past publications on retrieving key soil and vegetation parameters from drone-based and similar near-surface remote sensing platforms that provide very high-resolution imagery, and they continue to support ongoing research in GRIT Lab. Hyperspectral imagery from both drones and our mast-mounted platform (Bachmann et al., 2019), including data from these systems collected at the VCR LTER, has played a foundational role in our models of coastal sediment and vegetation properties, enabling coastal zone mapping products based on radiative transfer as well as statistical/AI models.

This work required the development of a telecentric lens plug-in for DIRSIG™. The plug-in closely mimics DIRSIG™'s OrthoImage plug-in but still allows for the depth-of-focus effects produced by a telecentric lens. A telecentric lens is useful because it reduces parallax and distortion, enhancing measurement accuracy. Once the plug-in was developed, images were produced simulating 3D-printer strands placed in various lattice configurations on a set of aluminum plate backgrounds. Each structure was imaged one layer at a time, with only the layers near the top intended to remain in focus.
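
The practical difference between a conventional (pinhole) camera and an object-space telecentric lens can be sketched with two projection functions. This is a simplified geometric-optics illustration with hypothetical function names and parameters, not the DIRSIG™ plug-in itself: the telecentric projection scales x and y by a constant magnification regardless of depth (so there is no parallax), while defocus is approximated as a blur circle whose diameter grows linearly with distance from the focal plane for an assumed object-space numerical aperture.

```python
import math


def pinhole_project(x: float, y: float, z: float, focal_length: float) -> tuple[float, float]:
    """Perspective projection: image size shrinks as depth z increases (parallax)."""
    return (focal_length * x / z, focal_length * y / z)


def telecentric_project(x: float, y: float, z: float, magnification: float) -> tuple[float, float]:
    """Object-space telecentric projection: orthographic, so depth z is ignored."""
    return (magnification * x, magnification * y)


def defocus_blur_diameter(dz: float, numerical_aperture: float) -> float:
    """Geometric blur-circle diameter (object space) for a point dz from the focal plane.

    Uses the marginal-ray half-angle theta = asin(NA); blur grows as 2 * |dz| * tan(theta).
    """
    theta = math.asin(numerical_aperture)
    return 2.0 * abs(dz) * math.tan(theta)
```

Under this approximation, a lattice layer keeps the same lateral position and size at any depth, but its blur grows with its distance from the focal plane, which is why only layers near focus stay sharp.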

This project is focused on leveraging modeling and simulation of non-resolved space object signatures utilizing the DIRSIG™ software model to better understand spectral signature phenomenology. The non-resolved spectral signatures are typically represented by time resolved spectral light curves which are a function of the space object materials, surface orientations, illumination conditions, and viewing conditions. The DIRSIG™ software model is being used to generate spectral and polarimetric light curve training data for project collaborators to develop machine learning algorithms to characterize specific properties of unknown space objects. This characterization will ideally help identify the fundamental surfaces, shapes, and materials present on the unknown objects and ultimately aid the sponsor with a more thorough understanding of the object’s purpose, operational status, and possibly future intent.
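
As a toy illustration of how a non-resolved light curve depends on illumination and viewing geometry, the sketch below uses the classical phase law for a diffusely (Lambertian) reflecting sphere, normalized to 1 at zero phase angle. Real space objects with facets, specular materials, and polarization require a full simulation such as DIRSIG™; the sphere model and function names here are assumptions for illustration only.

```python
import math


def lambert_sphere_phase(alpha_rad: float) -> float:
    """Relative brightness of a Lambertian sphere at solar phase angle alpha.

    Classical result: [sin(a) + (pi - a) * cos(a)] / pi, equal to 1 at a = 0
    and falling to 0 when the sphere is viewed from behind (a = pi).
    """
    a = alpha_rad
    return (math.sin(a) + (math.pi - a) * math.cos(a)) / math.pi


def light_curve(phase_angles_deg: list[float]) -> list[float]:
    """Sample the relative-brightness light curve over a sequence of phase angles."""
    return [lambert_sphere_phase(math.radians(p)) for p in phase_angles_deg]
```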

This project focuses on the development of a simulated environment to assess the impact of Landsat Next sensor requirements on resulting image quality. Specifically, large-scale scenes are being developed, and the Digital Imaging and Remote Sensing Image Generation (DIRSIG™) model is being used to simulate 26-band proxy data for the Landsat Next Instrument Suite (LandIS) sensors to help inform requirements. The first two years of this effort focused on developing an automated workflow that generates scenes approximately 200 km × 200 km in size to support the simulation of a full Landsat WRS-2 (Worldwide Reference System) path/row.
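
To give a sense of the scale involved, the arithmetic below counts pixels per side and per band for a square scene of approximately 200 km × 200 km at each of the three LandIS ground sample distances. It assumes a perfectly square scene with no overlap or padding, which real WRS-2 path/row footprints do not have; the function name is hypothetical.

```python
def pixels_for_scene(scene_km: float, gsd_m: float) -> tuple[int, int]:
    """Return (pixels per side, pixels per band) for a square scene.

    Assumes a square footprint of scene_km on a side, sampled at gsd_m.
    """
    per_side = int(scene_km * 1000 // gsd_m)
    return per_side, per_side * per_side


for gsd in (10, 20, 60):
    side, total = pixels_for_scene(200, gsd)
    print(f"{gsd:>2} m GSD: {side:,} px per side, {total:,} px per band")
```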

This work is an ongoing follow-on project to an SBIR/STTR (N19A-T010) Phase II Option to extend DIRSIG™'s maritime modeling capability to lidar. Initial work under that program developed a baseline capability to model the interaction of a green bathymetric laser with models of wind-driven water surfaces, distributions of in-water constituents, bottom surfaces, and objects embedded fully or partially within the water. That capability is used to drive simulated data products based on a model of Arete's commercial streak tube imaging lidar (STIL) system or other similar systems. The current work has focused on maturing the model through more robust radiometric calculations, testing, and feedback from the Arete team as the model is compared to real-world data.
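
One basic relationship any bathymetric lidar model must respect is the conversion from the delay between the surface and bottom returns to water depth, which accounts for the slower speed of light in water. The sketch below assumes a nadir-pointing beam (no refraction of the ray path) and a nominal refractive index of about 1.33 for water at green wavelengths; it is an illustration with hypothetical function names, not the DIRSIG™ or Arete implementation.

```python
# Speed of light in vacuum (m/s)
C = 299_792_458.0

# Nominal refractive index of water near 532 nm (assumed; varies with salinity and temperature)
N_WATER = 1.33


def depth_from_return_delay(delay_s: float, n_water: float = N_WATER) -> float:
    """Water depth (m) from the two-way delay between surface and bottom returns.

    Assumes a nadir beam: light travels down and back at c / n, so
    depth = c * delay / (2 * n).
    """
    return C * delay_s / (2.0 * n_water)


def return_delay_from_depth(depth_m: float, n_water: float = N_WATER) -> float:
    """Inverse of depth_from_return_delay: two-way delay (s) for a given depth."""
    return 2.0 * n_water * depth_m / C
```

Under these assumptions, a 10 m depth corresponds to roughly 89 ns of two-way delay.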

In fast-paced research and development environments, collecting and labeling real-world data for machine learning is often impractical and costly due to equipment expenses, limited availability, and environmental variability. With the rapid evolution of UAS technology, from commercial small unmanned aircraft systems (sUAS) to large military unmanned aircraft (UAS) and the dynamic nature and locations of modern warfare, adaptable detection systems are more critical than ever. Distinguishing UAS from natural flyers, such as birds, as well as clutter, such as trees, is essential to reduce false positives and improve the reliability of the detection system. Synthetic data offers a solution by accelerating development and enabling scalable counter-UAS detection models.

The Cape Floristic Region (CFR) in South Africa is a hyper-diverse region encompassing two global biodiversity hotspots threatened by habitat loss and fragmentation, invasive species, altered fire regimes, and climate change. Managing and mitigating these threats requires regularly updated, spatially explicit information for the entire region, which is currently only feasible using satellite remote sensing. However, detecting change signals against the spectral and structural variability of the region's ecosystems is highly challenging, owing to the exceptionally high ecosystem and plant diversity and the confounding influence of structural variables at the leaf, stem, and whole-crown scales. The objectives of this study are to build digital twins of multiple post-burn plots in the CFR by combining trait measurements of fynbos (the dominant biome in the region) with radiative transfer modeling in a biophysically and physically robust simulation environment. These digital twins will be validated using field-collected spectral and structural data. Subsequently, through simulations of various spectral and LiDAR sensors, we aim to inform the design of next-generation remote sensing systems capable of monitoring post-fire recovery in the region. Achieving this goal will significantly improve our understanding of how light interacts with fynbos biophysical traits and inform innovative use of remote sensing data for such highly diverse ecosystems at all scales, from airborne to satellite levels. Overall, this study presents a novel framework for simulating and leveraging remote sensing-based land surface reflectance and structural properties in a region with exceptional ecosystem diversity, which could have important implications for the management and conservation of the Greater Cape Floristic Region (GCFR).

Mangrove forests attempt to maintain their forest floor elevation in response to sea level rise through root growth, sedimentation, resistance to soil compaction, and peat development. Human activities, such as altered hydrology and sedimentation rates, and deforestation, can hinder these natural processes. As a result, there have been increased efforts to monitor surface elevation change in mangrove forests. Terrestrial lidar system (TLS) data were collected from mangrove forests in Micronesia in 2017 and 2019 using the Compact Biomass Lidar (CBL), developed by scientists at the University of Massachusetts Boston and RIT. To assess sediment accretion, ground points were first identified in the lidar data with Cloth Simulation Filtering (CSF) and then filtered by angular orientation, which improved ground detection. Elevation change between the two years was computed by subtracting the Z (height) values of corresponding nearest points, identified with a nearest-neighbor search. Extreme elevation changes, attributed to human interactions or fallen logs, were removed using interquartile range (IQR) analysis. The TLS-measured elevation changes showed 72% consistency with field measurements, with standard errors 10–70 times lower.
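
The differencing and outlier-removal steps described above can be sketched with NumPy. This is a simplified illustration under stated assumptions, not the project's code: ground points are assumed to be already classified (e.g., by CSF), the nearest-neighbor search is assumed to run in the horizontal (x, y) plane by brute force (fine for small clouds; a k-d tree would be used in practice), and the conventional 1.5 × IQR fences are assumed for outlier removal. Function names are hypothetical.

```python
import numpy as np


def elevation_change(ground_t0: np.ndarray, ground_t1: np.ndarray) -> np.ndarray:
    """Z difference for each later-epoch ground point vs. its nearest earlier point.

    ground_t0, ground_t1: (N, 3) and (M, 3) arrays of classified ground points (x, y, z).
    Nearest neighbors are found in the horizontal (x, y) plane by brute force.
    """
    xy0 = ground_t0[:, :2]
    xy1 = ground_t1[:, :2]
    # Squared horizontal distances between every t1 point and every t0 point: (M, N)
    d2 = ((xy1[:, None, :] - xy0[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    return ground_t1[:, 2] - ground_t0[nearest, 2]


def remove_iqr_outliers(values: np.ndarray, k: float = 1.5) -> np.ndarray:
    """Drop values outside [Q1 - k*IQR, Q3 + k*IQR] (e.g., fallen logs, disturbance)."""
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    keep = (values >= q1 - k * iqr) & (values <= q3 + k * iqr)
    return values[keep]
```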

The Digital Imaging and Remote Sensing Image Generation (DIRSIG™) software can generate geometrically and radiometrically accurate light detection and ranging (LiDAR) data, producing 3D structural ground-truth data that would be nearly impossible to collect in complex forest environments. DIRSIG™ was leveraged to understand the nuances of a simulated forest, specifically Harvard Forest in Petersham, MA (Figure 1). The simulated forest included various materials such as bark, leaves, soil, and miscellaneous objects. Simulated airborne and spaceborne LiDAR systems, which are useful for penetrating sub-canopy layers, were configured to collect data over the scene. The resulting data sets provide valuable insights into forest health and sub-canopy structure, with applications in target detection, environmental monitoring, and forest management.

Terrestrial laser scanning (TLS) captures forest structure at centimeter resolution, producing dense 3D point clouds that can inform fine-scale inventory and ecological assessment. This project develops an end-to-end pipeline that converts raw TLS data into actionable structural information. Our field sites span contrasting biomes (Harvard Forest, Massachusetts, and mangrove roots in Palau), providing diverse canopy architectures and acquisition conditions.
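
Voxel-grid downsampling is a common early step in TLS pipelines for thinning centimeter-scale point clouds before further structural analysis. The NumPy sketch below is a generic illustration (the voxel size and function name are assumptions), not necessarily a step in this project's pipeline: it replaces all points that fall into the same cubic voxel with their centroid.

```python
import numpy as np


def voxel_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace the points in each occupied voxel with their centroid.

    points: (N, 3) array of x, y, z coordinates; returns (V, 3) centroids,
    ordered by voxel index.
    """
    # Integer voxel coordinates for every point
    idx = np.floor(points / voxel_size).astype(np.int64)
    # Map each point to a unique-voxel id (reshape guards against NumPy version differences)
    _, inverse = np.unique(idx, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)
    n_vox = int(inverse.max()) + 1
    sums = np.zeros((n_vox, 3))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    counts = np.bincount(inverse, minlength=n_vox)
    return sums / counts[:, None]
```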

Collection and Algorithms

This year's laboratory measurements with our hyperspectral goniometer system (Harms et al., 2017) constituted our third round of measurements in support of the NASA Solar Cruiser program. As the reflectivity control device (RCD) designs have improved, we have continued to measure the newer versions of the RCDs to fully characterize their bidirectional reflectance distribution function (BRDF) over the observation hemisphere for a variety of illumination angles, measuring both polarimetric and unpolarized BRDF for the provided samples in both on and off states.
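
A common way to turn goniometer signals into BRDF values is to ratio the sample signal against a near-Lambertian reference panel of known reflectance measured under the same illumination and viewing geometry; a Lambertian surface of reflectance ρ has a BRDF of ρ/π per steradian. The sketch below assumes that reference-panel approach and also computes the degree of linear polarization (DoLP) from Stokes parameters, as a polarimetric measurement might. Function names are hypothetical, and no goniometer-specific corrections are included.

```python
import math


def brdf_from_reference(sample_signal: float, reference_signal: float,
                        reference_reflectance: float) -> float:
    """BRDF (1/sr) of a sample, relative to a Lambertian reference panel.

    Assumes identical illumination/viewing geometry and detector settings,
    so the signal ratio equals the ratio of reflected radiances.
    """
    return (sample_signal / reference_signal) * reference_reflectance / math.pi


def degree_of_linear_polarization(s0: float, s1: float, s2: float) -> float:
    """DoLP from the first three Stokes parameters: sqrt(S1^2 + S2^2) / S0."""
    return math.hypot(s1, s2) / s0
```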

The Landsat Next Instrument Suite (LandIS) is intended to extend the more than fifty-year record of spaceborne measurements of the Earth’s surface collected by Landsat’s reflective and thermal instruments. The goal of the LandIS mission is to continue the Landsat program's acquisition, archival, and distribution of imagery to characterize decadal changes in the Earth’s surface. LandIS will consist of a constellation of three satellites, which will provide improved temporal revisit (a six-day revisit) for monitoring dynamic changes in the Earth’s surface. The sensor will also represent a significant change in the number of spectral bands compared to its predecessors, Landsat 8/9, which have 11 spectral bands, most at 30-m ground sample distance (GSD). LandIS, by contrast, will have a total of 26 spectral bands at GSDs of 10 m, 20 m, and 60 m. This will result in a significantly higher data volume than its predecessors and will require a more efficient compression algorithm than the one currently implemented for Landsat 9, a lossless compression algorithm based on the Consultative Committee for Space Data Systems (CCSDS) 121.0 Recommended Standard. After exploring available options, CCSDS 123.0-B-2 emerged as the top choice. This open compression standard provides high-performance lossless and near-lossless compression, crucial for maintaining data integrity in scientific applications.
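
The gain from predictive lossless compression can be illustrated with a heavily simplified sketch of the idea behind CCSDS 121.0: predict each sample from the previous one, map the signed residuals to non-negative integers, and entropy-code them with a Golomb-Rice code whose parameter k is chosen to minimize the total length. The real standard codes fixed-size blocks with per-block options and a range-aware residual mapping, and CCSDS 123.0-B-2 adds adaptive prediction across neighboring spectral bands; none of that is modeled here, and the function names are hypothetical.

```python
def map_residual(delta: int) -> int:
    """Map a signed prediction residual to a non-negative integer (zigzag order).

    0, -1, 1, -2, 2, ... -> 0, 1, 2, 3, 4, ...
    """
    return 2 * delta if delta >= 0 else -2 * delta - 1


def rice_length(value: int, k: int) -> int:
    """Bits for a Golomb-Rice codeword: unary quotient + stop bit + k remainder bits."""
    return (value >> k) + 1 + k


def encoded_bits(samples: list[int], bit_depth: int) -> int:
    """Total bits to code samples with a unit-delay predictor and one global Rice k.

    The first sample is stored verbatim; the best k in [0, bit_depth] is chosen.
    """
    residuals = [map_residual(b - a) for a, b in zip(samples, samples[1:])]
    best = min(sum(rice_length(v, k) for v in residuals) for k in range(bit_depth + 1))
    return bit_depth + best


if __name__ == "__main__":
    smooth = list(range(1000, 1064))  # a slowly varying 12-bit signal
    print("raw bits:", 12 * len(smooth), "coded bits:", encoded_bits(smooth, 12))
```

For this smooth 64-sample signal, the 768 raw bits shrink to 201 coded bits; noisier data compress far less.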

This follow-on effort leverages and improves upon previous methodologies developed at RIT to ensure proper calibration of Landsat's operational thermal sensors and validation of the archived higher-level surface temperature (ST) products. An automated workflow has been developed that leverages measurements from NOAA's buoy network, GEOS-5 reanalysis data, and forward modeling to better support calibration of Landsat's thermal sensors. The data created by this workflow are freely available to interested users at https://www.rit.edu/dirs/resources/landsat-thermal-validation. Calibration and validation results of this research effort are presented semi-annually at Landsat Calibration & Validation meetings.
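
Two small calculations sit at the core of this kind of validation: converting at-sensor radiance to brightness temperature with the inverse Planck form used in Landsat metadata, T = K2 / ln(K1 / L + 1), and summarizing the differences between predicted and observed values as a mean bias and RMSE. The sketch assumes the published Landsat 8 TIRS Band 10 constants; the function names and sample values are illustrative, not output of the RIT workflow.

```python
import math

# Landsat 8 TIRS Band 10 thermal constants from the Level-1 metadata
K1_B10 = 774.8853   # W / (m^2 sr um)
K2_B10 = 1321.0789  # K


def brightness_temperature(radiance: float, k1: float = K1_B10, k2: float = K2_B10) -> float:
    """At-sensor brightness temperature (K) from spectral radiance."""
    return k2 / math.log(k1 / radiance + 1.0)


def radiance_from_temperature(temp_k: float, k1: float = K1_B10, k2: float = K2_B10) -> float:
    """Inverse of brightness_temperature: spectral radiance for a given temperature."""
    return k1 / (math.exp(k2 / temp_k) - 1.0)


def bias_and_rmse(predicted: list[float], observed: list[float]) -> tuple[float, float]:
    """Mean bias (observed - predicted) and root-mean-square error."""
    diffs = [o - p for p, o in zip(predicted, observed)]
    bias = sum(diffs) / len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return bias, rmse
```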

Austin Powder manufactures, distributes, and applies industrial explosives for industries including quarrying, mining, and construction, among other applications. Although it is unusual, these massive blasts can release a foreboding yellowish-orange cloud, which is often an indicator of nitrogen oxides (commonly abbreviated NOx). Austin Powder is seeking research into imaging these plumes in order to estimate their concentration (using image processing, machine learning, and drone-mounted sensors) and their volume. We also seek to automatically identify NOx within the plume itself.

It has been reported that explosive ordnance (EO) killed or injured 7,073 people worldwide in 2020 alone. Recent estimates show that more than 100 million pieces of explosive ordnance worldwide remain unaccounted for. In Ukraine, for example, antivehicle and antipersonnel land mines are the leading cause of civilian casualties. Given the large amount of EO still present, it is estimated that, with current technology, removal and clearance of land mines alone could take up to 1,100 years. This is clearly unacceptable, and the problem will become even more pressing once the war in Ukraine draws to a close.

The aim of this project has been to apply a model-based prediction capability to explore spectral imaging system parameter performance sensitivities and trends, with the goal of developing insights into their fundamental limits. During this fourth year of the program, PhD student Chase Canas developed the study framework shown in Figure 1 and applied it to an airborne hyperspectral imaging system detecting subpixel targets against a canonical forest background. Figure 2 shows the resulting detection performance cubes, which capture the dependence of detection probability (as measured by the log-weighted AUC) on system parameters. Canas also developed a method to identify parameter limits as the values that lead to "knees in the curve," beyond which performance changes are minimal. For the airborne case study examined, the most significant parameters and their limits were aerosol visibility (2–6 km), target subpixel percentage (15–35%), and background complexity as measured by the t-distribution degrees-of-freedom parameter (3–5). Future work during the upcoming fifth (and final) year includes performing additional validations through empirical data collections and preparing a version of the performance-limit study software for distribution.
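
One common heuristic for locating a "knee in the curve" is to normalize the curve and pick the point farthest from the straight line joining its endpoints (the idea behind the Kneedle algorithm). The sketch below uses that heuristic; it is an assumption for illustration and not necessarily the method Canas developed, and the function name and sample values are hypothetical.

```python
import math


def knee_index(x: list[float], y: list[float]) -> int:
    """Index of the point farthest from the chord joining the curve's endpoints.

    x must be ascending and y must not be constant; both are normalized to [0, 1].
    """
    xs = [(v - x[0]) / (x[-1] - x[0]) for v in x]
    y_min, y_max = min(y), max(y)
    ys = [(v - y_min) / (y_max - y_min) for v in y]
    dx, dy = xs[-1] - xs[0], ys[-1] - ys[0]
    norm = math.hypot(dx, dy)
    # Perpendicular distance of each point from the endpoint-to-endpoint line
    dists = [abs(dy * (xi - xs[0]) - dx * (yi - ys[0])) / norm for xi, yi in zip(xs, ys)]
    return max(range(len(dists)), key=dists.__getitem__)


if __name__ == "__main__":
    # Hypothetical saturating performance curve (e.g., a detection metric vs. a system parameter)
    param = [0, 1, 2, 3, 4, 5, 6]
    perf = [0.0, 0.6, 0.85, 0.95, 0.98, 0.99, 1.0]
    print("knee at parameter value:", param[knee_index(param, perf)])
```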

Planning is proceeding for ROCX 2025, two weeks of intensive field experiments and data collection scheduled for September 2025.

ROCX has four main objectives:

- Acquire remote sensing data for research purposes from a variety of sensor modalities and platforms coordinated over a defined area for a defined period.

- Include a range of ground object deployments and ground truth collection activities proposed and carried out by research experiment Principal Investigators.

- Process and distribute analysis-ready data sets on an open-access data repository for use by the general remote sensing research community.

- Disseminate research results from the collection through special sessions at major conferences and peer-reviewed journal special issues.

This research project seeks to address the challenging problem of sharpening remotely sensed hyperspectral imagery with higher-resolution multispectral or panchromatic imagery, with a focus on maintaining the radiometric accuracy of the resulting sharpened imagery. Traditional spectral sharpening methods focus on producing results with visually accurate color reproduction, without regard for overall radiometric accuracy. However, hyperspectral imagery (HSI) is typically processed through (semi-)automated workflows to produce various downstream products, so maintaining radiometric accuracy is a key goal of spatial resolution enhancement of HSI. Here, our focus will be on characterizing the issues related to accurate sharpening, in particular understanding how a scene's spatial-spectral content and complexity affect the ability to sharpen a particular image or portion of an image.
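
One way to see what radiometric accuracy means for sharpening is Wald's consistency property: a sharpened image, degraded back to the original resolution, should reproduce the original hyperspectral radiances. The NumPy sketch below uses a smoothing-filter-based intensity modulation (SFIM) style ratio with block-mean smoothing, which satisfies that property exactly by construction. It assumes co-registered inputs, an integer resolution ratio, and a strictly positive panchromatic band; the function names are hypothetical, and this is an illustration rather than this project's method.

```python
import numpy as np


def block_mean(img: np.ndarray, f: int) -> np.ndarray:
    """Average non-overlapping f x f blocks (works for 2-D or (rows, cols, bands) arrays)."""
    h, w = img.shape[:2]
    return img.reshape(h // f, f, w // f, f, *img.shape[2:]).mean(axis=(1, 3))


def upsample(img: np.ndarray, f: int) -> np.ndarray:
    """Nearest-neighbor upsampling by an integer factor f."""
    return np.repeat(np.repeat(img, f, axis=0), f, axis=1)


def sfim_sharpen(hsi: np.ndarray, pan: np.ndarray, f: int) -> np.ndarray:
    """Inject pan spatial detail as a ratio to its block mean.

    hsi: (h, w, bands) low-resolution cube; pan: (h*f, w*f) high-resolution band.
    Each sharpened block averages back to the original HSI pixel.
    """
    pan_low = upsample(block_mean(pan, f), f)
    ratio = pan / pan_low
    return upsample(hsi, f) * ratio[..., None]
```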

This project provides science systems engineering support for the NASA Landsat program. The PI serves as the deputy instrument scientist for the Landsat Next project, a role that involves acting as an interface among the instrument vendor, NASA, and USGS project teams for science-related requirements. Team members ensure that raw pixel data from the detectors are properly recorded, processed, compressed, downlinked, verified, and calibrated on the ground. The PI also serves on the Landsat Calibration/Validation team and is responsible for continuing on-orbit characterization and calibration of the Landsat 8 / Thermal Infrared Sensor (TIRS) and the Landsat 9 / Thermal Infrared Sensor 2 (TIRS-2) instruments. Team members also provide technical expertise and guidance on Landsat instrument technology development.

The in-flight characterization and calibration of NASA’s Lucy Ralph (L’Ralph) instrument is essential to a successful science mission. The L’Ralph instrument provides spectral image data of the Jupiter Trojan asteroids from visible to mid-infrared wavelengths from two focal planes sharing a common optical system. The Multispectral Visible Imaging Camera (MVIC) acquires high-spatial-resolution, broadband multispectral images in the 0.4 to 0.9 micron region. The Linear Etalon Imaging Spectral Array (LEISA) provides high spectral resolution image data in the 1 to 3.8 micron spectral range.

The Lucy observatory is currently in flight (phase E) after a successful launch in October 2021 and is en route to the Jupiter Trojan swarms via multiple Earth Gravity Assists (EGAs). The overall objective of this work is to assist in the continuing characterization and radiometric calibration of the L’Ralph instrument through processing and analysis of in-flight image data. These image datasets are assessed for radiometric and spatial quality, and calibration algorithms are updated as needed to correct any artifacts or radiometric errors found during these assessments.

Quantitative remote sensing using thermal infrared imaging from small unmanned aircraft systems (sUAS) often requires calibration reference targets to correct for atmospheric attenuation. These targets, such as controlled-temperature water baths, are complex to set up, maintain, and monitor, making their use impractical in many field applications. One possible solution ...
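
For context on how such reference targets are conventionally used, an empirical-line correction fits a linear gain and offset between the temperatures the sUAS camera reports over the targets and their known (contact-measured) temperatures, then applies that fit to the whole image. The sketch below works directly in temperature for simplicity (a more rigorous approach fits in radiance), and the function names and target values are hypothetical.

```python
def fit_empirical_line(measured: list[float], reference: list[float]) -> tuple[float, float]:
    """Least-squares gain and offset so that reference ~= gain * measured + offset."""
    n = len(measured)
    mx = sum(measured) / n
    my = sum(reference) / n
    sxx = sum((x - mx) ** 2 for x in measured)
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, reference))
    gain = sxy / sxx
    return gain, my - gain * mx


def apply_empirical_line(value: float, gain: float, offset: float) -> float:
    """Correct a sensor-reported value using the fitted line."""
    return gain * value + offset


if __name__ == "__main__":
    # Hypothetical cool and warm water-bath targets: camera-reported vs. contact-measured (deg C)
    gain, offset = fit_empirical_line([18.0, 38.0], [20.0, 42.0])
    print(f"gain={gain:.3f}, offset={offset:.3f}, corrected 28.0 -> {apply_empirical_line(28.0, gain, offset):.2f}")
```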

The annual value of U.S. grape production exceeds $6 billion across as many as 1 million acres (>400,000 ha). Grapevines require both macro- and micro-nutrients for growth and fruit production. However, inappropriate application of fertilizers to meet these nutrient requirements could result in widespread eutrophication through excessive nitrogen and phosphorus runoff, while inadequate fertilization could lead to reduced grape quantity and quality. It is in this optimization context that unmanned aerial systems (UAS) have come to the fore as an efficient method to acquire and map field-level data for precision nutrient applications. We are working in coordination with viticulture and imaging teams across the country to develop new vineyard nutrition guidelines, sensor technology, and tools that will empower grape growers to make timely, data-driven management decisions that consider inherent vineyard variability and are tailored to the intended end-use of the grapes. Our primary goal on the sensors and engineering team is to develop non-destructive, near-real-time tools to measure grapevine nutrient status in vineyards. The RIT drone team is capturing hyperspectral and multispectral imagery over both Concord (juice/jelly) and multiple wine variety vineyards in upstate New York.
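
Spectral indices are one common starting point for relating UAS imagery to plant status. The sketch below computes two widely used normalized-difference indices, NDVI (near-infrared vs. red) and NDRE (near-infrared vs. red edge), from reflectance arrays. The indices or models this project actually uses for grapevine nutrient status are not stated here, so treat these as illustrative assumptions.

```python
import numpy as np


def normalized_difference(a, b) -> np.ndarray:
    """(a - b) / (a + b), returning NaN where the denominator is zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.full_like(denom, np.nan)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def ndvi(nir, red) -> np.ndarray:
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)


def ndre(nir, red_edge) -> np.ndarray:
    """Normalized Difference Red Edge index, commonly used as a chlorophyll/nitrogen proxy."""
    return normalized_difference(nir, red_edge)
```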

The objectives of this project are to i) better understand the correlations between spectral reflectance and structural metrics, as well as the underlying structure-trait cause-and-effect relationships; ii) propose a data fusion approach (LiDAR + hyperspectral) to trait prediction; and iii) apply the fusion approach to mitigate scaling issues across the leaf, stand, and forest levels. We are currently focusing on how variation in leaf angle distributions (LAD) affects spectral and structural metrics. Leaf angle is a key factor in determining the arrangement of leaves and directly affects forest structural metrics such as leaf area index (LAI), which in turn is an important variable for a variety of ecological processes, including photosynthesis and carbon cycling.
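To illustrate why leaf angle matters for LAI, the sketch below uses the textbook Beer–Lambert gap-fraction model, P(θ) = exp(−G(θ)·Ω·LAI / cos θ), where G(θ) is the mean projection of unit leaf area for a given LAD and Ω is a clumping index. This is an illustrative assumption, not the project's processing chain. The function names and the three idealized LADs (spherical, horizontal, vertical, with random leaf azimuths) are hypothetical choices. The example generates a gap fraction under one LAD and inverts it under another to show the resulting LAI bias.

```python
import math

# Hypothetical helper: mean leaf projection G(theta) for three idealized
# leaf angle distributions (LADs), assuming random leaf azimuths.
def g_function(theta: float, lad: str) -> float:
    if lad == "spherical":
        return 0.5                                  # independent of view angle
    if lad == "horizontal":
        return math.cos(theta)                      # planophile extreme
    if lad == "vertical":
        return (2.0 / math.pi) * math.sin(theta)    # erectophile extreme
    raise ValueError(f"unknown LAD: {lad}")


def gap_fraction(lai: float, theta: float, lad: str, clumping: float = 1.0) -> float:
    """Beer-Lambert probability of a gap along view zenith angle theta (radians)."""
    return math.exp(-g_function(theta, lad) * clumping * lai / math.cos(theta))


def lai_from_gap_fraction(p_gap: float, theta: float, lad: str, clumping: float = 1.0) -> float:
    """Invert the gap-fraction model for LAI under an assumed LAD."""
    return -math.cos(theta) * math.log(p_gap) / (g_function(theta, lad) * clumping)


# Simulate a canopy of vertical leaves (true LAI = 3) viewed at 30 degrees,
# then invert while (wrongly) assuming a spherical LAD.
theta = math.radians(30.0)
p = gap_fraction(3.0, theta, "vertical")
biased_lai = lai_from_gap_fraction(p, theta, "spherical")
print(f"retrieved LAI assuming spherical LAD: {biased_lai:.2f}")  # → 1.91
```

The roughly one-third underestimate comes entirely from the mismatch between G_vertical(30°) ≈ 0.32 and G_spherical = 0.5. Near a view zenith angle of about 57.5°, G is close to 0.5 for most LADs, which is one reason that angle is often favored for LAI retrieval.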

The Rochester, NY region supports a growing economy of table beet producers, led by Love Beets USA, which operates local production facilities for organic beet products. In collaboration with the Rochester Institute of Technology’s Digital Imaging and Remote Sensing (DIRS) group and Cornell AgriTech, this project explores the use of unmanned aerial systems (UAS) remote sensing to improve beet crop management through yield estimation and plant population assessment. In parallel, we are investigating UAS-based tools for monitoring Cercospora Leaf Spot (CLS), a major foliar disease in table beets. Across the 2021–2023 growing seasons, CLS severity at multiple growth stages was visually assessed by Cornell pathologists, while end-of-season yield parameters—including root weight, root count, and foliage biomass—were collected for model development based on UAS-derived spectral and structural features. The overarching aim is to provide actionable insights for farmers, enabling more efficient and sustainable crop management decisions.

The objectives of this project are to i) identify existing and novel vegetation structural metrics, akin to leaf area index (LAI), that are strongly correlated with ecosystem function and broadly useful for advancing ecological knowledge at multiple spatial scales; ii) develop processing algorithms to derive ecologically significant vegetation structural metrics from “next-generation” spaceborne LiDAR instruments; and iii) assess the capabilities and limitations of current LiDAR systems for producing ecologically meaningful next-generation data products.

This project is investigating the use of passive acoustic sensors to monitor agricultural activities in Rwanda. One potentially important use of sound is detecting birds that may be damaging crops, so that deterrent action can be taken to scare them away. A low-cost network of acoustic sensors could give farmers the ability to collect and analyze sound information about their farms and thereby improve yields.

The TEAL project aims to improve environmental monitoring in the African Great Lakes. We are building field spectrometers to measure the optical properties of lakes. Deploying autonomous field sensor networks at scale, combined with the satellite remote sensing measurements they support, will aid better management of lakes in East Africa. A custom circuit board has been designed to support the dual-spectrometer device, and all of the devices have been integrated.

The Ltome-Katip Project is an Indigenous-led initiative that produces labeled data to support machine learning applications in remote sensing analysis. The project is being implemented simultaneously in two geographically and culturally distinct regions to address human-wildlife conflict: the Namunyak Conservancy in Samburu, Kenya, and the Shuar Territory in the Ecuadorian Amazon. In Kenya the main target is elephants (Ltome), and in Ecuador it is rats (Katip).

Publications

[1] Albano, J., and Denton, J. Passive sensing and image formation via Starlink downlink. In Algorithms for Synthetic Aperture Radar Imagery XXXII (2025), vol. 13456, SPIE, pp. 1–10.

[2] Alveshere, B. C., Siddiqui, T., Krause, K., van Aardt, J., and Gough, C. Hemlock woolly adelgid (Adelges tsugae) infestation influences forest structural complexity and its relationship with primary production. Available at SSRN 5006994 (2024).

[3] Alveshere, B. C., Siddiqui, T., Krause, K., van Aardt, J. A., and Gough, C. M. Hemlock woolly adelgid infestation influences canopy structural complexity and its relationship with primary production in a temperate mixed forest. Forest Ecology and Management 586 (2025), 122698.

[4] Amenyedzi, D. K., Kazeneza, M., Mwaisekwa, I. I., Nzanywayingoma, F., Nsengiyumva, P., Bamurigire, P., Ndashimye, E., and Vodacek, A. System design for a prototype acoustic network to deter avian pests in agriculture fields. Agriculture 15, 1 (2025).

[5] Amenyedzi, D. K., Kazeneza, M., Nzanywayingoma, F., Nsengiyumva, P., Bamurigire, P., Ndashimye, E., and Vodacek, A. Rwandan farmers’ perceptions of the acoustic environment and the potential for acoustic monitoring. Agriculture 15, 1 (2025).

[6] Canas, C. End-to-End Systems Limitations in Hyperspectral Target Detection using Parametric Modeling and Subpixel Lattice Targets for Validation. Thesis, Rochester Institute of Technology, 2025.

[7] Chaity, M. D., Eng, B., Irizarry, J., Saunders, G., Bhatta, R., and van Aardt, J. Evaluating the influence of imaging spectrometer system specifications on ecosystem spectral diversity assessment. In AGU Fall Meeting Abstracts (2024), vol. 2024, pp. GC51CC–0032.

[8] Chaity, M. D., Slingsby, J., Moncrieff, G. R., Chancia, R. O., Bhatta, R., and van Aardt, J. Enhancing Mediterranean biodiversity monitoring in South Africa via machine learning and cost-effective UAS imagery. In AGU Fall Meeting Abstracts (2024), vol. 2024, pp. B11K–1455.

[9] Chaity, M. D., and van Aardt, J. Exploring the limits of species identification via a convolutional neural network in a complex forest scene through simulated imaging spectroscopy. Remote Sensing 16, 3 (2024), 498.

[10] Chaity, M. D., and van Aardt, J. A. Optimization of imaging spectrometer specifications for accurate vegetation species identification: A simulation-based approach using convolutional neural networks. In AGU Fall Meeting Abstracts (2023), vol. 2023, pp. B23L–07.

[11] Chancia, R., Bates, T., Vanden Heuvel, J., and van Aardt, J. Assessing grapevine nutrient status from unmanned aerial system (UAS) hyperspectral imagery. Remote Sensing 13, 21 (2021), 4489.

[12] Chancia, R., van Aardt, J., Pethybridge, S., Cross, D., and Henderson, J. Predicting table beet root yield with multispectral UAS imagery. Remote Sensing 13, 11 (2021), 2180.

[13] Chancia, R., Vanden Heuvel, J., Bates, T., and van Aardt, J. Unmanned aerial system (UAS) imaging for vineyard nutrition: Comparing typical multispectral imagery with optimized band selection from hyperspectral imagery. In AGU Fall Meeting Abstracts (2021), vol. 2021, pp. B55K–1327.

[14] Chancia, R. O., van Aardt, J. A., and MacKenzie, R. A. Enhanced surface elevation change assessment in mangrove forests using a lightweight, low-cost, and rapid-scanning terrestrial lidar. In AGU Fall Meeting Abstracts (2023), vol. 2023, pp. EP31C–2085.

[15] Chatterjee, A., Ghosh, S., Ghosh, A., and Ientilucci, E. Urbanscape-net: A spatial and self-attention guided deep neural network with multi-scale feature extraction for urban land use classification. In International Geoscience and Remote Sensing Symposium (Athens, Greece, July 2024), IEEE.

[16] Chattoraj, D., Chatterjee, A., Ghosh, S., Ghosh, A., and Ientilucci, E. Enhanced multi-class weather image classification using data augmentation and dynamic learning on pre-trained deep neural net. In International Conference on Emerging Techniques in Computational Intelligence (August 2024).

[17] Chhapariya, K., Ientilucci, E., Benoit, A., Buddhiraju, K., and Kumar, A. Joint multitask learning for image segmentation and salient object detection in hyperspectral imagery. In IEEE WHISPERS (August 2024), IEEE.

[18] Chhapariya, K., Ientilucci, E., Buddhiraju, K., and Kumar, A. Target detection and characterization of multi-platform remote sensing data. Remote Sensing 16, 24 (2024), 4729.

[19] Eon, R. S., De Groot, C., Pedelty, J. A., Gerace, A., Montanaro, M., Covington, R. K., DeLisa, A. S., Hsieh, W.-T., Henegar-leon, J. M., Daniels, D. J., Engebretson, C., Crawford, C. J., Holmes, T. R., Dabney, P., and Cook, B. D. Toward a near-lossless image compression strategy for the NASA/USGS Landsat Next mission. Remote Sensing of Environment 329 (2025), 114929.

[20] Gough, C. M., Alveshere, B., Siddiqui, T., Ogoshi, M., van Aardt, J. A., LaRue, E. A., and Krause, K. Advances and opportunities in the characterization of vegetation structure: An ecologist’s perspective. In AGU Fall Meeting Abstracts (2024), vol. 2024, pp. GC54E–02.

[21] Harms, J. D., Bachmann, C. M., Ambeau, B. L., Faulring, J. W., Ruiz Torres, A. J., Badura, G., and Myers, E. Fully automated laboratory and field-portable goniometer used for performing accurate and precise multiangular reflectance measurements. Journal of Applied Remote Sensing 11, 4 (2017), 046014.

[22] Krause, K., van Aardt, J. A., Ollinger, S. V., Patki, K., Ouimette, A., and Hastings, J. Estimation of canopy geometric parameters from full-waveform lidar data for leaf-to-ecosystem spectral scaling. In AGU Fall Meeting Abstracts (2022), vol. 2022, pp. B45B–03.

[23] Lee, C. H., Bachmann, C. M., Nur, N. B., and Golding, R. M. Bidirectional spectro-polarimetry of olivine sand. Earth and Space Science 12, 1 (2025), e2024EA003928.

[24] Lee, C. H., Bachmann, C. M., Nur, N. B., Mao, Y., Conran, D. N., and Bauch, T. D. A comprehensive BRF model for Spectralon and application to hyperspectral field imagery. IEEE Transactions on Geoscience and Remote Sensing 62 (2024), 1–16.

[25] Lekhak, S., and Ientilucci, E. Advancing landmine and UXO detection: A comparative analysis of UAV-based and handheld metal detectors. In AGU Fall Meeting (Washington, DC, December 2024), American Geophysical Union.

[26] Lekhak, S., Ientilucci, E., and Brinkley, T. Viability of substituting handheld metal detectors with an airborne metal detection system for landmine and unexploded ordnance detection. Remote Sensing 16, 24 (2024), 4732.

[27] Levison, H. F., Marchi, S., Noll, K. S., Spencer, J. R., Statler, T. S., Bell III, J. F., Bierhaus, E. B., Binzel, R., Bottke, W. F., Britt, D., et al. A contact binary satellite of the asteroid (152830) Dinkinesh. Nature 629, 8014 (2024), 1015–1020.

[28] Liu, X., and Ientilucci, E. NOx detection in video data based on ensemble machine learning. In ISEE 51st Conference on Explosives and Blasting (Cherokee, NC, February 2025), ISEE.

[29] Liu, X., and Ientilucci, E. Smoke segmentation leveraging multiscale convolutions and multiview attention mechanisms. In AAAI Conference on Artificial Intelligence (Philadelphia, PA, February 2025), AAAI.

[30] Liu, X., and Ientilucci, E. SmokeNet: Efficient smoke segmentation leveraging multiscale convolutions and multiview attention mechanisms. In Computer Vision and Pattern Recognition (Nashville, TN, June 2025), CVPR.

[31] Macalintal, J., and Messinger, D. Radiometric assessment of hyper-sharpening algorithms using a detail-injection convolutional neural network on cultural heritage datasets. In Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imaging XXXI (2025), vol. 13455, SPIE.

[32] MacKenzie, R. A., Krauss, K. W., Cormier, N., Eperiam, E., van Aardt, J., Kargar, A. R., Grow, J., and Klump, J. V. Relative effectiveness of a radionuclide (²¹⁰Pb), surface elevation table (SET), and lidar at monitoring mangrove forest surface elevation change. Estuaries and Coasts 47, 7 (2024), 2080–2092.

[33] Nur, N. B., Bachmann, C. M., Tyler, A. C., Miller, A., Khan, S., Union, K. E., Owens-Rios, W. A., Bauch, T. D., and Lapszynski, C. S. Mapping soil organic matter, total carbon, and total nitrogen in salt marshes using UAS-based hyperspectral imaging. Journal of Geophysical Research: Biogeosciences 130, 6 (2025), e2024JG008421.

[34] Patki, K., Wible, R., Krause, K., and van Aardt, J. Assessing the impact of leaf angle distributions on narrowband vegetation indices and lidar-derived LAI measurements. In AGU Fall Meeting Abstracts (2021), vol. 2021, pp. B25I–1584.

[35] Rouzbeh Kargar, A., MacKenzie, R., Asner, G. P., and van Aardt, J. A density-based approach for leaf area index assessment in a complex forest environment using a terrestrial laser scanner. Remote Sensing 11, 15 (2019), 1791.

[36] Rouzbeh Kargar, A., MacKenzie, R. A., Apwong, M., Hughes, E., and van Aardt, J. Stem and root assessment in mangrove forests using a low-cost, rapid-scan terrestrial laser scanner. Wetlands Ecology and Management 28, 6 (2020), 883–900.

[37] Rouzbeh Kargar, A., MacKenzie, R. A., Fafard, A., Krauss, K. W., and van Aardt, J. Surface elevation change evaluation in mangrove forests using a low-cost, rapid-scan terrestrial laser scanner. Limnology and Oceanography: Methods 19, 1 (2021), 8–20.

[38] Saif, M., Chancia, R., Sharma, P., Murphy, S. P., Pethybridge, S., and van Aardt, J. Estimation of Cercospora leaf spot disease severity in table beets from UAS multispectral images.

[39] Saif, M., Chancia, R. O., Murphy, S. P., Pethybridge, S. J., and van Aardt, J. Assessing multiseason table beet root yield from unmanned aerial systems. In AGU Fall Meeting Abstracts (2024), vol. 2024, pp. B23H–04.

[40] Saif, M. S., Chancia, R., Murphy, S. P., Pethybridge, S., and van Aardt, J. Exploring UAS imaging modalities for precision agriculture: predicting table beet root yield and estimating disease severity using multispectral, hyperspectral, and lidar sensing. In Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imaging XXXI (2025), vol. 13455, SPIE, pp. 43–50.

[41] Saif, M. S., Chancia, R., Pethybridge, S., Murphy, S. P., Hassanzadeh, A., and van Aardt, J. Forecasting table beet root yield using spectral and textural features from hyperspectral UAS imagery. Remote Sensing 15, 3 (2023), 794.

[42] Salvaggio, C., Klosinski, D. P., Mancini, R. A., McDonald, R. B., Mei, P. M., van Aardt, K. S., Eon, R. S., Bauch, T. D., and Raqueño, N. G. Longwave thermal infrared atmospheric correction using in situ scene elements: the multiple altitude technique revisited for small unmanned aircraft systems (sUAS). In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping X (2025), vol. 13475, SPIE, pp. 10–36.

[43] Shayer, A., and Ientilucci, E. GRSS data curation: Benchmark UAV dataset for hyperspectral target detection studies. In International Geoscience and Remote Sensing Symposium (Athens, Greece, July 2024), IEEE.

[44] Siddiqui, T., Alveshere, B. C., Gough, C., van Aardt, J., and Krause, K. Estimating forest productivity using three-dimensional canopy structural complexity metrics derived from small-footprint airborne lidar data. Available at SSRN 5022638 (2024).

[45] Simon, A. A., Kaplan, H. H., Reuter, D. C., Montanaro, M., Grundy, W. M., Lunsford, A. W., Weigle, G. E., Binzel, R. P., Emery, J., Sunshine, J., et al. Lucy L’Ralph in-flight calibration and results at (152830) Dinkinesh. The Planetary Science Journal 6, 1 (2025), 7.

[46] Smith, D. D., Heaton, A. F., Ramazani, S., Tyler, D. A., Johnson, L., Bachmann, C., Shiltz, D. J., Lapszynski, C. S., Nur, N. B., Lee, C. H., et al. Optical performance of reflectivity control devices for solar sail applications. In Optical Modeling and Performance Predictions XIII (2023), SPIE, p. PC1266405.

[47] Spinosa, L. D., Dank, J. A., Kremens, M. V., Spiliotis, P. A., Cox, N. A., Bodington, D. E., Babcock, J. S., and Salvaggio, C. Feasibility of using synthetic videos to train a YOLOv8 model for UAS detection and classification. In Synthetic Data for Artificial Intelligence and Machine Learning: Tools, Techniques, and Applications III (2025), vol. 13459, SPIE, pp. 365–383.

[48] Trivedi, M., Bates, T., Meyers, J. M., Shcherbatyuk, N., Chancia, R. O., Davadant, P., Lohman, R. B., and Vanden Heuvel, J. Enhancing spatial sampling efficiency and accuracy through box sampling for grapevine nutrient management. In AGU Fall Meeting Abstracts (2024), vol. 2024, pp. IN43B–2263.

[49] Trivedi, M. B., Bates, T. R., Meyers, J. M., Shcherbatyuk, N., Davadant, P., Chancia, R., Lohman, R. B., and Vanden Heuvel, J. Box sampling: a new spatial sampling method for grapevine macronutrients using Sentinel-1 and Sentinel-2 satellite images. Precision Agriculture 26, 2 (2025), 35.

[50] Wible, R., Patki, K., Krause, K., and van Aardt, J. Toward a definitive assessment of the impact of leaf angle distributions on lidar structural metrics. In Proceedings of the SilviLaser Conference 2021 (2021), pp. 307–309.

[51] Zhang, F. Tls-showcase. https://github.com/fz-rit/TLS-showcase, 2025. Accessed: 2025-08-01.